Session Replay vs. Usability Testing: Uncovering the Why Behind User Behavior

Anna, a lead product manager at a B2B SaaS company, is responsible for improving the onboarding flow of a financial dashboard. Her goal? Reduce friction and improve user activation.

A week after launching an updated step-by-step onboarding guide, she checks session replays to see how users engage with the new experience.

The numbers tell an interesting story:

- 40% of users drop off at Step 3 (where users select their financial preferences).

- Users repeatedly hover over the “Risk Selection” section but don’t click.

- Many rage-click “Next” before abandoning the process altogether.
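If you want to reproduce a figure like "40% drop off at Step 3" from raw data, one common definition is the share of sessions that reached a step but went no further. The Python sketch below assumes a hypothetical export that lists the furthest onboarding step each session reached. The function name and sample values are illustrative and do not come from any specific session replay tool.

```python
from collections import Counter


def step_drop_off(last_steps: list[int], total_steps: int) -> dict[int, float]:
    """Return, for each step before the last, the share of sessions that
    reached it but went no further.

    `last_steps` holds the furthest step each session reached (1-based).
    Sessions that reached the final step count as completions, not drop-offs.
    """
    reached_counts = Counter(last_steps)
    drop_off = {}
    for step in range(1, total_steps):
        # Sessions that reached this step or any later one.
        reached = sum(n for s, n in reached_counts.items() if s >= step)
        stopped_here = reached_counts.get(step, 0)
        drop_off[step] = stopped_here / reached if reached else 0.0
    return drop_off


# Made-up data: furthest step reached in ten sessions of a 5-step flow.
last_steps = [1, 2, 3, 3, 3, 3, 5, 5, 5, 5]
rates = step_drop_off(last_steps, total_steps=5)
for step, rate in rates.items():
    print(f"Step {step}: {rate:.0%} dropped off")
```

This is a conditional rate (stopped at the step divided by reached the step). Some dashboards report drop-off as a share of all sessions that started instead, which gives smaller numbers for later steps. Check which definition a tool uses before you compare figures.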

Anna now knows what is happening, but she still doesn’t know why.

💡 Session replay tells her that users hesitate at Step 3, but not whether they are confused, frustrated, or distrustful of the interface.

To bridge this gap, she runs a usability test with 8 participants, using a controlled setup where she can track both verbal and non-verbal reactions while collecting quantitative metrics such as:

- Task success rate: Only 50% of participants (4 of 8) completed onboarding.

- Time on task: Participants spent an average of 2 minutes struggling at Step 3.

- Error rate: 25% of participants (2 of 8) selected the wrong risk level but didn’t notice.

- Satisfaction score: The onboarding experience was rated 58, far below the acceptable usability benchmark of 70+.
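The first three metrics are simple ratios and averages over per-participant records. Below is a minimal Python sketch that assumes each session is logged as a hypothetical `Participant` record. The eight records are made up so that they reproduce the figures above and are not real study data.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Participant:
    completed: bool          # finished onboarding
    seconds_on_step3: float  # time spent at Step 3
    made_error: bool         # selected a wrong risk level


def summarize(participants: list[Participant]) -> dict[str, float]:
    """Compute task success rate, mean time at Step 3, and error rate."""
    n = len(participants)
    return {
        "task_success_rate": sum(p.completed for p in participants) / n,
        "mean_seconds_on_step3": mean(p.seconds_on_step3 for p in participants),
        "error_rate": sum(p.made_error for p in participants) / n,
    }


# Hypothetical records for 8 participants.
participants = [
    Participant(True, 100.0, False),
    Participant(True, 140.0, False),
    Participant(False, 110.0, True),
    Participant(True, 130.0, False),
    Participant(False, 120.0, False),
    Participant(True, 120.0, True),
    Participant(False, 90.0, False),
    Participant(False, 150.0, False),
]
summary = summarize(participants)
print(summary)
```

With only 8 participants, a single person shifts each rate by 12.5 percentage points. Small-sample rates like these are better at pointing to problems than at measuring them precisely.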

By observing participants in a controlled setting, Anna discovers the real issues:

- Users don’t understand how risk selection affects their financial profile—the interface lacks clarity.

- The UI doesn’t confirm their selection, leaving users uncertain if they’ve made the right choice.

- Verbal feedback confirms hesitation—participants explicitly mention the fear of making an irreversible mistake.

🔑 Key insight: Session replay shows friction patterns at scale. Usability testing isolates why they occur—by capturing user reasoning, decision-making strategies, and confidence levels in a controlled setting.


What session replay captures (and what it doesn’t)

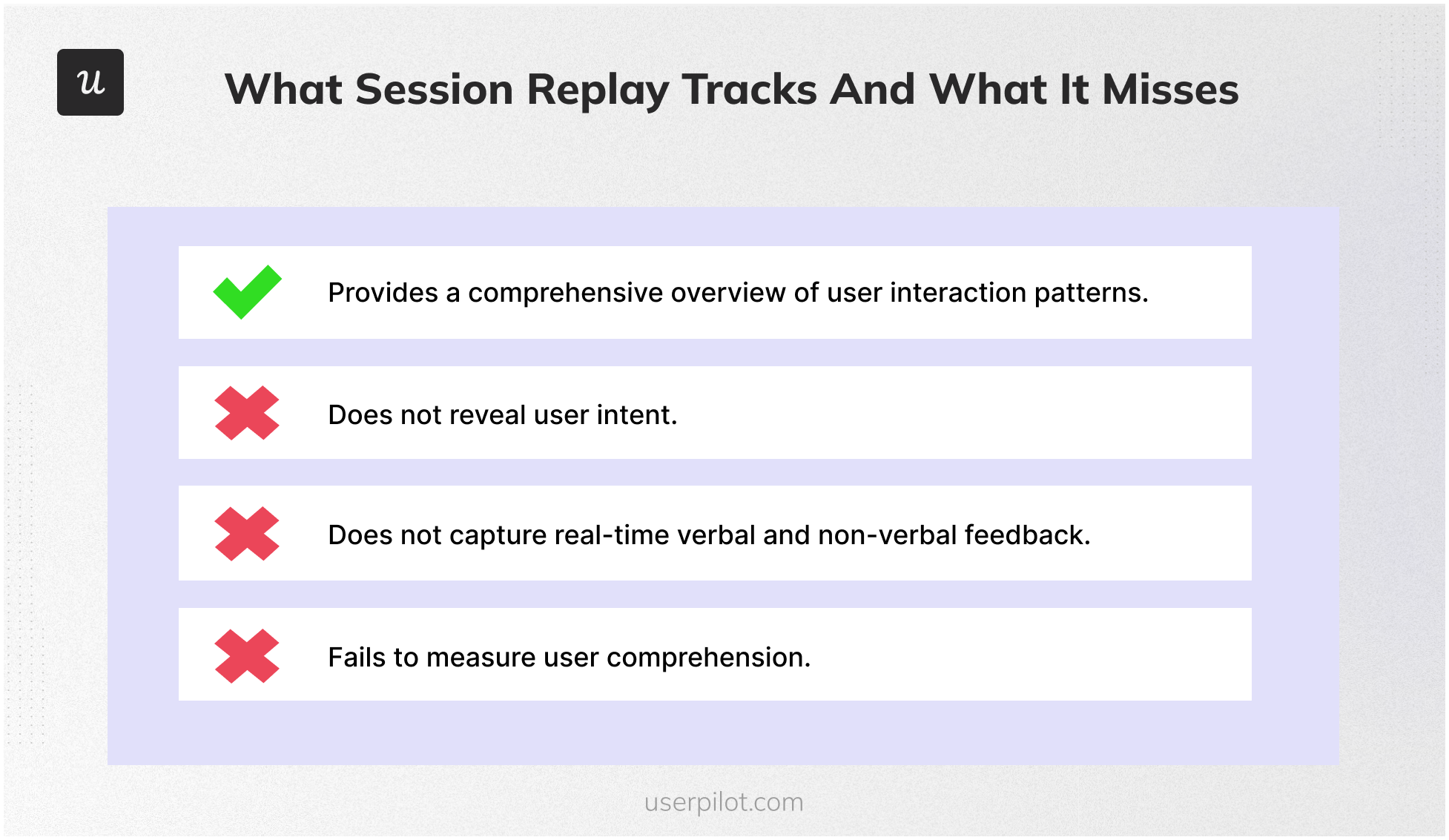

Session replay tools provide a high-level behavioral overview of user interactions by capturing:

- Clickstreams and mouse movements → Where users click, hover, and scroll.

- Rage clicks and dead clicks → Frustration signals: rage clicks are rapid, repeated clicks on the same spot, and dead clicks are clicks on elements that don’t respond.

- Time on task and drop-offs → Tracks where users hesitate or abandon a process.
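Each tool flags these signals in its own way. As a rough illustration, the Python sketch below uses two simple heuristics. A rage click is a burst of several clicks that are close together in time and space. A dead click is a click that gets no UI response (such as a DOM change or navigation) shortly afterwards. The `Click` record, the response timestamps, and the default thresholds (3 clicks, 1 second, 30 px) are all assumptions for illustration and are not any vendor’s actual rules.

```python
from bisect import bisect_left
from dataclasses import dataclass
from math import hypot


@dataclass
class Click:
    t_ms: int   # timestamp in milliseconds
    x: float    # page coordinates in pixels
    y: float


def find_rage_clicks(clicks: list[Click], min_clicks: int = 3,
                     window_ms: int = 1000, radius_px: float = 30.0) -> list[list[Click]]:
    """Group clicks into bursts of at least `min_clicks` clicks that fall within
    `window_ms` and `radius_px` of the burst's first click."""
    clicks = sorted(clicks, key=lambda c: c.t_ms)
    bursts = []
    i = 0
    while i < len(clicks):
        first = clicks[i]
        j = i + 1
        while (j < len(clicks)
               and clicks[j].t_ms - first.t_ms <= window_ms
               and hypot(clicks[j].x - first.x, clicks[j].y - first.y) <= radius_px):
            j += 1
        if j - i >= min_clicks:
            bursts.append(clicks[i:j])
            i = j  # skip past the burst so it is reported only once
        else:
            i += 1
    return bursts


def find_dead_clicks(clicks: list[Click], response_times_ms: list[int],
                     timeout_ms: int = 1000) -> list[Click]:
    """Return clicks that are not followed by any UI response within `timeout_ms`."""
    responses = sorted(response_times_ms)
    dead = []
    for c in clicks:
        idx = bisect_left(responses, c.t_ms)  # first response at or after the click
        if idx == len(responses) or responses[idx] - c.t_ms > timeout_ms:
            dead.append(c)
    return dead


# Hypothetical session: four fast clicks on an unresponsive "Next" button,
# then one click that opens a menu (the UI responds at 3200 ms).
session_clicks = [
    Click(0, 100, 100), Click(200, 102, 101), Click(400, 101, 99), Click(500, 101, 100),
    Click(3000, 400, 300),
]
ui_responses_ms = [3200]
rage = find_rage_clicks(session_clicks)
dead = find_dead_clicks(session_clicks, ui_responses_ms)
print(len(rage), [c.t_ms for c in dead])
```

The four clicks in the burst count both as a rage click and as dead clicks. That overlap is typical when users hammer a control that does nothing.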

📌 Example: In the financial dashboard onboarding scenario above, session replay detects that users hover over a tooltip icon without clicking—but it doesn’t explain why.

Where session replay falls short

- Cannot capture user intent → It shows hesitation, but not whether users are confused, distrustful, or distracted.

- Lacks real-time verbal and non-verbal feedback → You don’t know if users are thinking “I don’t trust this” or just pausing momentarily.

- Does not measure comprehension → Users may progress through a form without truly understanding the choices they’ve made.

The advantage of usability testing: Tracking the why in a controlled environment

Unlike session replay, usability testing is not just about observation—it allows researchers to:

- Control the testing environment to isolate variables affecting user behavior.

- Track verbal and non-verbal behaviors (e.g., eye movements, pauses, hesitations, body language).

- Measure both qualitative and quantitative usability performance, which I’ll cover in detail below.


Quantitative usability metrics

- Task completion rate → Percentage of users who successfully complete an action.

- Time on task → Tracks efficiency, but also hesitation patterns.

- Error rate → Indicates incorrect actions or failed attempts.

- Satisfaction scores (SUS, SEQ, UMUX-LITE) → Measure perceived ease of use and overall satisfaction.

- Problem occurrence rate → Tracks how frequently usability issues arise.
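Of these questionnaires, SUS has a fixed scoring rule. It has ten items, each rated from 1 to 5. Odd-numbered (positively worded) items contribute the rating minus 1, and even-numbered (negatively worded) items contribute 5 minus the rating. The sum is then multiplied by 2.5 to give a score from 0 to 100. Below is a minimal Python version of that rule. The function name is illustrative and the sample responses are made up.

```python
def sus_score(responses: list[int]) -> float:
    """Score one participant's System Usability Scale questionnaire.

    `responses` are the ten item ratings in questionnaire order, each 1-5.
    Odd-numbered items contribute (rating - 1); even-numbered items
    contribute (5 - rating). The 0-40 total is scaled to 0-100.
    """
    if len(responses) != 10 or any(not 1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs ten ratings between 1 and 5")
    total = 0
    for item_number, rating in enumerate(responses, start=1):
        total += (rating - 1) if item_number % 2 == 1 else (5 - rating)
    return total * 2.5


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

A study-level SUS score is usually reported as the mean of the individual scores. SUS scores are not percentages, so a score of 58 does not mean "58% satisfied".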

Qualitative usability metrics

- Hesitation and pauses → Measures uncertainty and cognitive load (e.g., long pauses before an action).

- Facial expressions and body language → Indicates frustration, confusion, or confidence.

- Think-aloud protocol feedback → Captures real-time verbalized thoughts while completing a task.

- Expectation mismatch → Detects when user expectations don’t align with system behavior (e.g., “I thought clicking this would take me to X, but it didn’t”).

- Confidence and trust signals → Captured through post-task reflections, assessing how sure a user was about their action.
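These cues are interpreted by an observer, but timestamps can help you find them in a recording. If your test setup logs timestamped actions, a sketch like the one below flags gaps longer than a chosen threshold, which tells you where to review the video and think-aloud audio. The `Event` format, the event names, and the 5-second threshold are hypothetical. Choose a threshold that fits the task.

```python
from dataclasses import dataclass


@dataclass
class Event:
    t_s: float    # seconds since the task started
    action: str   # e.g. "select_risk_level" (hypothetical event name)


def long_pauses(events: list[Event], threshold_s: float = 5.0) -> list[tuple[str, float]]:
    """Return (action, pause) pairs where the gap since the previous event
    exceeds `threshold_s`. The first gap is measured from task start (t = 0)."""
    pauses = []
    previous_t = 0.0
    for event in sorted(events, key=lambda e: e.t_s):
        gap = event.t_s - previous_t
        if gap > threshold_s:
            pauses.append((event.action, gap))
        previous_t = event.t_s
    return pauses


# Made-up log for one participant at Step 3.
events = [
    Event(2.0, "open_step3"),
    Event(14.5, "select_risk_level"),
    Event(16.0, "click_next"),
]
pauses = long_pauses(events)
print(pauses)
```

The flagged pause only shows where hesitation happened. The think-aloud audio and observer notes still have to explain it.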

📌 Example: Coming back to the original example, session replay showed that users dropped off at Step 3, while usability testing revealed:

- Long pauses before risk selection (hesitation).

- Confused facial expressions as users scrolled back up.

- Users verbalized uncertainty—saying things like “I don’t know if I’m making the right choice here.”

To fix these issues, Anna added a clear explanation under each risk level and a final confirmation message, instead of just tweaking UI colors. The impact? Completion rates increased by 20%, and satisfaction scores rose from 58 to 74.

Session replay vs. usability testing: A complete comparison

| Capability | Session Replay | Usability Testing |
|---|---|---|
| Tracking behavioral trends at scale | ✅ Best for analyzing thousands of sessions | ❌ Not scalable |
| Measuring completion rates, error rates, and time on task | ✅ Tracks passive metrics | ✅ Captures metrics in a controlled setting |
| Identifying rage clicks, dead clicks, scrolling friction | ✅ Detects frustration signals | ❌ Not primary focus |
| Understanding why users hesitate | ❌ Cannot determine intent | ✅ Captures hesitation and cognitive load |
| Measuring confidence, trust, and expectations | ❌ Cannot assess trust issues | ✅ Captures decision-making cues |
| Tracking facial expressions and body language | ❌ Not possible | ✅ Observed in controlled settings |
| Evaluating comprehension and learning barriers | ❌ Cannot assess user understanding | ✅ Think-aloud protocol and expectation mismatch |
| Testing new designs before launch | ❌ Limited to live UI | ✅ Controlled pre-launch usability tests |

💡 Key takeaways

- Session replay is great for detecting macro-level friction.

- Usability testing is essential for diagnosing behavioral and cognitive barriers.

How to integrate both methods for maximum UX impact

- Step 1: Use session replay to identify behavior trends → Look for high drop-off points, repeated clicks, and hesitation patterns.

- Step 2: Conduct usability testing to isolate user intent → Test problematic areas with task-based usability testing and capture verbal and non-verbal feedback.

- Step 3: Implement changes and track post-fix behavior with session replay → Measure improvement in completion rates, hesitation time, and error reduction.
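Before you credit a fix, check whether the change in completion rate is larger than chance would explain. Below is a minimal Python sketch that uses a pooled two-proportion z-test. It assumes you can export "completed" and "started" counts for the step from before and after the release. The counts are hypothetical. The helper reports both the absolute change (in percentage points) and the relative change, because a phrase like "increased by 20%" can mean either one.

```python
from math import erfc, sqrt


def rate_change(before: float, after: float) -> tuple[float, float]:
    """Return (absolute change in percentage points, relative change)."""
    return (after - before) * 100, (after - before) / before


def two_proportion_z_test(success_a: int, total_a: int,
                          success_b: int, total_b: int) -> tuple[float, float]:
    """Compare two completion rates with a pooled two-proportion z-test.

    Returns (z, two_sided_p). Assumes independent samples large enough for
    the normal approximation (roughly 5+ successes and failures per group).
    """
    p_a = success_a / total_a
    p_b = success_b / total_b
    pooled = (success_a + success_b) / (total_a + total_b)
    se = sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if se == 0:
        raise ValueError("Both groups are all successes or all failures")
    z = (p_b - p_a) / se
    p_value = erfc(abs(z) / sqrt(2))  # two-sided p-value under the standard normal
    return z, p_value


# Hypothetical counts: sessions that completed the step / sessions that started it.
points, relative = rate_change(600 / 1000, 720 / 1000)
z, p = two_proportion_z_test(600, 1000, 720, 1000)
print(f"{points:+.1f} points, {relative:+.0%} relative, z={z:.2f}, p={p:.2g}")
```

A before/after comparison is not a randomized experiment. Seasonality, traffic mix, or other releases can also shift completion, so an A/B test gives stronger evidence when you can run one.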

Conclusion: The power of combining behavioral analytics and controlled research

Here are some key lessons you should take away from this article:

- Session replay tracks usability at scale but lacks cognitive insights.

- Usability testing isolates reasoning and decision-making behaviors.

- Verbal and non-verbal cues add essential context to user frustration.

- Combining both methods ensures a complete UX research approach.

👉🏻 Final thought: Quantitative data shows friction. Controlled research reveals intent. The best UX strategies leverage both.
