Should User Research Be Left at the Mercy of AI?

Every time I begin a new project, I am faced with a dilemma: Should I keep it “in-house” and develop the best possible solution with my team or send a request to the user research department?

If I choose the latter, I have to expect development to be delayed by weeks, or even months, before they come back with an answer. It’s understandable, though: they are swamped with work, and there are only so many researchers available.

Thus, it’s no surprise that managers turn their eyes to AI, particularly AI agents, hoping those systems can speed up and enrich the user research project. Will they? And can they?

The famous quote from the movie Jurassic Park comes to mind:

“Your scientists were so preoccupied with whether they could, they didn’t stop to think if they should.”

So, let’s not let the AI agents’ “dinos” roam the Earth freely just yet, and let’s stop to question whether user research should be left entirely to AI. Let me share my thoughts.

Is it possible to hand over user research to AI?

Let me start by saying I will skip the moral and societal impact of replacing even part of the specialized workforce with bots. I’m only interested in whether it’s feasible to expect this to happen and how it will impact the quality of the research result.

Can we create better products when we pass on the work to the machines?

So far, that hasn’t happened, and no product exists that uses AI agents to perform end-to-end user research. AI is incorporated into existing, user-operated pieces of software, but only as bits and bobs scattered across different programs and stages of the research process. However, what will the future bring?

Well, some say we have reached the peak of LLM capabilities and that there is no more training data left that could be used to improve them.

Others proclaim that a so-called “singularity,” the emergence of a superior Artificial General Intelligence, is just around the corner and will be able to do anything. There is also Satya Nadella, CEO of Microsoft, who recently speculated that SaaS is about to be replaced by upcoming AI agents.

I can see what he meant. After all, SaaS, or any other software for that matter, is simply an interface between a human mind and code that solves a problem. Satya is picturing a future where you are a boss who assigns tasks to employees (AI agents), and you don’t really care how they are carried out or what tools are used.

Only the results matter.

It’s not crazy to picture a future where the UI layer is no longer needed, and code interacts with other code directly to achieve results. That can easily translate to user research.

That being said, my experience with AI so far (agents or otherwise) is that these tools can get you 80-90% of the way to where you need to be. After that, a human hand needs to take over to make the outcome match what was envisioned.

You could argue that this will only get better in time, but it’s not a guarantee. I believe that SaaS will transform massively and become much more effective, but saying it’s about to become obsolete is a step too far.

With that, let’s return to the main focus: Will AI Agents replace human researchers? To give you a clear answer, let’s break down the different research techniques that this theoretical piece of software should cover.

I’ll start with Satya’s vision of almost UI-less software and propose that we interact with our “AI Research Suite” through a simple prompt, e.g., “Find me the best way to encourage the user to share their personal data”.

Here, the agent could ask a few follow-up questions or take in additional data, but we want to give the bot the assignment, leave it, relax, and pick up the results when they are available.

Can the agents use all the available methods successfully? Let’s see:

Quantitative research

These include:

- Surveys/questionnaires

- A/B testing

- Analytics review

- Card sorting

- Heatmaps

- Eye-tracking studies

Where AI truly shines is in eliminating the grunt work: analyzing terabytes of data, cross-referencing usage metrics, grouping feedback thematically, and providing initial analysis. For that reason, AI is not only being introduced into most research tools, but we can also expect it to handle more and more of that work, and to handle it better.

Depending on the quality and reliability of the results, AI agents could potentially replace researchers entirely, but that won’t happen overnight. While some tasks, like analyzing surveys and client feedback, can already be covered easily with general-purpose LLMs without additional training, other aspects are much trickier.

LLM-based AIs are not very good at math, and thus they are not that good at analyzing tons of numbers to come up with numerically derived insights. That would require a dedicated ML-based system, and since we are talking about a “plug and play” approach, it would need to find insights out of the box, ideally without first being trained on the client’s data. Of course, there are already analytics suites attempting this approach, but for now at least, I have not heard of general AI replacing data analysts.
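To make “numerically derived insights” a bit more concrete, here is a minimal sketch of the kind of calculation I’d rather see an agent delegate to plain, deterministic code than attempt inside an LLM: checking whether an A/B test result is more than noise. It uses a standard two-proportion z-test. The function name and all the numbers are made up for illustration, and the sketch does not guard against edge cases such as zero conversions in both variants.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value

# Hypothetical data: variant A got 200 consents out of 2000 users,
# variant B got 260 out of 2000.
z, p = two_proportion_z_test(200, 2000, 260, 2000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

In an agent setup, the LLM would decide which test to run and explain the outcome, while the arithmetic itself stays in code like this.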

Of course, if all that were to be done by an AI agent, there would be tons of technical speed bumps along the way (e.g., finding an automated and secure way for the agents to access all the data they need, universally, in any client’s tech stack), but that is not the researchers’ work to begin with.

Still, if we wanted full end-to-end agent coverage, we would need to add a “developer” agent able to implement A/B tests and heatmaps, and to create and email (or embed in the UI) surveys. That is additional complexity on top of an already tall order, and it only grows with the variety of potential clients’ tech stacks and security limitations.

So, an end-to-end approach might simply be too unrealistic, but generating the means to gather and then analyze the user research data feels almost inevitable, rather than simply possible.

If ChatGPT is any indicator of the future, a researcher will still need to be present at the end to sanity-check the results (e.g., for hallucinations), but the amount of manual and intellectual labor needed will be significantly reduced.

Qualitative research

These include:

- Interviews

- Focus groups

- Field studies (contextual inquiry)

- Diary studies

- Ethnographic studies

- Think-aloud protocols

Here I’m a little more reluctant to call AI replacement imminent. Trust and openness are essential when it comes to this type of user research.

In a typical research scenario, an interviewer is forging an empathetic bond with participants by listening to their problems, frustrations, and joys. We humans have an uncanny ability to decipher subtle emotional cues, create a safe space for participants to open up, and pivot the conversation on the fly if we sense a user is holding back.

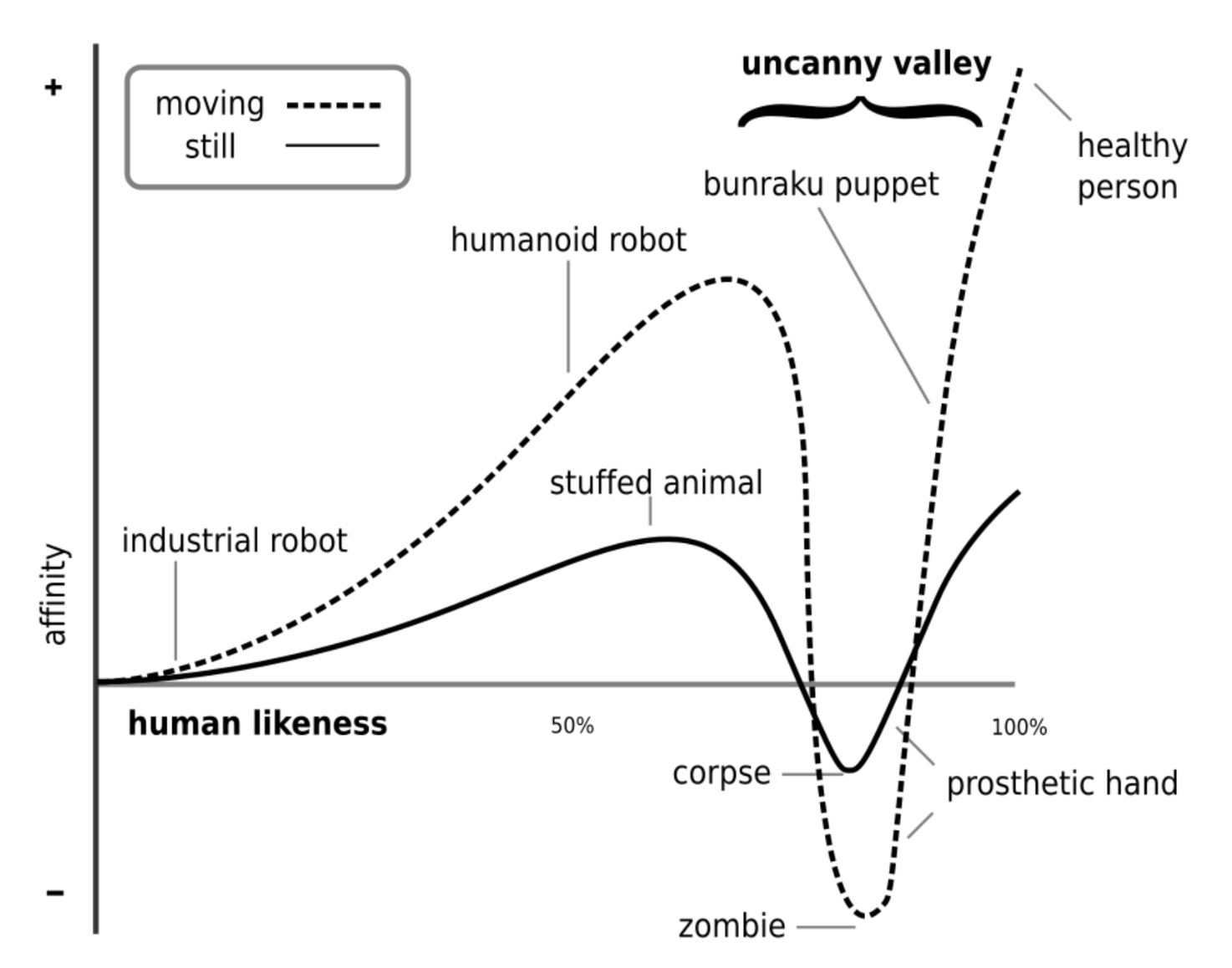

So far, AI has not been able to utilize the full spectrum of human empathy, even if certain chatbots are adept at mimicking it. Sure, advanced voice AIs can sometimes pass as humans for a few minutes. Still, there’s a certain “uncanny valley” that users sense.

It might be a too-consistent speaking style, an awkward laugh, or an inability to handle tangential stories with genuine curiosity. Not to mention the confident, nonsensical replies when the audio quality is poor and the AI can’t understand us clearly.

If you want to know what I mean, book a free call with a product called boardy.ai.

It’s a tool that will interview you based on your LinkedIn profile to find a good match for your business needs and recommend your services to others. Even though the voice is perfect and the whole conversation feels organic, it quickly becomes obvious that you are not talking to a real human.

Another AI-agent-based example of software performing convincing calls is Synthflow.ai. Check out the video on their homepage to see (hear?) how well it performs.

There is, however, one aspect in which AI interviewers can really surpass their living counterparts: the scale of operation.

As already mentioned, a human team is limited by its size, availability, and working hours. The AI agent version would only be held back by the server budget and the availability of people to speak with.

At this scale, interviews of poor quality (for whatever reason) can simply be disregarded, and only the clear and meaningful conversations need to be considered.

So, in theory, there could be thousands of interviews happening every day, transforming this approach into a hybrid qualitative/quantitative method, as AI could easily extract trends from all of the calls taken. This is something that no human-operated user research team can provide.
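As a rough illustration of that hybrid idea, the sketch below drops low-quality calls and then reports how often each theme comes up in the rest. Everything in it is an assumption made for the example: the `Interview` structure, the 0-to-1 quality score, the 0.7 threshold, and the theme tags, which a real system would have to produce upstream (for instance, by having an LLM label each transcript).

```python
from collections import Counter
from dataclasses import dataclass

@dataclass
class Interview:
    quality: float        # hypothetical 0..1 score, e.g. transcript confidence
    themes: frozenset     # theme tags assigned to the call upstream

def theme_shares(interviews, min_quality=0.7):
    """Share of usable interviews mentioning each theme, most common first."""
    usable = [i for i in interviews if i.quality >= min_quality]
    if not usable:
        return []
    counts = Counter(theme for i in usable for theme in i.themes)
    return [(theme, n / len(usable)) for theme, n in counts.most_common()]

# Made-up sample: four calls, one of them with audio too poor to trust.
calls = [
    Interview(0.9, frozenset({"privacy", "pricing"})),
    Interview(0.8, frozenset({"privacy"})),
    Interview(0.3, frozenset({"onboarding"})),  # below threshold: dropped
    Interview(0.95, frozenset({"privacy", "onboarding"})),
]
for theme, share in theme_shares(calls):
    print(f"{theme}: {share:.0%}")
```

With four calls this is trivial, but the same counting works unchanged on thousands of them, which is exactly where a human team cannot keep up.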

Going deeper: UI and prototype user testing

I wouldn’t do this article justice if I stopped here, limiting it to gathering research through natural language, text, and data. People are visual creatures, and we respond best to images and tangible prototypes.

Thus, if a successful AI agent is to answer the sample question I presented earlier, it needs to generate UI proposals and run them by potential users. That could work even better with clickable prototypes, which would streamline and “de-humanize” the research process.

This already exists and works in many UI design SaaS products. One such example is Uizard.

The implementation here is exactly what ChatGPT and Midjourney have led us to expect: you describe the UI that needs to be created in a prompt, and the AI generates several proposals.

Now, combine that with the unlimited scale of AI agents, and you can have dozens if not hundreds of different UI proposals verified against a big enough audience. Thus, you could achieve the level of user-centric product polish that would not be possible in the current era.

Today, even if the Product Manager is dead set on a level of perfection that would require years of meticulous testing, pure common sense makes the team stop the search after a few weeks.

Summary

Going back to the original question, “Should User Research Be Left at the Mercy of AI?” I can only give the stereotypical Product Manager reply:

“It depends.”

It depends on the quality of the data being generated and received and the associated costs. I don’t believe we are ready for that step just yet, but we are in the early days of AI agents.

Who knows what will happen in the near future, but the brutal truth is that if the AI-based user research software proposed here can do the same work (and more), then it is only logical to have it do so.

A more realistic take on what we observe in the AI market now is that more and more elements will become AI-driven, but there will be a researcher there to counteract obvious AI mistakes and compensate whenever hallucination takes over.

That is, until AGI really emerges in an affordable and easily replicable form. In that case, if we still want to work, we should all just become goose farmers or something.

Perhaps it will ultimately lead to a better society where the pursuit of wealth is no longer the goal, and everything is available for free, provided by machines. The fan of Star Trek in me can only dream, but for now, let’s see what happens next.