In 2006, British mathematician Clive Humby made the famous statement: “Data is the new oil.” This wasn’t just a catchy phrase; it was a powerful metaphor.

Like oil, raw data needs to be refined, processed and turned into something useful because its value lies in its potential. Unfortunately, most people have yet to understand what it truly means to use data.

Leveraging product analytics isn’t just about making pretty dashboards; it’s about viewing your existing data as a learning opportunity to make informed decisions with your onboarding strategy.

In this blog post, I’ll discuss the 7 most common analytics mistakes made by product managers and data analysts today and explain how you can overcome them with the right approach.

Mistake #1: Tracking everything without clear objectives

One of the most common mistakes data analysts make is tracking every possible metric simply because they can. This can send them down a rabbit hole of numbers and spreadsheets, where they forget what they’re looking for.

However, teams that attempt to simplify by measuring only one or two KPIs also risk missing critical patterns or early warning signs in their product’s performance.

How to solve this issue?

Start with your core business goals and map out 3-5 key metrics that directly support these objectives. If you aim to improve user activation, focus on time to value, feature adoption rates, and the completion of key activation events.
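To make this concrete, here is a minimal sketch of how activation rate and time to value could be computed from a raw event log. The event names (`signed_up`, `created_first_report`), the sample data, and the function names are all hypothetical; your own activation event will depend on your product.

```python
from datetime import datetime
from statistics import median

# Hypothetical event log: (user_id, event_name, timestamp).
# The event names are illustrative, not taken from any real product.
EVENTS = [
    ("u1", "signed_up", datetime(2024, 1, 1, 9, 0)),
    ("u1", "created_first_report", datetime(2024, 1, 1, 9, 30)),
    ("u2", "signed_up", datetime(2024, 1, 2, 10, 0)),
    ("u2", "created_first_report", datetime(2024, 1, 4, 10, 0)),
    ("u3", "signed_up", datetime(2024, 1, 3, 8, 0)),
    ("u4", "signed_up", datetime(2024, 1, 3, 12, 0)),
]


def first_event_times(events, name):
    """Map each user to the earliest timestamp of the named event."""
    first = {}
    for user, event, ts in events:
        if event == name and (user not in first or ts < first[user]):
            first[user] = ts
    return first


def activation_metrics(events, signup="signed_up", activation="created_first_report"):
    """Return activation rate and median hours from signup to activation."""
    signups = first_event_times(events, signup)
    activations = first_event_times(events, activation)
    # Hours to value for users who activated after signing up.
    hours = [
        (activations[u] - signups[u]).total_seconds() / 3600
        for u in signups
        if u in activations and activations[u] >= signups[u]
    ]
    return {
        "signed_up": len(signups),
        "activated": len(hours),
        "activation_rate": len(hours) / len(signups) if signups else 0.0,
        "median_hours_to_value": median(hours) if hours else None,
    }


metrics = activation_metrics(EVENTS)
print(metrics)
```

The median is used here rather than the mean because a few slow activators can skew an average; that is a design choice, not a rule.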

The toughest challenge? Setting realistic targets. Your first goals won’t be perfect. Maybe you aim for 10% of users to adopt a feature within three months and realize it’s too ambitious. Each iteration gets you closer to the numbers that actually matter.

Dashboards provide a bird’s eye view, while specific reports can uncover deeper patterns. Identify your product’s core features and ensure everyone knows the ‘Aha!’ moment that makes users stick.

Mistake #2: Looking at aggregate data alone and not segmenting properly

Aggregate data gives you the big picture but can obscure critical patterns. By lumping all users together, product managers risk overlooking behaviors unique to specific groups, leading to misguided decisions and wasted effort.

Focusing solely on individual user-level data isn’t practical either. Without a framework to organize insights, you’ll drown in data and be unable to identify actionable trends that drive product improvements.

How to solve this issue?

The key is to segment your analytics based on your specific goal. Break down user behavior by journey stage, NPS scores, company size, account type, or usage frequency.

Vanity metrics, like page views, can’t tell you what specific users are actually doing. Segmentation helps you determine the root causes and act on the real behaviors of your most important users.
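As a rough illustration of how an aggregate number can hide what segments are doing, the sketch below compares an overall feature adoption rate with the same rate broken down by account type. The segment names and user records are invented for the example.

```python
from collections import defaultdict

# Hypothetical per-user records: (user_id, segment, adopted_feature).
USERS = [
    ("u1", "enterprise", True),
    ("u2", "enterprise", True),
    ("u3", "enterprise", True),
    ("u4", "enterprise", False),
    ("u5", "smb", False),
    ("u6", "smb", False),
    ("u7", "smb", True),
    ("u8", "smb", False),
    ("u9", "smb", False),
    ("u10", "smb", False),
]


def adoption_rate(rows):
    """Share of users in `rows` who adopted the feature."""
    rows = list(rows)
    if not rows:
        return 0.0
    return sum(1 for _, _, adopted in rows if adopted) / len(rows)


def adoption_by_segment(users):
    """Group users by segment and compute the adoption rate for each group."""
    groups = defaultdict(list)
    for row in users:
        groups[row[1]].append(row)
    return {segment: adoption_rate(rows) for segment, rows in groups.items()}


overall = adoption_rate(USERS)
by_segment = adoption_by_segment(USERS)

print(f"overall: {overall:.0%}")
for segment, rate in by_segment.items():
    print(f"{segment}: {rate:.0%}")
```

In this made-up data the overall rate looks middling, while the breakdown shows one segment adopting heavily and the other barely at all, which would call for two different responses.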

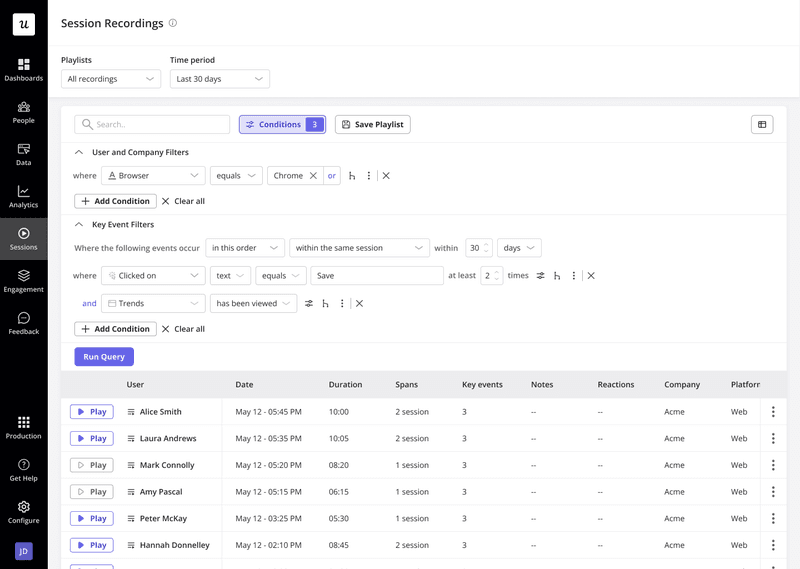

I’ve seen how segmenting by user persona can empower product and design teams to craft experiences tailored to distinct user needs. Plus, with session replays, you can watch exactly how different segments navigate your product.

This level of insight helps you spot gaps that aggregate numbers miss and leads to better-grounded decisions.

Mistake #3: Choosing the wrong data visualization

Data visualizations are meant to make analytics easy to understand. The choice of visualization feels like a minor detail because, after all, the insights are already there.

However, a poorly chosen visualization can distort data interpretation, even if the numbers are accurate.

For instance, using a pie chart to track trends over time can mislead viewers by implying static proportions when the data is dynamic. This can lead to misinformed decisions based on an avoidable misunderstanding.

How to solve this issue?

Visualize data according to the story the data needs to tell.

For example, a line graph clearly shows growth trends when you track monthly feature adoption, while a bar chart might better represent a breakdown of usage by customer segment.

Bad visualizations don’t just confuse; they undermine trust in the data. By choosing the right tools and being intentional with how metrics are displayed, product managers can unlock the full potential of their data without leaving room for costly misunderstandings.

Mistake #4: Misinterpreting correlation as causation

When two metrics rise or fall together, assuming a cause-and-effect relationship is tempting.

For example, a spike in user logins coinciding with a feature launch might seem to be evidence that the feature drove engagement. This assumption feels intuitive, making it an easy pitfall for even experienced teams.

However, that spike in logins could be due to other factors, like a seasonal trend or a marketing campaign.

Acting on unvalidated assumptions can lead to misguided product changes, like doubling down on a feature that wasn’t the real driver of user activity.

How to solve this issue?

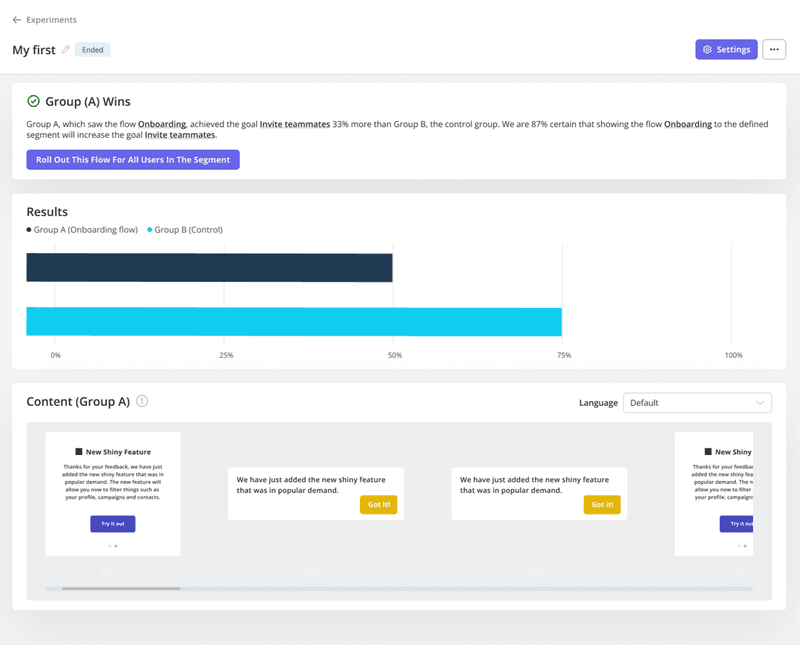

Validate causation through controlled experiments like A/B testing.

For instance, if you suspect a new onboarding feature improves user retention, test it by splitting users into two groups: one that experiences the new feature and one that doesn’t. Compare the retention rates to see if the feature genuinely impacts behavior.
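One common way to compare the two retention rates is a two-proportion z-test. The sketch below is a simplified illustration, assuming users were randomly assigned to each group and that both groups are reasonably large; the counts and the function name are hypothetical.

```python
import math


def two_proportion_z_test(retained_a, total_a, retained_b, total_b):
    """Compare retention in control (a) and variant (b).

    Returns (rate_a, rate_b, z, two_sided_p_value).
    """
    rate_a = retained_a / total_a
    rate_b = retained_b / total_b
    # Pooled retention rate under the null hypothesis of no difference.
    pooled = (retained_a + retained_b) / (total_a + total_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / total_a + 1 / total_b))
    if std_err == 0:
        raise ValueError("Retention is 0% or 100% in both groups; test is undefined.")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_a, rate_b, z, p_value


# Hypothetical counts: 1,000 users per group.
rate_a, rate_b, z, p_value = two_proportion_z_test(400, 1000, 460, 1000)
print(f"control: {rate_a:.1%}, variant: {rate_b:.1%}, z = {z:.2f}, p = {p_value:.4f}")
```

A small p-value suggests the difference is unlikely to be random noise, but it is worth deciding on the sample size and the success metric before the test starts rather than after peeking at results.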

Avoid mistakes like these by anchoring decisions in experiments rather than assumptions. It’s the difference between making confident changes and taking expensive shots in the dark.

Mistake #5: Not combining qualitative with quantitative data analysis

Numbers tell you what is happening, but they don’t explain why.

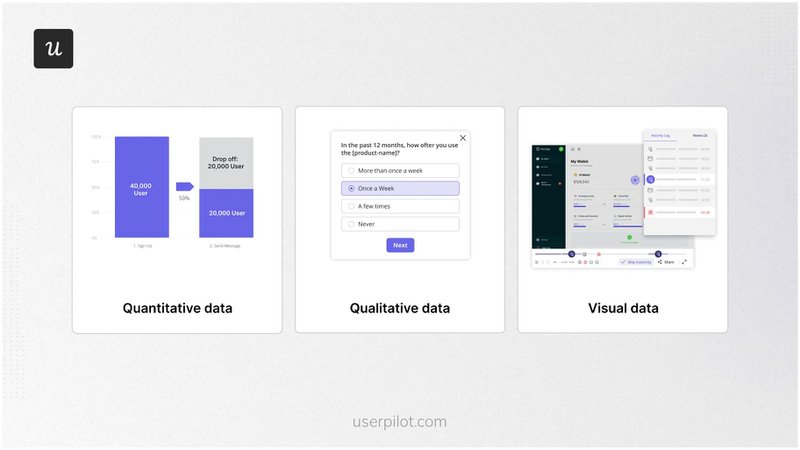

Quantitative data like feature adoption rates or time-to-value is critical, but relying solely on it can lead to missing the bigger picture of user behavior and experience.

For example, if users abandon an onboarding flow, the numbers can’t tell you if the issue is unclear instructions, a technical glitch, or unmet expectations.

Ignoring the qualitative side leads to incomplete insights. Feedback from surveys, interviews, or open-ended questions captures what users think and feel, providing the context to make informed decisions. Pairing this with visual analysis, like session replays, adds another layer of depth by accurately showing how users interact with your product.

How to solve this issue?

At Userpilot, we routinely combine these three data types. Quantitative metrics highlight patterns, while surveys allow us to follow up with questions that dig deeper. For issues that need more clarity, we conduct interviews to understand user challenges directly.

Our session replays revealed users struggling to connect Userpilot to their BI tools. A follow-up survey confirmed that button labels were confusing. This combination of data helped us prioritize a small but impactful UI change that improved everyone’s experience.

Integrating quantitative metrics with qualitative and visual insights ensures a comprehensive and actionable analysis. Listening to users and making them feel heard strengthens decisions and builds trust, creating a better product for all.

Mistake #6: Not acting on product analytics insights

Collecting data is the first step; the real value lies in turning that data into actionable improvements. Many teams gather raw metrics but fail to move beyond analysis, resulting in wasted potential.

For example, you might discover that a specific feature has low adoption, but without a process for acting on that insight, nothing changes and the issue persists.

How to solve this issue?

Teams need a systematic approach to convert insights into action.

Start by scheduling regular analytics review sessions to discuss key findings. Prioritize insights based on their potential impact on business goals, then create clear, actionable tasks.

For instance, if data shows a drop in activation rates, you could redesign the onboarding flow to address user confusion.

Without action, insights are just numbers. Building a clear review, prioritization, and follow-up process ensures your product evolves based on real user behavior.

Mistake #7: Not democratizing product analytics data

When product analytics data stays siloed within the product team, its impact is limited. Other departments, like customer success or marketing, miss out on insights that could inform their strategies.

For instance, if the product team knows which features drive the most engagement but doesn’t share this with marketing, campaigns may promote the wrong aspects of the product.

Sharing data across teams isn’t just about transparency. It’s about enabling everyone to make data-driven decisions. When analytics are accessible, the entire organization can make more aligned, informed business decisions.

Turn analytics to action with Userpilot

The seven analytics mistakes we mentioned could be costing you revenue without you realizing it. Analyzing data effectively is critical in an industry with growing customer expectations and fierce competition.

Book a free demo with Userpilot to learn how you can avoid common mistakes and turn your analytics into actionable insights.
