If you’re searching for “survey examples,” you probably want to know:

- What do effective surveys from leading companies look like?

- What makes a customer feedback survey great?

- How do you design surveys for maximum engagement?

Was my guess right? If so, you’re in the right place.

Building a great survey requires two things: getting the types of survey questions right for your data needs and ensuring you have a library of good survey questions to pull from. But seeing those elements in action is where the real inspiration happens.

In this article, I’ll share 9 survey examples from successful SaaS companies, highlight what makes a survey effective, show you how to design a good survey, and answer some FAQs about SaaS surveys.

Keep on reading 👇🏼

9 effective survey examples from leading companies

Here are 9 surveys that top-notch SaaS companies use for different purposes and at different stages of the customer journey.

1. ClearCalcs welcome survey

The 5-question ClearCalcs welcome survey asks users about their role (e.g., engineer, architect, or builder), the jobs they need to get done, and the primary objectives they hope to achieve.

Its purpose is to collect data necessary to personalize the onboarding experience by highlighting relevant features or resources for each user type.

Key takeaways from this example

- Onboarding starts with understanding roles: By identifying user roles early, ClearCalcs can customize onboarding flows for each user type, which can shorten time to value and increase adoption.

- Simplicity wins: Each question is on a separate screen and the survey is visually appealing, with a clean layout and clear options, making it easy to complete.

2. Wordtune’s customer research survey

Wordtune uses this targeted survey to find out which other software its users work with during the workday.

The survey aims to better understand users’ workflows and help prioritize product development initiatives, like new integrations, that add the most value to its users.

Key takeaways from this example

- Ask relevant and specific questions: The survey has a clear purpose and only asks questions aligned with it.

- Keep it short and sweet: The survey contains only one question. It takes a few seconds to answer, which increases the likelihood that users will complete it.

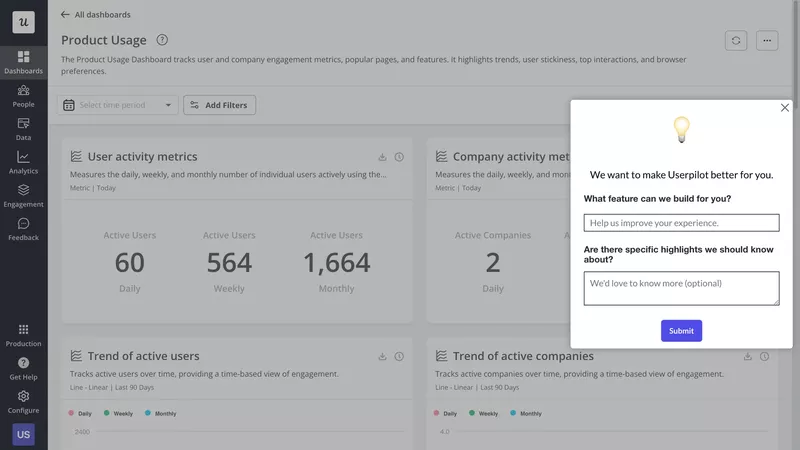

3. Userpilot’s feature request survey

Userpilot’s feature request survey, accessible through its resource center feedback widget, allows users to directly share their thoughts on what features they’d like to see next.

This survey gathers actionable insights from users to inform feature prioritization and ensure the product evolves based on real customer needs.

Key takeaways from this example

- In-app placement improves engagement: The feedback widget lets users submit ideas at the most relevant moment, while they’re actively using the tool.

- On-demand feedback gives a more complete picture: When users can submit requests whenever they want, you miss fewer valuable insights.

- Encourages open-ended feedback: By asking “What feature can we build for you?”, Userpilot doesn’t restrict the user’s options or ideas.

- Optional follow-ups reduce friction: Users who don’t want to answer the follow-up question can simply skip it.

4. Wise’s net promoter score survey

Wise uses a clean, straightforward email-based NPS survey to measure customer loyalty.

The survey asks customers how likely they are to recommend Wise to a friend on a scale of 0–10. The question is quick to answer, which makes the feedback collection process frictionless.

Key takeaways from this example

- Adjust the survey channel to your product and user segments: Most users don’t use the Wise app daily, so emailing the survey reaches more of them.

- Keep the survey simple to encourage participation: With just one question and a clean design, the survey facilitates high response rates.
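Wise’s example doesn’t show how the 0–10 answers become a score, but NPS is conventionally calculated as the percentage of promoters (9–10) minus the percentage of detractors (0–6), with passives (7–8) counted only in the total. Here is a minimal Python sketch of that standard formula; the function name and sample ratings are illustrative, not taken from Wise or any survey tool.

```python
def nps(scores: list[int]) -> float:
    """Return the Net Promoter Score (-100 to 100) for a list of 0-10 ratings."""
    if not scores:
        raise ValueError("need at least one response")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("NPS ratings must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # ratings of 9-10
    detractors = sum(1 for s in scores if s <= 6)  # ratings of 0-6
    # Passives (7-8) count toward the total but not toward either group.
    return 100 * (promoters - detractors) / len(scores)


# 4 promoters, 3 passives, and 3 detractors out of 10 responses
print(nps([10, 9, 9, 10, 8, 7, 7, 6, 3, 0]))  # 10.0
```

Because passives dilute both groups, a survey with many 7s and 8s lands close to zero even if nobody is unhappy.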

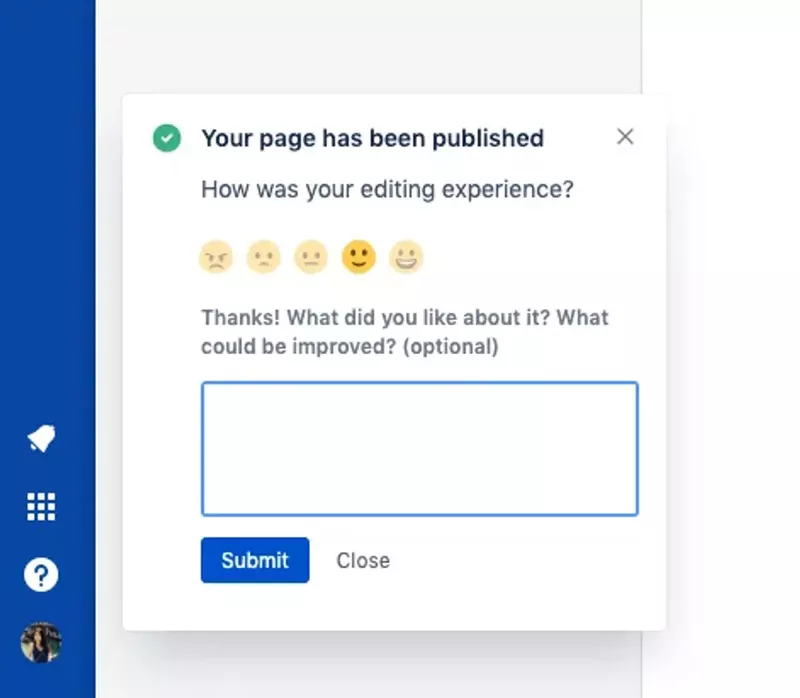

5. Jira’s customer effort score survey

Jira uses a contextual in-app CES survey to gather feedback immediately after a task is completed (in this case, publishing a page).

The survey asks, “How was your editing experience?”, with an emoji-based rating scale for quick responses and an optional follow-up for detailed feedback. Its purpose is to measure how easy it is for users to complete key actions and identify areas for improvement in the user experience.

Key takeaways from this example

- Contextual timing matters: Triggering the survey right after task completion captures fresh and accurate feedback.

- Visual simplicity boosts engagement: Emoji ratings make the survey quick and fun to fill out.

6. Canva’s product improvement survey

Canva’s product improvement survey allows users to provide open-ended feedback and attach screenshots or videos to clarify their input.

Its goal? To identify usability issues, gather feature requests, and understand user pain points in a structured yet flexible format.

Key takeaways from this example

- Multimedia support adds clarity: Letting users attach screenshots or videos shows developers exactly what users are struggling with.

- Privacy assurance builds trust: Canva’s explicit note about handling sensitive information reassures users and encourages participation.

7. HubSpot’s customer satisfaction survey

HubSpot’s CSAT survey uses a simple 7-point rating scale to measure customer satisfaction after a specific interaction.

The question, “How satisfied were you with your experience today?”, is straightforward, and because the survey is tied to a specific interaction, the answers point HubSpot to the areas that need improvement.

Key takeaways from this example

- Context matters: Tying the survey to specific touchpoints ensures that feedback is relevant and actionable.

- Clear labels prevent misunderstandings: Without labels, some users might read 1 as the best score and 7 as the worst, which would skew the results.

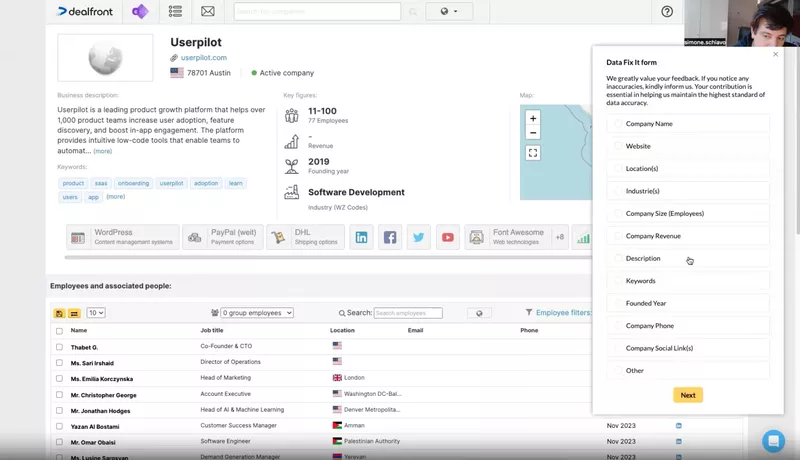

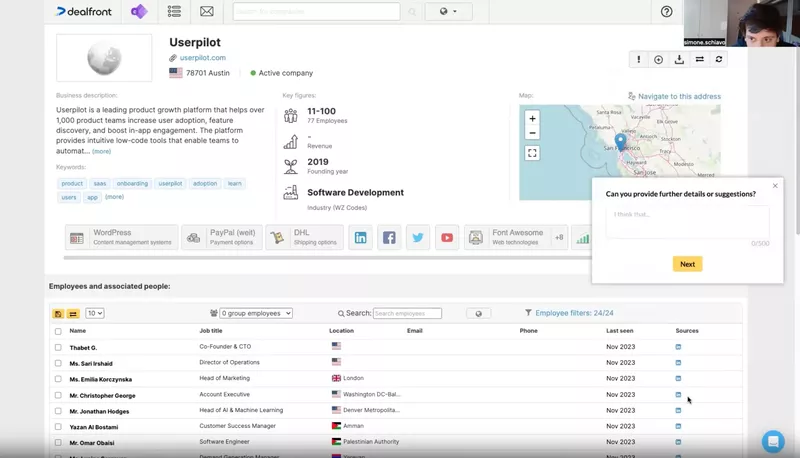

8. Dealfront’s error reporting survey

Dealfront uses an in-app error reporting form to allow users to highlight inaccuracies in company data.

The survey is triggered by a clearly visible button in the top right corner of the screen and lets users correct fields like company name, website, or revenue. Each correction improves data accuracy and, with it, the product’s overall value.

Key takeaways from this example

- Streamlined feedback flow: The form allows users to select specific fields to correct, keeping the process organized and efficient.

- Collaborative user engagement: By empowering users to report errors, Dealfront strengthens its relationship with customers and improves data quality.

- Optional additional feedback: Users can share extra context or suggestions in a free-text field, making the feedback more actionable.

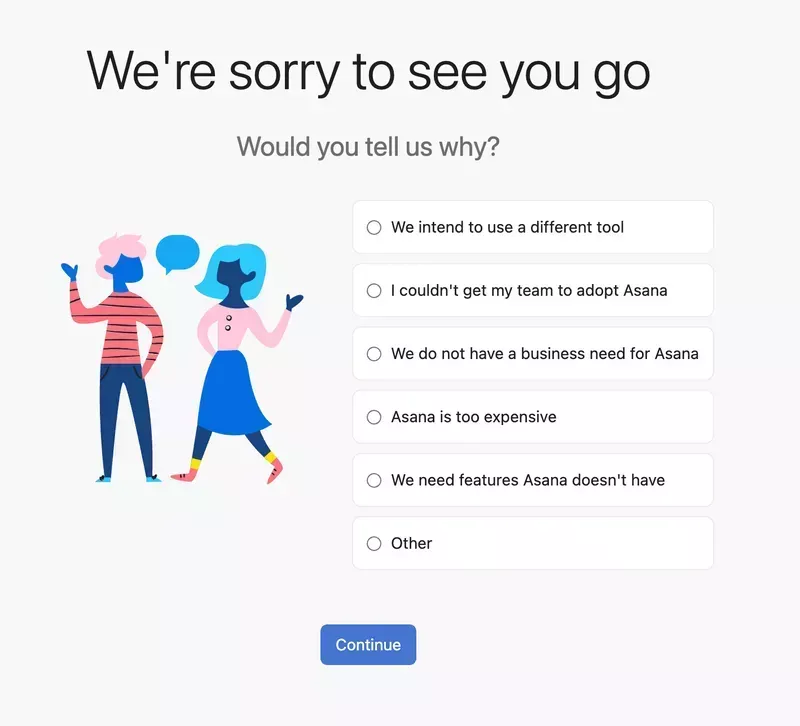

9. Asana’s churn survey

Asana uses a churn survey to understand why users cancel their subscriptions. The survey presents multiple-choice options such as “We intend to use a different tool” or “Asana is too expensive,” along with an “Other” option to capture unique responses.

Understanding the main reasons for customer churn can inform product, pricing, or support improvements. The survey also gives Asana a chance to keep the user from churning, for example, by offering a cheaper plan.

Key takeaways from this example

- Predefined options simplify responses: By offering common reasons for churn, Asana makes it easy for users to share feedback quickly.

- Open-ended option allows deeper insights: The “Other” field gives users space to provide additional context, enabling Asana to uncover reasons the team might not have anticipated.

- Retains a positive tone: Despite the negative context, the survey’s friendly design maintains an approachable feel.

What makes a good customer feedback survey?

Having seen the nine surveys above, you probably have a good idea of what makes a good survey. Let’s go over three key characteristics.

Includes concise and clear questions to gather the insights you need

The main reason why surveys fail to provide the insights you need?

Poorly written questions.

Make sure they are short, clear, and easy for all users to understand. This means avoiding local slang or jargon. Consider translating them for users who aren’t native speakers of your language.

Double-barreled questions like “How satisfied were you with our product and customer service?” are another sin. If the user is satisfied with the product but not the service, there’s no good answer. Break it down into “How satisfied were you with our product?” and “How satisfied were you with our customer service?”

Features a visually appealing and user-friendly design

Let’s face it: How the survey looks is as important as the questions it asks. A cluttered, outdated design can be a proper turn-off, and it will show in your response rates.

Here are a few ideas:

- If there are more than two questions, present them on separate screens.

- Add a progress bar to let respondents know how far they’ve come.

- If you collect customer feedback on a mobile app, make sure the questions are easy to read and answer on smaller screens.

Personalizes the questions for different customer segments to collect targeted feedback

One-size-fits-all surveys don’t work. If you want meaningful feedback, you must tailor your questions to fit different types of customers.

Think about it: A new user will have different insights to share than a power user. A user who has never used a particular feature won’t have anything valuable to say about it. You get the drift.

Start by segmenting your target audience, for instance, by use case, JTBD, customer journey stage, previous survey responses, or in-app behavior.

Next, customize the questions for each group. For example:

- New users: “Why did you choose our product?”

- Loyal customers: “What’s the #1 reason you continue using our product?”

- Churned customers: “What’s the main reason you decided to leave?”
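To make the segment-then-customize idea concrete, here is a minimal Python sketch that assigns a user to one of the three segments above and picks the matching question. The `User` fields and the tenure thresholds (30 and 365 days) are hypothetical; in practice you would segment on whatever in-app data your tool tracks.

```python
from dataclasses import dataclass
from typing import Optional

# Questions from the examples above, keyed by segment.
QUESTIONS = {
    "new": "Why did you choose our product?",
    "loyal": "What's the #1 reason you continue using our product?",
    "churned": "What's the main reason you decided to leave?",
}


@dataclass
class User:
    days_since_signup: int  # hypothetical field
    cancelled: bool         # hypothetical field


def segment(user: User, new_max_days: int = 30, loyal_min_days: int = 365) -> Optional[str]:
    """Assign a segment using hypothetical tenure thresholds."""
    if user.cancelled:
        return "churned"
    if user.days_since_signup <= new_max_days:
        return "new"
    if user.days_since_signup >= loyal_min_days:
        return "loyal"
    return None  # users in between get no survey in this sketch


def question_for(user: User) -> Optional[str]:
    seg = segment(user)
    return QUESTIONS[seg] if seg is not None else None


print(question_for(User(days_since_signup=5, cancelled=False)))
```

Checking cancellation first matters: a long-tenured user who just cancelled should get the churn question, not the loyalty one.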

Ready to create your own surveys? Here’s how to do it

Let’s use what we learned from the nine examples and the best practices above to create your survey. Userpilot helps you turn these survey examples into reality with a library of 14+ pre-built templates. Instead of building from scratch, you can launch proven survey designs in minutes.

1. Decide on the right survey format for your goals

Before you start asking users questions, answer this one: “What’s your goal?” In other words, what kind of feedback do you want to collect? The answer determines your next steps.

For example, if you want to reduce churn, you may want to run a churn survey. A customer satisfaction survey or a product improvement survey can offer valuable insights, too.

Your goal also shapes the format: a full-screen modal (like ClearCalcs) or a subtle slide-out (like Jira). Choosing the right format ensures you capture feedback without disrupting the user flow.

2. Choose pre-designed survey templates to speed up feedback efforts

Once you know what survey you want to create, check whether your feedback tool has a template for it.

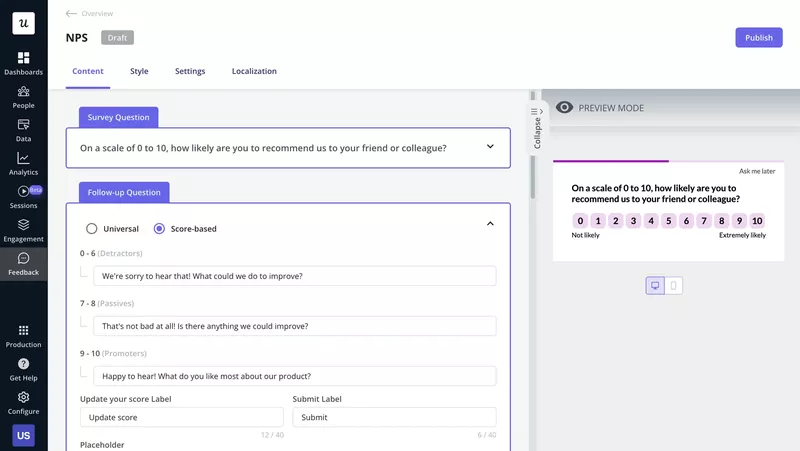

In the Userpilot library, you can find 14 different templates, including industry-standard surveys like NPS, CES, PMF, or CSAT. With these, creating a survey takes just a few minutes.

Of course, a template won’t be perfect, at least not the first time you use it. You may need to tweak its design to give it a more native-app look. With Userpilot’s visual editor, you can do it without writing a line of code.

Once it looks the way you want it, save it so you don’t have to repeat the process every time you run the survey.

3. Write the questions and create a logical flow between them

If you’re using the standard templates, you don’t have to worry about the questions: they come pre-populated.

However, you may still want to add extra questions, such as an open-ended follow-up question, to gather qualitative insights. Not a problem – it takes a few clicks.

What follow-up questions you ask may depend on the user’s answer to the main question. For example, you may ask your NPS detractors, “What could we do to improve your experience?” and your promoters, “What do you like most about our product?”

For this to work, you need a tool with branching logic.
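Branching logic boils down to mapping each answer to the next question. Here is a minimal Python sketch of the NPS example above, using the standard buckets (0–6 detractor, 7–8 passive, 9–10 promoter). The function names are hypothetical, and sending passives no follow-up is an assumption for illustration; tools like Userpilot configure this visually rather than in code.

```python
from typing import Optional

# Follow-ups from the example above; passives get none in this sketch (assumption).
FOLLOW_UPS = {
    "detractor": "What could we do to improve your experience?",
    "promoter": "What do you like most about our product?",
}


def nps_category(score: int) -> str:
    """Standard NPS buckets: 0-6 detractor, 7-8 passive, 9-10 promoter."""
    if not 0 <= score <= 10:
        raise ValueError(f"NPS score must be 0-10, got {score}")
    if score >= 9:
        return "promoter"
    if score >= 7:
        return "passive"
    return "detractor"


def follow_up_question(score: int) -> Optional[str]:
    """Return the branch's follow-up question, or None to end the survey."""
    return FOLLOW_UPS.get(nps_category(score))


print(follow_up_question(3))
```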

4. Trigger the surveys at the right time for the right people

Survey ready? Excellent!

Now, choose who gets it and when.

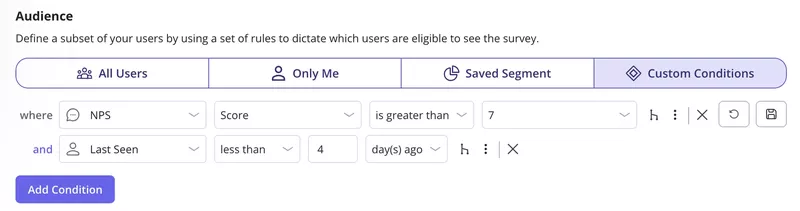

Look at the example above: we’re sending the survey to all NPS passives and promoters who used the app in the last 4 days. That’s how we’re targeting active, satisfied users.
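In Userpilot this audience is set up in the UI, not in code. Purely to make the filter logic explicit, here is a Python sketch of the same rule: an NPS score of 7 or higher and activity within the last 4 days. The `UserRecord` fields are hypothetical.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class UserRecord:
    user_id: str
    last_nps_score: Optional[int]  # None if the user never answered an NPS survey
    last_active: datetime          # timestamp of the user's most recent session


def passives_and_promoters_active_recently(
    users: List[UserRecord],
    now: datetime,
    active_within: timedelta = timedelta(days=4),
) -> List[str]:
    """Return IDs of users who scored 7-10 on NPS and were active within the window."""
    cutoff = now - active_within
    return [
        u.user_id
        for u in users
        if u.last_nps_score is not None
        and u.last_nps_score >= 7    # passives (7-8) and promoters (9-10)
        and u.last_active >= cutoff  # used the app within the window
    ]


now = datetime(2024, 6, 10)
users = [
    UserRecord("a", 9, datetime(2024, 6, 8)),     # promoter, active recently
    UserRecord("b", 8, datetime(2024, 5, 1)),     # passive, but inactive
    UserRecord("c", 4, datetime(2024, 6, 9)),     # detractor
    UserRecord("d", None, datetime(2024, 6, 9)),  # never answered
]
print(passives_and_promoters_active_recently(users, now))  # ['a']
```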

For surveys like NPS, CSAT, or PMF, choose when users should receive the survey and set it to recur every 3–4 months.

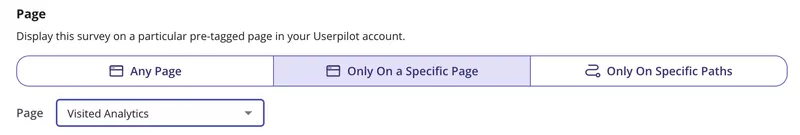

When measuring CES or new feature success, event-based triggering works better: the survey is triggered by a specific user action. In the example above, the survey is shown on a specific page to all users who triggered the “Visited Analytics” event.
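The two triggering modes described above can be sketched as two small predicates: a time-based check for recurring surveys and an event-based check for CES or feature surveys. This is a minimal illustration, not how any particular tool implements triggers. The 90-day interval is an assumed value at the low end of the 3–4 month guideline, and the function names are hypothetical.

```python
from datetime import datetime, timedelta
from typing import Optional

# Assumed cadence: roughly 3 months, the low end of the 3-4 month guideline.
RECURRENCE = timedelta(days=90)


def recurring_survey_due(last_shown: Optional[datetime], now: datetime,
                         every: timedelta = RECURRENCE) -> bool:
    """Time-based trigger (NPS, CSAT, PMF): show again once the interval has passed."""
    return last_shown is None or now - last_shown >= every


def event_survey_due(event_name: str, already_answered: bool,
                     trigger_event: str = "Visited Analytics") -> bool:
    """Event-based trigger (CES, feature success): show right after the matching action."""
    return event_name == trigger_event and not already_answered


print(recurring_survey_due(datetime(2024, 1, 1), datetime(2024, 2, 1)))  # False
print(event_survey_due("Visited Analytics", already_answered=False))      # True
```

The `already_answered` flag keeps an event-based survey from firing every time the user repeats the action.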

5. Analyze survey responses and prioritize them for future actions

Analyzing the surveys is the hardest part, in my opinion. I mean, analyzing quantitative data like CSAT or NPS is pretty easy, especially when your feedback software breaks down all responses and plots the trends in a graph (Userpilot does that).

It’s the open-ended responses that are a pain. But they’re also the most valuable.

Here’s how to go about analyzing them:

- Clean the data, for example, by removing duplicates or blank responses.

- Organize the responses by sentiment.

- Group the responses by the feature or aspect of the customer experience they mention.

- Look for themes and patterns in the responses.

In Userpilot, you can do it by tagging the responses manually.
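If you export the responses, the four steps above can also be approximated in a script. Here is a minimal Python sketch, assuming each open-ended answer comes paired with the respondent’s NPS score. The keyword-to-theme map is a hypothetical, crude stand-in for manual tagging, and using the NPS bucket as sentiment is a simplifying assumption.

```python
from collections import Counter
from typing import Dict, List, Tuple

# Hypothetical keyword-to-theme map standing in for manual tags.
THEMES: Dict[str, Tuple[str, ...]] = {
    "pricing": ("price", "expensive", "cost"),
    "onboarding": ("onboarding", "setup", "tutorial"),
    "reporting": ("report", "dashboard", "analytics"),
}


def sentiment_from_score(score: int) -> str:
    # Crude proxy: use the respondent's NPS bucket as the response's sentiment.
    if score >= 9:
        return "positive"
    if score <= 6:
        return "negative"
    return "neutral"


def analyze(responses: List[Tuple[int, str]]) -> Counter:
    """Count (sentiment, theme) pairs across open-ended NPS follow-up answers."""
    counts: Counter = Counter()
    seen = set()
    for score, text in responses:
        normalized = " ".join(text.split()).lower()
        # Step 1: clean the data by skipping blanks and duplicates.
        if not normalized or normalized in seen:
            continue
        seen.add(normalized)
        # Step 2: organize by sentiment.
        sentiment = sentiment_from_score(score)
        # Step 3: group by the feature or aspect the response mentions.
        themes = [t for t, words in THEMES.items() if any(w in normalized for w in words)]
        for theme in themes or ["untagged"]:
            counts[(sentiment, theme)] += 1
    # Step 4: counts.most_common() surfaces the recurring patterns.
    return counts


data = [
    (3, "Too expensive for a small team"),
    (3, "too   expensive for a small team"),  # duplicate after normalizing
    (10, "Love the dashboard"),
    (8, ""),                                  # blank, dropped
    (5, "Setup took forever"),
]
print(analyze(data).most_common())
```

Keyword matching misses synonyms and sarcasm, which is why reading and tagging the responses yourself is still worth the effort.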

Conclusion

The above survey examples illustrate different use cases for surveys and best practices for administering them.

For your surveys to serve their purpose, they need to be relevant, timely, and clearly worded. Their visual design also matters: avoid cramming too many questions into one screen, as this overwhelms respondents.

If you’d like to learn more about Userpilot’s survey features, book a demo!

FAQ

What makes a survey question effective?

A good survey question is clear and focuses on one idea. It avoids vague wording so people know exactly what they are being asked. When measuring satisfaction, being clear about what is being rated and how it is rated helps get more reliable answers.

What are the different types of survey questions to ask?

Survey questions come in various formats, each suited to specific goals.

Here are the most common types:

- Multiple-choice questions provide a set of predefined options for respondents to choose from. They are quick to answer and easy to analyze. For example: “Which of the following features do you use the most?”

- Likert scale questions measure attitudes or opinions on a scale, often ranging from “Strongly Disagree” to “Strongly Agree.” For example: “On a scale of 1 to 5, how satisfied are you with our product?”

- Rating scale questions use numeric values, such as 0-10, to quantify satisfaction or likelihood. For example: “How likely are you to recommend our product to a friend or colleague?”

- Open-ended questions allow respondents to provide detailed, unstructured answers. These are great for gathering qualitative insights. For example: “What could we do to improve your experience?”

- Alternative choice questions offer two answer options, such as Yes/No or Thumbs Up/Thumbs Down. These are quick and simple to complete. For example: “Did you find what you were looking for today?”

- Matrix questions combine related questions in a grid format to simplify responses. For example: “Rate the following features on a scale of 1 to 5.”

What are some common survey question examples?

Here are 20 examples of survey questions tailored to different purposes:

- How satisfied are you with your recent purchase?

- How likely are you to recommend our service to a friend or colleague?

- What features do you value most in our product?

- How easy was it to complete your transaction with us?

- Did you find what you were looking for on our website today?

- How would you rate the professionalism of our customer service team?

- What was the highlight of the event for you?

- What is your primary occupation?

- Do you feel your contributions are valued at work?

- What words come to mind when you think of our brand?

- Which other brands do you currently use for similar products?

- What features would you like to see added to our product?

- How clear were the instructions during the onboarding process?

- What is the main reason for canceling your subscription?

- Where did you first hear about us?

- Do you feel our pricing is fair compared to competitors?

- How disappointed would you be if you could no longer use our product?

- Which social media platform do you follow us on?

- How would you rate the usefulness of the training session?

- What is the primary reason you continue using our service?