When I first started running product research, I’d spend hours reading interview transcripts without finding clear patterns. I’d get pages of feedback, but turning it into something useful took forever.

Over time, I realized the real challenge was the qualitative data analysis process itself. Manually coding qualitative data with formal thematic techniques slowed me down far more than the discovery work did. And at scale, that process simply breaks.

I needed to move faster without losing the nuance that makes qualitative research valuable. That’s when I started using AI qualitative data analysis tools as a research assistant, and it changed how the work gets done.

In this article, I’ll walk through:

- How I use AI in my research projects.

- Where AI saves me the most time.

- Where human expertise still leads.

Let’s get started!

What AI brings to qualitative data analysis

Qualitative data explains what quantitative research can’t, like people’s motivations, frustrations, and expectations. The problem is volume. Interview transcripts, survey answers, and open-text responses pile up fast. That’s where AI earns its place.

I use large language models to jumpstart the analysis instead of sifting through everything manually. They help me:

- Clean and structure the raw text.

- Spot recurring language.

- Draft early themes.

- Surface sentiment and emotional tone.

- Highlight patterns I should dig into.
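If you’re curious what “spot recurring language” looks like mechanically, here’s a minimal sketch in Python. It’s a plain n-gram counter, not a language model, so it only catches repeated wording, not repeated meaning. The function names and sample responses are hypothetical.

```python
import re
from collections import Counter

def clean(text: str) -> list[str]:
    """Lowercase a response and split it into word tokens, dropping punctuation."""
    return re.findall(r"[a-z']+", text.lower())

def recurring_phrases(responses: list[str], n: int = 2, min_count: int = 2) -> list[tuple[str, int]]:
    """Count n-word phrases across responses and keep those seen in at least min_count responses."""
    counts = Counter()
    for response in responses:
        tokens = clean(response)
        # Use a set so each phrase counts once per response.
        phrases = {" ".join(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}
        counts.update(phrases)
    return [(phrase, c) for phrase, c in counts.most_common() if c >= min_count]

responses = [
    "Setup took forever, the setup wizard is confusing.",
    "I love the dashboards but setup wizard kept crashing.",
    "Export to CSV is slow.",
]
print(recurring_phrases(responses))
```

Counting each phrase once per response keeps a single long answer from inflating how “recurring” a phrase looks.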

And it’s not just anecdotal. In a 2023 study titled Exploring the Use of Artificial Intelligence for Qualitative Data Analysis, Morgan re-analyzed two datasets with ChatGPT and found that it generated accurate, clear, and descriptive themes quickly but missed more interpretive nuance.

The takeaway: AI is useful for triage and structure, but it’s not a substitute for analysis. That’s exactly how I use natural language processing: to speed up the front half of the work so I can spend time on the parts only a human researcher should do.

Here’s how it helps in practice:

1. Cuts down the manual grunt work. Transcriptions, summaries, and first-pass theme grouping get done in minutes instead of hours. That frees me up to interpret, not just process.

2. Gives me a structured starting point. I don’t hand over judgment to the model, but I do use it to generate draft codes and categories. It speeds up the framework-building phase so I can focus on nuance and exceptions.

3. Surfaces tone and broad patterns. AI quickly flags positive, negative, and neutral sentiment and clusters related feedback. That gives me a scoped overview before I dive deeper.
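Here’s a rough sketch of what “draft codes” can look like once you have them. It assumes a hypothetical keyword codebook (the kind an LLM might propose and a researcher then edits) and applies it with simple substring matching. It isn’t how any particular tool codes data; it just makes the structure visible.

```python
# Hypothetical draft codebook: code name -> trigger keywords.
# An LLM might propose a first version; a researcher reviews and edits it.
DRAFT_CODEBOOK = {
    "onboarding_friction": ["setup", "wizard", "confusing", "onboarding"],
    "performance": ["slow", "crash", "lag"],
    "reporting": ["dashboard", "export", "report"],
}

def apply_codes(response: str, codebook: dict[str, list[str]]) -> list[str]:
    """Return every draft code with at least one keyword found in the response (substring match)."""
    text = response.lower()
    return [code for code, keywords in codebook.items() if any(k in text for k in keywords)]

def uncoded(responses: list[str], codebook: dict[str, list[str]]) -> list[str]:
    """Return responses that match no code: the exceptions a human should read first."""
    return [r for r in responses if not apply_codes(r, codebook)]

print(apply_codes("The setup wizard kept crashing", DRAFT_CODEBOOK))
print(uncoded(["Pricing feels high for small teams", "Export is slow"], DRAFT_CODEBOOK))
```

Substring matching also produces false positives (“lag” matches “flagged”), which is one more reason draft codes need human review before they shape any conclusions.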

My integrated AI qualitative data analysis process

I don’t treat AI as a separate step in my research workflow; it’s built into how I move from raw feedback to insight.

Over time, I’ve shaped a process that keeps me in control of interpretation while using AI coding to compress the messier, manual parts of analysis. Here’s how it works:

Collecting comprehensive feedback

Before I ever open an AI tool, I need good raw material. No amount of modeling, qualitative coding, or thematic analysis can fix shallow or poorly scoped feedback, and this is the part AI will never replace.

I start by defining why I’m collecting feedback and who I want to hear from. Am I trying to understand friction in onboarding? Validate a new feature? Surface unmet needs from power users? That intent shapes everything: the timing, the questions, the audience, and the format.

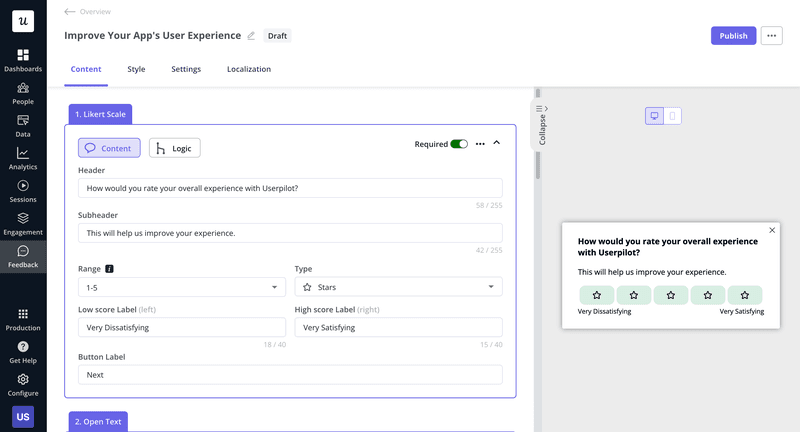

Usually, you’d need dedicated qualitative data analysis software for this. My advantage is that I run this entire process inside Userpilot. Our survey builder helps me:

- Drop a one-question microsurvey on the exact event (completed or abandoned) through an intuitive, no-code interface.

- Target only the segments that matter (plan, feature usage, lifecycle stage).

- Auto-localize using AI so I can deliver surveys in users’ own language.

- Pull responses with rich context (recent actions, device, company) into a single view.
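Userpilot handles targeting through its no-code builder, so you don’t write this yourself. Still, it can help to see the logic behind “target only the segments that matter.” This is a minimal Python sketch with a hypothetical user record and field names; it is not Userpilot’s data model or implementation.

```python
from dataclasses import dataclass, field

@dataclass
class User:
    # Hypothetical user record; field names are illustrative only.
    user_id: str
    plan: str
    lifecycle_stage: str
    used_features: set[str] = field(default_factory=set)

def target_segment(users, plan=None, lifecycle_stage=None, feature=None):
    """Return users that match every filter provided; a filter left as None is ignored."""
    return [
        u for u in users
        if (plan is None or u.plan == plan)
        and (lifecycle_stage is None or u.lifecycle_stage == lifecycle_stage)
        and (feature is None or feature in u.used_features)
    ]

users = [
    User("u1", "pro", "new", {"reports"}),
    User("u2", "free", "new", {"reports"}),
    User("u3", "pro", "active", {"export"}),
]
# Survey only new users on the pro plan.
print([u.user_id for u in target_segment(users, plan="pro", lifecycle_stage="new")])
```

Combining filters with AND keeps each survey aimed at a narrow, intentional audience, which matches the point about choosing who you want to hear from.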

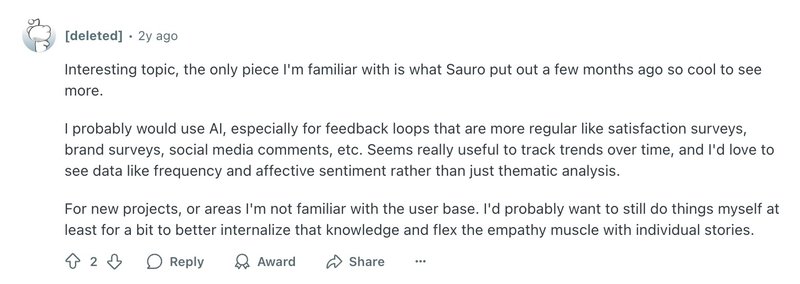

One tension I see echoed in the UX community: people want AI to help with scale and support qualitative data analysis, but they don’t want to lose their connection to real users.

One practitioner put it well: AI is great for recurring feedback loops, but when you’re exploring a new area or an unfamiliar user base, you still need to do the analysis manually to actually build empathy.

That’s why I treat this first step as the non-negotiable human layer. I decide the purpose, I define the audience, and I choose the moments. AI can only elevate insight if the foundation is intentional.

Analyzing and segmenting user insights

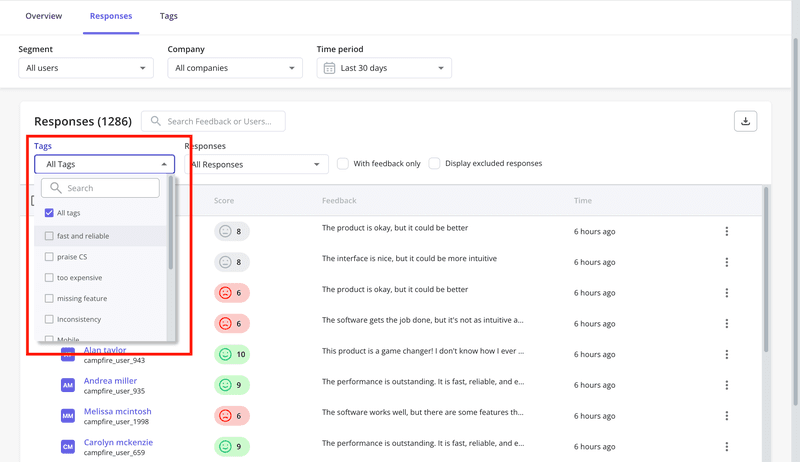

Once I have the responses, AI becomes essential for analyzing them efficiently. With hundreds of responses, AI is great at flagging big themes and recurring phrases.

Until now, Userpilot has helped teams uncover insights from qualitative data through a manual tagging system. Our NPS tags made it easier to organize data and group NPS responses into themes, but performing sentiment analysis, prioritizing issues, or spotting trends still required significant manual effort. That’s about to change.

We’re launching AI-powered survey insights that will summarize responses and flag user sentiment automatically. Instead of reading every response, you’ll see the big picture:

- Which issues are recurring.

- How feedback varies across different segments.
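Once each response carries a segment and a sentiment label, comparing segments is mostly bookkeeping. The sketch below assumes the labels already exist (from an AI pass or manual tagging); the data and function name are hypothetical, and this is not how Userpilot computes its insights. Shares are rounded to two decimals.

```python
from collections import Counter, defaultdict

def sentiment_by_segment(responses):
    """Turn (segment, sentiment) pairs into each segment's share of every sentiment label."""
    counts = defaultdict(Counter)
    for segment, sentiment in responses:
        counts[segment][sentiment] += 1
    shares = {}
    for segment, c in counts.items():
        total = sum(c.values())
        shares[segment] = {label: round(n / total, 2) for label, n in c.items()}
    return shares

labeled = [
    ("free", "negative"), ("free", "negative"), ("free", "positive"),
    ("pro", "positive"), ("pro", "neutral"),
]
print(sentiment_by_segment(labeled))
# {'free': {'negative': 0.67, 'positive': 0.33}, 'pro': {'positive': 0.5, 'neutral': 0.5}}
```

Reporting shares instead of raw counts keeps a large segment from drowning out a small one that is much unhappier.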

Some worry that AI summaries miss important details. I understand the concern. But as long as you use AI for quick summaries you can check, rather than letting it make final decisions, you don’t lose nuance.

Freed from hours of manual inductive and deductive coding, you can spend your time reviewing, validating, and refining strategy.

Crafting targeted in-app experiences

The ultimate goal of qualitative analysis is to improve the product experience.

Once I have a clear understanding of user needs and pain points, I can easily create targeted in-app communication. When creating new onboarding flows, feature announcements, or help content (e.g., modals, tooltips, banners), I turn to Userpilot’s AI assistant.

You can simply type a prompt, like “write a friendly welcome message for new users”, and the AI generates the copy. This saves me hours and enables rapid iteration without sacrificing tone or clarity. I’ll often prompt it to “make it shorter” for a tooltip or “make it more detailed” for an interactive walkthrough. This helps keep product messaging consistent and context-aware.

And this is just the beginning.

Soon, Userpilot will go beyond AI-generated text. You’ll be able to generate entire in-app flows, including messaging, targeting, and triggers, with a single prompt instead of starting from scratch. Combined with AI-powered localization, this will unlock a faster, more scalable way to ship personalized experiences across segments and regions.

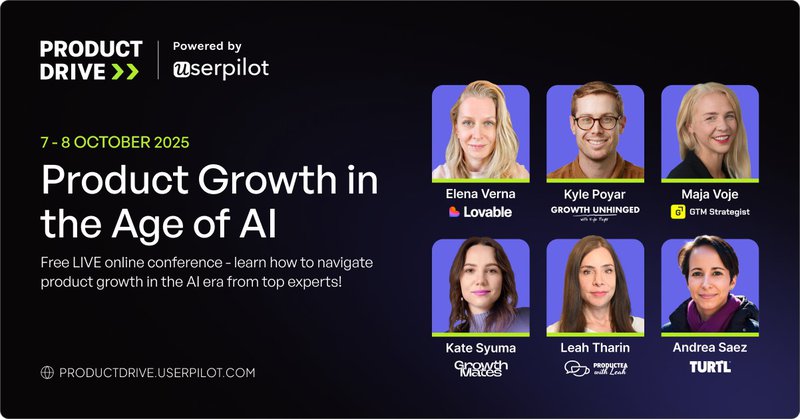

If you want a first look at what’s coming, I highly recommend joining our founder, Yazan, at Product Drive. His session will walk through exactly how this works and why it’s going to change how we think about in-app communication. I’ve seen an early preview, and let’s just say, you won’t want to miss it.

Are there any limitations of AI for qualitative analysis?

Absolutely. Ignoring them risks undermining the entire process.

AI speeds things up, but it comes with real limitations. Beyond hallucinations and a lack of transparency, there’s a steep learning curve to using these tools effectively. In this section, I’ll walk through the core limitations I’ve encountered with natural language processing and AI models.

Hallucinations and fabrication happen

To be honest, generative AI tools can and will confidently make things up. That’s called hallucination.

I’ve seen this firsthand. In one early experiment, I asked ChatGPT to analyze a transcript and identify recurring themes. It returned a list that looked solid on the surface until I asked it to back up the themes with quotes. That’s when it fell apart. Some quotes were loosely paraphrased; others were completely fabricated.

Other qualitative researchers see this too: AI confidently quotes things that don’t exist in your product or market research datasets. This isn’t a bug; it’s a fundamental limitation of how large language models work.

This matters because artificial intelligence’s biggest strength is producing fluent, structured responses at speed. It’s also its biggest risk. The output sounds persuasive even when it’s wrong.

My rule: always verify AI findings against the actual responses. I use AI to move faster, but I still rely on human analysis for verifying quotes and context.
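Part of that verification can be automated. This minimal sketch checks whether each quote an AI attributes to users appears verbatim in the source transcript, after normalizing case, whitespace, and curly quotes. The function names and sample data are hypothetical.

```python
import re

def normalize(text: str) -> str:
    """Lowercase, straighten curly quotes, and collapse whitespace so formatting alone can't cause a mismatch."""
    text = text.lower().replace("\u2019", "'").replace("\u201c", '"').replace("\u201d", '"')
    return re.sub(r"\s+", " ", text).strip()

def verify_quotes(quotes: list[str], transcript: str) -> dict[str, bool]:
    """Map each AI-supplied quote to True only if it appears word for word in the transcript."""
    source = normalize(transcript)
    return {q: normalize(q) in source for q in quotes}

transcript = """I tried the import twice.
Honestly, the import  kept timing out on large files."""
ai_quotes = [
    "the import kept timing out on large files",
    "I would pay more for faster imports",
]
for quote, found in verify_quotes(ai_quotes, transcript).items():
    status = "found" if found else "NOT FOUND"
    print(f"{status}: {quote}")
```

Exact matching flags fabricated quotes and loose paraphrases alike, which is what you want before a quote goes into a readout. Anything marked “NOT FOUND” goes back to a human to check against the raw responses.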

Transparency and confidence are missing

One thing that’s always bothered me about AI qualitative data analysis is how opaque the process can feel. Data security and privacy are a related concern when uploading sensitive feedback to AI platforms.

When an AI model gives me a theme or summary, I have no idea how confident it is or which specific inputs it used to reach that conclusion. While some APIs return confidence scores or show multiple possible interpretations, most AI tools built on OpenAI’s models give you a single answer with no explanation. That’s a problem, especially for complex tasks like discourse analysis or coding qualitative data.
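One workaround I find useful to reason about is checking consistency: ask the same question several times and see which themes keep coming back. The sketch below assumes you already have the theme lists from repeated runs (hard-coded here as hypothetical output) and scores each theme by the share of runs it appears in. This is a rough stability signal, not a calibrated confidence score, and a theme that shows up every time can still be wrong.

```python
from collections import Counter

def theme_agreement(runs: list[list[str]]) -> dict[str, float]:
    """Return the share of runs in which each theme appears, most stable themes first."""
    # dict.fromkeys dedupes within a run while keeping first-seen order.
    counts = Counter(theme for run in runs for theme in dict.fromkeys(run))
    return {theme: n / len(runs) for theme, n in counts.most_common()}

# Hypothetical themes from asking a model the same question three times.
runs = [
    ["onboarding friction", "slow exports", "pricing confusion"],
    ["onboarding friction", "slow exports"],
    ["onboarding friction", "missing integrations"],
]
for theme, share in theme_agreement(runs).items():
    print(f"{share:.0%}  {theme}")
```

Themes that appear in only one run are the first candidates for a manual check against the raw responses.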

It’s not just me. In one Reddit thread, a qualitative researcher nailed the issue: “Designers need the algorithm’s decisions to be more transparent regarding confidence and reasoning.” I’ve felt that transparency gap too, especially when AI-generated summaries sound polished but give me no signal about how reliable they are.

A study published at CHI found that a lack of transparency in AI systems directly impacts user trust, particularly when users rely on those systems for open-ended, interpretive work like qualitative research.

So I treat AI-assisted analysis outputs as draft inputs. They’re starting points I interrogate, not truths I blindly accept. If I don’t know why the AI gave me a specific summary, I won’t rely on it to make product calls.

Userpilot’s AI Insights addresses this by showing its work. When it flags an anomaly or suggests a segment, you can click through to see the underlying data, the correlation strength, and which user behaviors triggered the insight.

Loss of immersion and learning

If there’s one part of the qualitative process I never outsource to AI, it’s the deep dive.

There’s no substitute for going through raw feedback yourself. That’s how I pick up on what users actually mean, not just what they say. Skimming summaries doesn’t teach me how they think. But reading their full responses, tracing their phrasing, seeing the context behind their frustration? That’s where the actual insight lives.

This isn’t just a personal take; other qualitative researchers agree. In one Reddit thread, someone summed it up clearly: “Diving into the data yourself is how you familiarize yourself with the user group, the whole purpose of the task.” They compared it to learning a new language: AI tools might help with translation, but they can’t do the learning for you.

Another commenter described this as “front-loading the data”: the act of sitting with responses and making connections in your head. AI might speed up output, but it skips that internal process entirely. You don’t build empathy from bullet points.

So while I’ll use AI for repetitive feedback loops, like summarizing NPS comments, I tailor my approach to every project, even when I’m exploring the same project from another angle or conducting further research.

When I’m working with a user group I don’t know well, I want to dig into the textual data myself. That’s how I internalize their reality, build intuition, and develop a deeper understanding.

Drive better results with hybrid AI-human analysis!

AI speeds up the process, not the strategy. That’s still your job.

AI finds patterns and summarizes feedback, but it can’t replace your judgment. Understanding nuance, asking the right follow-up questions, and making strategic decisions still require human insight. Pairing AI with advanced analytical techniques allows teams to dig deeper into qualitative insights than manual methods alone.

Want a front-row seat to how top teams are navigating product growth in the AI era? Join us at Product Drive to hear from product leaders like Elena Verna, Kyle Poyar, Andrea Saez, and more as they unpack real strategies and share what’s next in product.

Book a demo with Userpilot today to see how AI can scale your qualitative analysis while keeping you in the driver’s seat.