AI UX Design: How to Build Smarter, More Intuitive SaaS Products

UX used to mean static interfaces and interactions that rarely changed once shipped. But AI UX design is changing how teams approach both the process of creating experiences and the products themselves.

You can now use AI to generate wireframes, test accessibility early in your design process, or even design adaptive interfaces that adjust based on user behavior.

In this article, I’ll share the best practices I’ve learned from working with AI tools and designing AI-powered interfaces. I’ll also cover the most useful tools available today and a few near-term trends I believe every SaaS designer should start preparing for.

What is AI UX design?

AI UX design involves using artificial intelligence tools and best practices to enhance the UX design process and/or create smarter, AI-powered product experiences.

Let’s look at both use cases:

AI as a tool in the UX process

According to Maze’s 2025 report, adoption of AI in product work jumped 14 points year over year, with 58% of product professionals using AI in 2025 compared to 44% in 2024.

So, where is AI finding the most traction for UX teams?

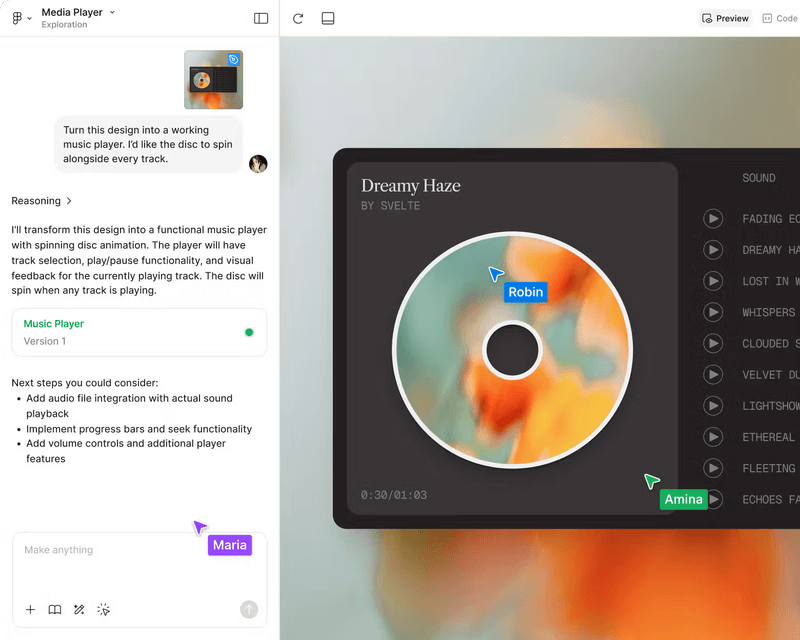

- Rapid wireframing: In the past, designers needed to spend hours sketching ideas on paper or pushing pixels in Figma just to get to a first draft. But now, tools like Uizard and Stitch can generate low-fidelity prototypes and even spark new design ideas from a simple text prompt.

- User journey mapping: Teams can use AI plugins like FigJam’s AI assistant to generate user journeys, analyze flows, highlight likely drop-off spots, and even suggest alternative paths.

- AI-generated UX copy: UXTools’ 2024 research shows that 75% of AI usage among design teams is focused on text-based tasks. That’s not surprising since so much of UX work depends on the words users see. Tools like ChatGPT, Writer, and Jasper are now integral to everyday workflows, enabling teams to generate interface copy, onboarding flows, or microcopy for error states in seconds.

- Accessibility testing: AI can flag color contrast issues, missing alt text, or complex sentence structures that make interfaces less inclusive. Catching these issues early can mean the difference between a smooth rollout and a painful round of redesigns.

- Personalized user testing scripts and surveys: Instead of writing the same usability test prompts by hand, teams can use AI to tailor scripts to target personas and scenarios, which makes them more relevant.
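To make the accessibility bullet concrete: color contrast is one of the few checks in that list that reduces to a fixed formula, so it is worth knowing what an automated checker computes. Below is a minimal sketch of the WCAG 2.x contrast-ratio calculation. The function names are my own for illustration, and the thresholds are the WCAG AA values (4.5:1 for normal text, 3:1 for large text). This is not how any particular AI tool works internally.

```python
def _linearize(channel_8bit: int) -> float:
    """Convert one 8-bit sRGB channel to its linear value (WCAG 2.x definition)."""
    c = channel_8bit / 255
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color: str) -> float:
    """Relative luminance of a color given as '#RRGGBB'."""
    h = hex_color.lstrip("#")
    r, g, b = (int(h[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground: str, background: str) -> float:
    """Contrast ratio between two colors, from 1.0 (identical) to 21.0 (black on white)."""
    lighter, darker = sorted(
        (relative_luminance(foreground), relative_luminance(background)),
        reverse=True,
    )
    return (lighter + 0.05) / (darker + 0.05)


def passes_aa(foreground: str, background: str, large_text: bool = False) -> bool:
    """Check the WCAG AA threshold: 4.5:1 for normal text, 3:1 for large text."""
    return contrast_ratio(foreground, background) >= (3.0 if large_text else 4.5)


print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
print(passes_aa("#777777", "#FFFFFF"))
```

A check like this is cheap enough to run on every design token or component state, which is what makes early flagging practical.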

AI as part of the product experience

76% of the companies that SaaS Capital interviewed for its 2025 report said they’ve integrated AI into their customer-facing products. In another report, 83% of product leaders expressed concern that their competitors’ AI strategies would outpace their own.

The bottom line? SaaS companies are embracing AI to improve the end users’ experience and drive better engagement. Here are the most common use cases I found:

- Interfaces for AI tools: Companies are developing AI-powered chatbots, recommendation engines, and copilots to help users find answers more quickly, discover relevant content, and take action without requiring human support.

- New interaction patterns: AI is transforming how users interact with products, shifting from clicks and taps to natural language, predictive UIs, and even multimodal inputs.

- Trust, explainability, feedback loops: Many SaaS products are starting to add context cues to explain AI decisions. For example, rather than just serving a recommendation in a resource center, the product might display a note like, “We suggested this article because you recently searched for onboarding analytics.” These small touches help users feel more confident in the system’s output.

- Error states, hallucinations, and recoverability: AI systems don’t always get it right, so leading products are building in recovery paths. This often takes the form of retry buttons in in-app assistants, or editable AI-generated drafts that prevent users from getting stuck with a result that doesn’t fit.

My best practices for designing AI-powered UX

After working with AI in real-world product contexts, I’ve found that the most effective approach is to treat it as a creative partner. It can accelerate ideation and testing within the design process, but should never replace the human element at the core of good UX.

Here are six practices I lean on to keep that balance:

1. Scope what the AI can and can’t do

One of the biggest mistakes I see with AI products is the lack of boundaries. If users don’t know what the system is capable of, they’ll expect too much and lose trust when it inevitably falls short.

As a designer, it’s your job to make those boundaries clear. For example, if your tool is built to summarize support tickets, show that upfront rather than letting users assume it can handle any kind of content.

It also helps to add disclaimers like “This tool may make mistakes” or prompts such as “Try asking me about X, not Y.” These small cues will guide user behavior and set realistic expectations without undermining the product’s value.

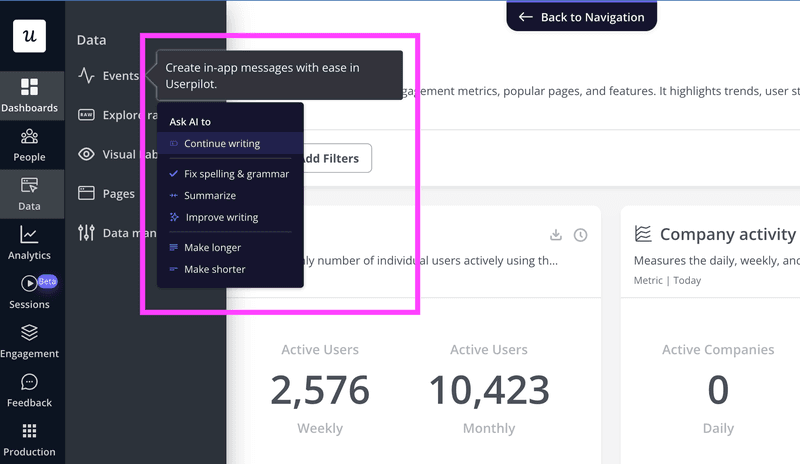

💡Userpilot tip:

Always reinforce boundaries at the microcopy level. For example, our AI writing assistant is limited to in-app messages, and the interface highlights this upfront.

2. Design for error states

As mentioned earlier, AI systems won’t always deliver a user’s intended outcome. So, when designing, think beyond the happy path and map out failure modes just as carefully.

That means asking questions like: What happens if the AI can’t return a result? How do we signal uncertainty without confusing the user? Where should a fallback path exist? What happens if a user misuses the tool?

Visily is a good example here. Its AI output canvas is so large that users can easily scroll into a blank area and mistakenly think the system has failed. To prevent that moment of doubt, the platform inserts a clear “Back to content” button that instantly returns you to your generated designs.
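One way to force those failure-mode questions into the design is to map every possible AI result to an explicit UI state before any screens are drawn. The sketch below is hypothetical throughout: the `AIResult` and `UIState` types, the action names, and the 0.6 confidence cutoff are assumptions for illustration, not part of any real product or library.

```python
from dataclasses import dataclass
from typing import List, Optional

LOW_CONFIDENCE = 0.6  # arbitrary cutoff chosen for this sketch


@dataclass
class AIResult:
    text: Optional[str]
    confidence: Optional[float] = None  # 0.0 to 1.0, if the system reports one
    error: Optional[str] = None


@dataclass
class UIState:
    kind: str           # "ok", "uncertain", "empty", or "error"
    message: str        # what the user sees
    actions: List[str]  # recovery paths offered next to the message


def to_ui_state(result: AIResult) -> UIState:
    """Map an AI result to a UI state so no outcome leaves the user stuck."""
    if result.error is not None:
        return UIState("error", "Something went wrong on our side.",
                       ["retry", "contact_support"])
    if not result.text or not result.text.strip():
        return UIState("empty", "I couldn't find an answer for that.",
                       ["rephrase", "browse_help_center"])
    if result.confidence is not None and result.confidence < LOW_CONFIDENCE:
        return UIState("uncertain",
                       "This answer may be incomplete. Please double-check it.",
                       ["edit", "retry"])
    return UIState("ok", result.text, ["edit", "copy"])


print(to_ui_state(AIResult(text="", confidence=0.9)).kind)
```

The point of the exercise is that every branch returns at least one action, so there is always a fallback path to design for.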

3. Build feedback loops

Feedback loops serve two purposes at once: they notify users that the system received their input, and they help the AI improve over time.

There are many ways to do this in an AI interface, but to keep things simple and engaging, I aim for at least one of these three:

- Lightweight loops: Display quick signals that indicate the tool has received and is processing the user’s input. Once the output is ready, offer simple ways to react, like a thumbs up/down, a short “Was this helpful?” prompt, or a “Show another” button.

- Editable output: Let users tweak what the AI produced instead of starting over. For text-based systems, offer inline editing with quick options like shorter, friendlier, or add a CTA. If it’s a voice interface, allow follow-ups such as “make it more formal” or “summarize in three points.”

- Progressive refinement: Instead of forcing users to accept or reject a single output, allow them to refine results step by step. For example, after generating a mockup, the system could offer options like “try another layout” or “swap imagery.” For copy, it might suggest “make it shorter” or “adjust the tone.”

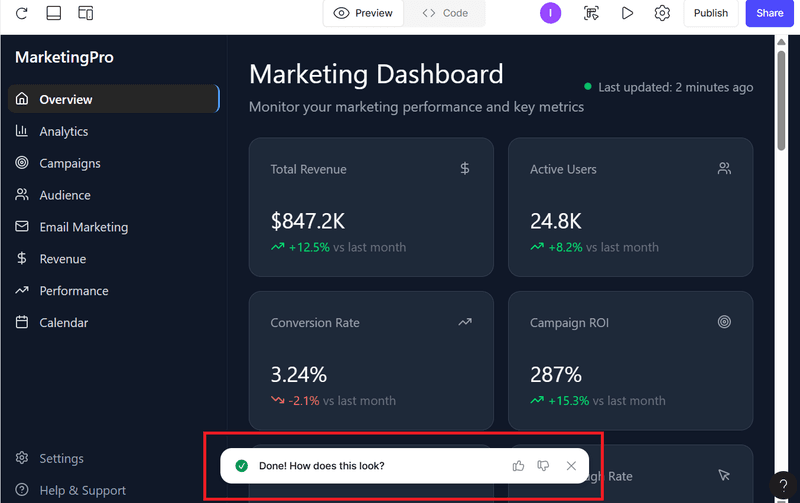

For example, Figma’s AI tool prompts users with a “How does this look?” message and lets them respond with a thumbs up or down. If they dislike the result, the AI makes it easy to regenerate or edit parts of the output.

4. Explain the “why” behind AI

Have you ever used an AI-powered tool and gotten an output that left you wondering, “Why did it give me this?” or worse, “Can I even trust this?”

No matter how intuitive an AI tool is, users still appreciate some level of transparency because it reassures them that the system isn’t just guessing.

This doesn’t mean overwhelming people with technical detail. Instead, give them simple cues that explain the logic. For instance, if an AI generates a dashboard insight, it could say, “Based on the last 30 days of usage data.”

Other trust markers I’ve seen work well in SaaS products include confidence scores, source labels, and brief context notes like “derived from survey responses” or “pulled from your analytics settings.” These small details give users the context they need to believe the output and keep trusting the system over time.
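These trust markers are easier to ship consistently when the explanation travels with the AI output as structured data rather than being hand-written per screen. Here is a minimal sketch, assuming a hypothetical `Explanation` record with a source label, an optional time window, and an optional confidence score. The field names and the High/Medium/Low cutoffs are illustrative choices only.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Explanation:
    source: str                         # e.g. "usage data", "survey responses"
    window_days: Optional[int] = None   # time range the output draws on
    confidence: Optional[float] = None  # 0.0 to 1.0, if available


def context_note(explanation: Explanation) -> str:
    """Build a short, plain-language note to show next to an AI output."""
    note = f"Based on {explanation.source}"
    if explanation.window_days is not None:
        note += f" from the last {explanation.window_days} days"
    note += "."
    if explanation.confidence is not None:
        # Show a coarse label instead of a raw number (cutoffs are arbitrary).
        if explanation.confidence >= 0.8:
            label = "High"
        elif explanation.confidence >= 0.5:
            label = "Medium"
        else:
            label = "Low"
        note += f" {label} confidence."
    return note


print(context_note(Explanation("usage data", window_days=30, confidence=0.9)))
# prints: Based on usage data from the last 30 days. High confidence.
```

Whether to show a coarse label or the raw score is a design decision worth testing with your own users.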

5. Empower users: Preferences, digests, and opt-ins

AI features are powerful, but they can quickly become overwhelming if users feel they have no control. The best safeguard is to design choice into the experience.

For example, this could mean giving users the option to decide how and when they receive notifications, offering digests instead of constant pings, or allowing them to choose between channels like email, Slack, or in-app alerts. Even simple toggles or opt-in flows can transform the experience from “AI is interrupting me” to “AI is working for me.”

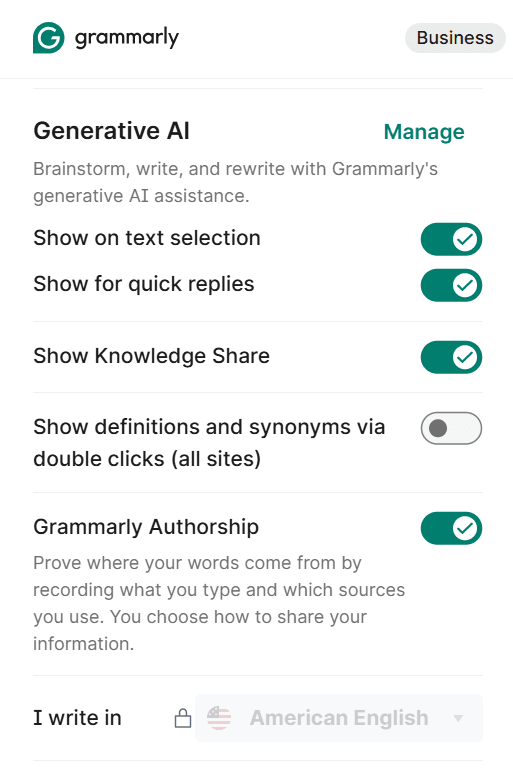

Grammarly does this well. Its generative AI features improve text as you type, but the options are flexible. From the Chrome extension, you can toggle features like quick replies, knowledge sharing, or text selection on or off, and keep only the ones that fit your workflow.
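The notification example from this section can be sketched as a small preferences model. Everything here is hypothetical (the `NotificationPrefs` fields, the channel names, and the `plan_delivery` helper); the idea is simply that opt-in, channel, and digest settings are explicit data that the delivery logic has to respect.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class NotificationPrefs:
    ai_suggestions_enabled: bool = False  # opt-in: off until the user turns it on
    channel: str = "in_app"               # "email", "slack", or "in_app"
    digest: bool = True                   # batch alerts instead of constant pings


def plan_delivery(prefs: NotificationPrefs, alerts: List[str]) -> List[Dict[str, object]]:
    """Decide which messages to send for a batch of pending AI alerts."""
    if not prefs.ai_suggestions_enabled or not alerts:
        return []  # the user has not opted in, or there is nothing to send
    if prefs.digest:
        # One message that bundles every pending alert.
        return [{"channel": prefs.channel, "items": list(alerts)}]
    # One message per alert.
    return [{"channel": prefs.channel, "items": [alert]} for alert in alerts]


pending = ["New churn insight", "Survey summary ready"]
print(len(plan_delivery(NotificationPrefs(), pending)))
print(len(plan_delivery(NotificationPrefs(ai_suggestions_enabled=True), pending)))
```

Defaulting `ai_suggestions_enabled` to off and `digest` to on is one possible choice; the right defaults depend on how often your AI features actually produce something worth interrupting for.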

6. Design for inclusion

Another important point is recognizing that experience personalization doesn’t automatically equal inclusivity. A recommendation system that works brilliantly for one audience can unintentionally alienate another if the logic isn’t transparent or if the defaults are too narrow.

How do you design for inclusivity? I’ve found that it boils down to two key elements: clarity and choice. Users should be able to see how their experience is shaped, opt out of personalization if they want to, and interact in a language or format that feels natural to them.

That is why I always emphasize building with localization and plain-language defaults in mind, rather than relying only on machine translation. It may seem like a small shift, but it’s often the difference between a product that works for some people and a product that works for everyone.

💡Userpilot tip:

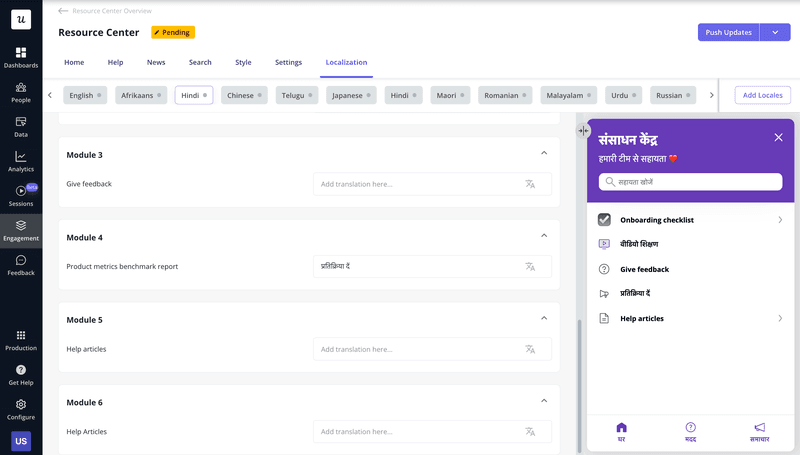

Scaling to global audiences requires tools that reduce the effort for product teams while maintaining a clear and inclusive user experience. Userpilot’s AI-powered localization feature supports this by making it simple to adapt in-app experiences across multiple languages without sacrificing clarity.

4 AI tools to try for UX design

A growing number of UX tools and UI generation platforms now make it easier to integrate AI into everyday design work. Here are four worth exploring:

1. Figma AI

Best for: Speeding up small design tasks directly inside Figma.

Pricing: Included in Figma’s free plan with limited AI credits. Paid plans start at $20/seat per month.

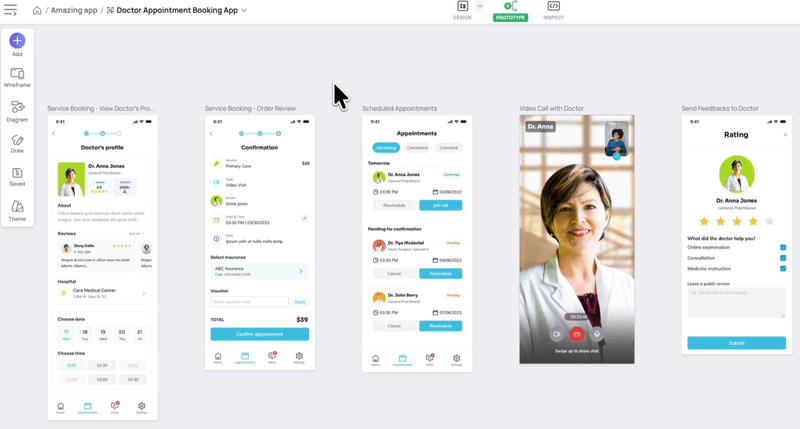

2. Uizard

Best for: Turning text prompts or sketches into wireframes and mockups, then refining them into high-fidelity designs with minimal effort.

Pricing: Free tier available, with paid plans starting at $12 per month.

Generating wireframes in Uizard.

3. Visily

Best for: Collaborative design with AI-powered templates and instant design adjustments.

Pricing: Free to start. Paid plans start at $14/editor per month.

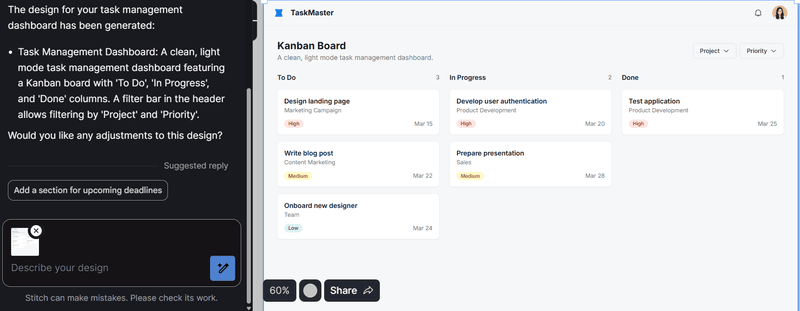

4. Stitch

Best for: Creating polished UI designs directly from natural language descriptions.

Pricing: Currently in beta and free for all users.

These four tools show how AI is reshaping the way teams design today. Userpilot is built on the same principle of making advanced capabilities accessible without disrupting existing workflows.

From automated localization to survey analytics and AI-powered content generation, our platform continues to evolve with SaaS needs and provide AI functionality directly inside the product without requiring teams to leave their workspace.

The future of AI UX design

AI and machine learning open up many possibilities for UX professionals, but three shifts stand out to me:

1. Emotionally responsive UX

Early AI systems are beginning to interpret tone, sentiment, and even biometric signals.

A 2024 Nature Electronics study showed that an AI-assisted “electronic skin” could classify stressors with 98% accuracy and quantify everyday psychological stress responses with up to 98.7% confidence. This type of technology could reach mainstream products within the next few years, giving designers the ability to create interfaces that respond dynamically to user emotion.

I see a challenge on the horizon, though. With something this powerful, product teams must take intentional steps to ensure responsible use. Without proper checks, emotionally aware systems can drift into manipulative personalization or intrusive emotion tracking.

2. AI-led experimentation and testing

Traditional design testing has been a slow and manual process. UX professionals set up variants, wait for enough data, and then analyze results, often weeks later.

AI will soon improve this workflow by automatically suggesting tests, running them in real time, and optimizing experiences based on the results.

Userpilot is building a Product Growth Agent to make this a reality inside SaaS products. The Growth Agent is designed to serve as an on-demand research partner for product teams. Once launched, it will be able to do things like:

- Proactively highlight opportunities for optimization rather than waiting for teams to ask.

- Provide objective data to support or challenge product and UX decisions.

- Forecast the potential impact of design changes before rollout.

- Track the ROI of product initiatives automatically so teams know what is working and what is not.

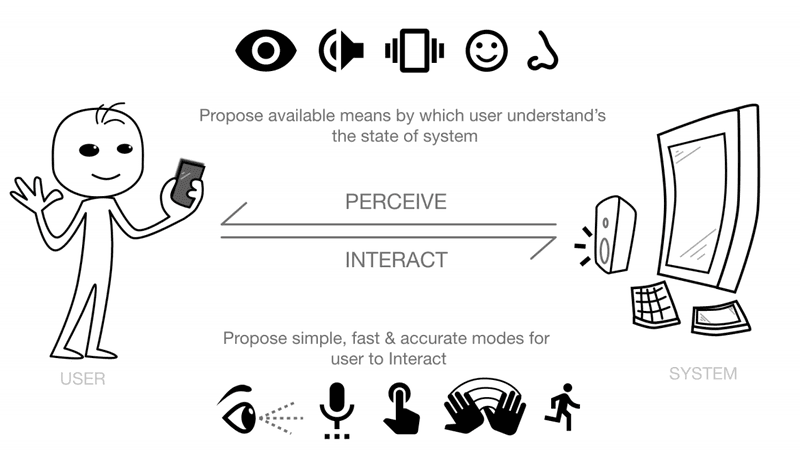

3. Voice-first and multimodal UX

Another trend I see gaining momentum is the shift toward voice, gesture, and image input.

These multimodal interactions are quickly moving toward the mainstream, and users will expect to switch naturally between modes. For example, someone might ask a voice assistant for insights, use gestures to explore a 3D dashboard, and then type a quick edit to fine-tune the result.

This illustration perfectly captures the idea:

The main challenge is maintaining consistency when users switch modes mid-task. A voice query that flows into a visual dashboard should feel seamless, not like moving between entirely separate tools. Any friction at these handoff points risks breaking user trust and flow.

Accessibility is just as critical. Multimodal design should not assume every user has the same abilities or preferences. When adopting this technology, add features such as captions for voice output, tactile feedback for gestures, and visual confirmations for image inputs.

Make AI work for your users, not against them

AI is shaping how users interact with products and how teams make decisions. But as powerful as it is, artificial intelligence is not a replacement for thoughtful design. The real challenge, and the real opportunity, is to make AI serve people in ways that are transparent, inclusive, and grounded in proven UX concepts.

A big part of achieving this is understanding your users and continually refining your UX design process based on their behaviors. Userpilot can help here. Our platform equips you to automatically track user interactions, collect feedback at key journey stages, and deploy experience improvements code-free. Book a demo to begin today!

FAQ

Can AI replace UX designers?

No. AI can speed up the UX process by handling tasks like content generation or wireframing, but it can’t replace the human creativity and strategic thinking that make UX design effective.

What software do most UX designers use?

Most UX designers rely on tools like Figma, Sketch, and Adobe XD for design, alongside user research and collaboration tools such as Maze, FigJam, and Miro.

Designers also explore other AI tools that specialize in tasks such as automated wireframing, accessibility checks, and usability testing.

What is the difference between UI and UX?

UI (User Interface) focuses on the look and feel of a product, while UX (User Experience) covers the end-to-end journey, usability, accessibility, and emotional impact of the product. Put simply, UI is what users see, while UX is how users feel when using it.