![15 Best Customer Satisfaction Survey Examples [+Questions to Copy] cover](https://blog-static.userpilot.com/blog/wp-content/uploads/2023/11/15-best-customer-satisfaction-survey-examples-plus-questions-to-copy_9aafbca1c130a9ad6b92b61c0a7dd90b_2000-450x295.png)

If you’ve sent customer satisfaction surveys but struggled to get meaningful responses, you’re not alone.

Generic or poorly designed surveys often fail to capture your customers’ interest, leaving you with little to no actionable insights.

When done right, these surveys will provide you with the data needed to delight your users, reduce churn, and build long-term loyalty.

In this guide, I’ve handpicked 15 customer satisfaction survey examples from top companies to help you create your surveys more effectively.

You’ll also learn the fundamentals of survey design, explore different types of surveys, and discover best practices to help you collect actionable feedback.

15 customer satisfaction survey examples from leading companies

Let’s take a look at customer satisfaction survey examples from leading companies in action. For each one, I explain how the survey is implemented and highlight what makes it effective.

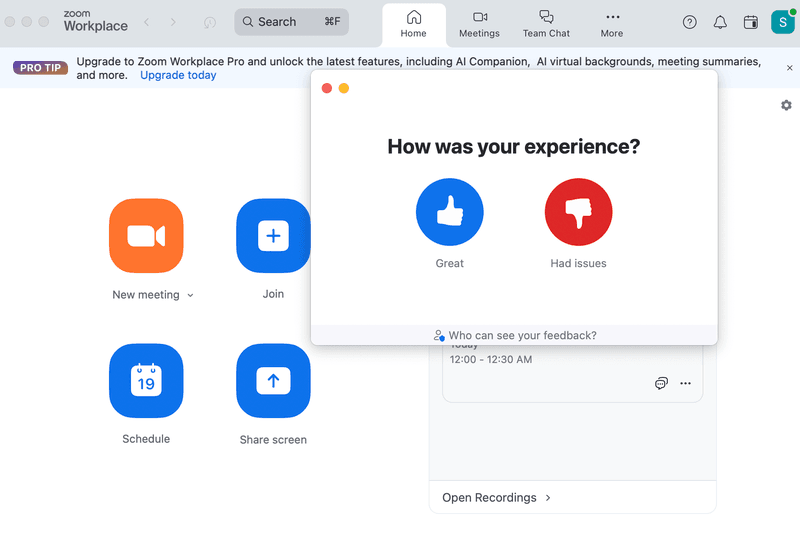

1. Zoom’s simple customer experience survey

Zoom’s customer experience survey is an excellent example of user-centered design. After a meeting, users are presented with a simple question: “How was your experience?”

Instead of overwhelming the user with multiple options, it keeps things minimal with just two choices—a thumbs-up for a great experience and a thumbs-down for issues.

This binary feedback method allows users to respond quickly without much thought or effort. The simplicity makes it more likely that users will engage with the survey.

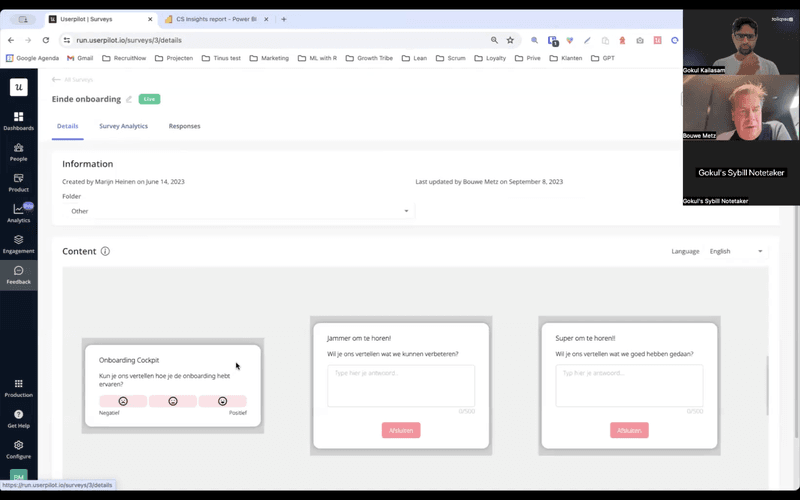

2. RecruitNow’s onboarding satisfaction survey

RecruitNow triggers a simple satisfaction survey, asking users to rate their onboarding experience at the end of the flow. This well-timed survey captures feedback while the experience is still fresh, helping RecruitNow assess the effectiveness of their onboarding and make improvements where needed.

In addition to onboarding surveys, RecruitNow conducts customer satisfaction (CSAT) surveys every six months using Userpilot. These in-app surveys allow them to collect valuable insights into user satisfaction, identify opportunities for product improvements, and enhance the overall experience.

Though the six-month survey cadence provides useful insights, I believe more frequent surveys (quarterly, for example) could help them spot trends and act on opportunities faster.

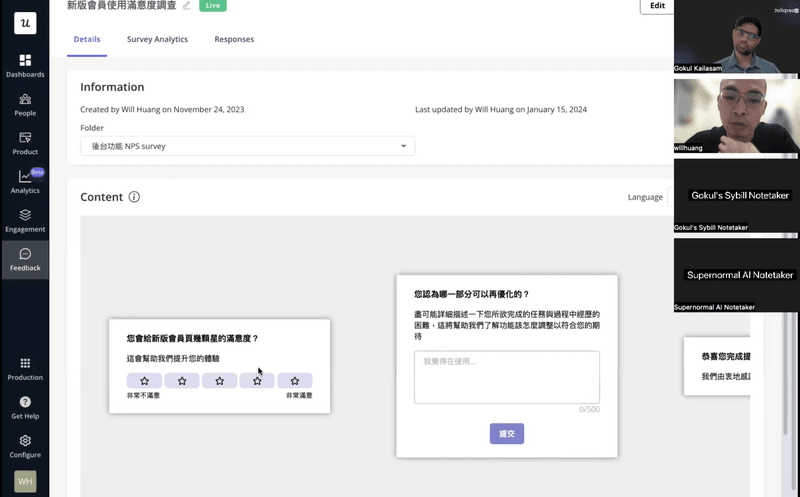

3. CYBERBIZ’s product redesign satisfaction survey

CYBERBIZ uses Userpilot’s in-app surveys to collect customer feedback and measure the success of their product redesign.

To gather a comprehensive view, CYBERBIZ used both an ordinal scale and an open-ended survey question. The latter encouraged users to share detailed feedback in their own words, providing deeper insights into their experiences and uncovering specific areas for improvement.

The results significantly improved feature adoption, reduced support tickets, and enabled more informed decisions based on unified customer feedback. This approach highlights how contextually triggered surveys can drive impactful changes and enhance user satisfaction.

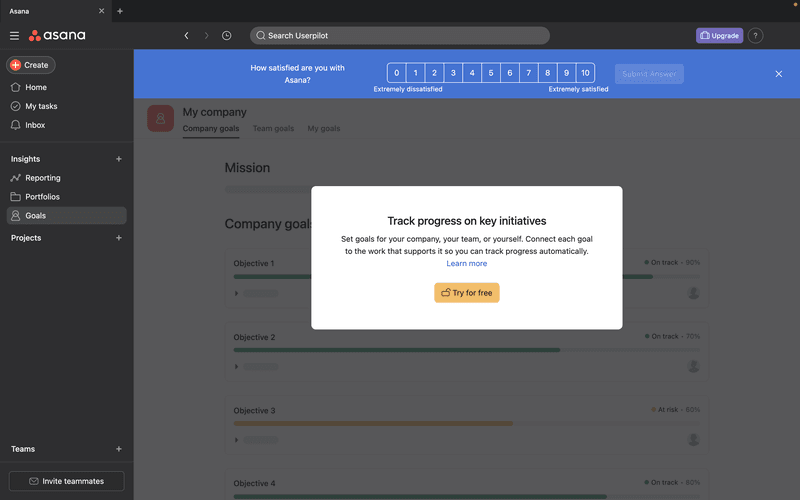

4. Asana’s non-intrusive survey

Asana triggers an in-app survey to gauge customer satisfaction. Instead of using a modal that disrupts the user’s flow, they opt for a sleek notification banner.

The banner is strategically placed at the top of the page, ensuring it doesn’t interfere with the user’s workflow. The subtle design is neither overwhelming nor confusing, and it gets straight to the question.

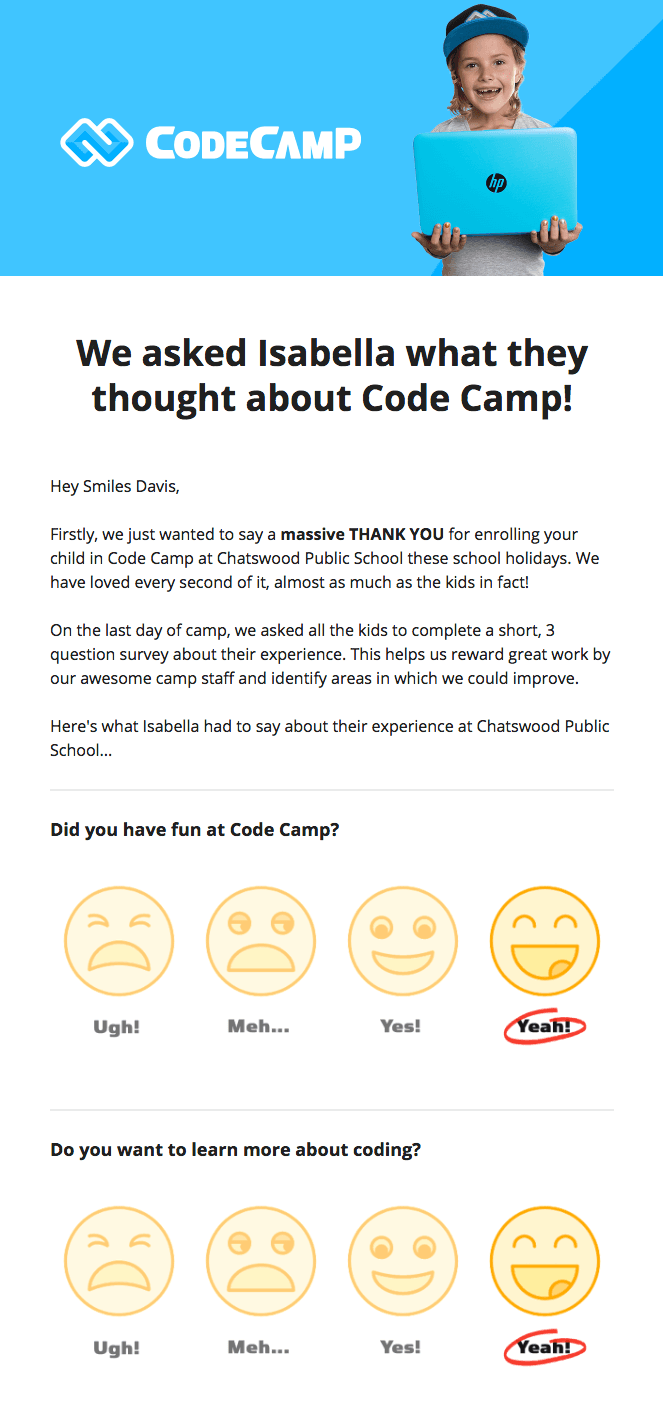

5. Code Camp’s emoji-based survey

Code Camp takes a unique approach by sending parents a personalized email that shows the CSAT survey results filled in by their child. The email addresses the user by name and clearly explains the purpose of the survey.

The survey itself is simple and engaging, using a series of emojis to represent answers. These are color-coded in yellow, which stands out against the white background.

6. Userpilot’s general product feedback survey

Userpilot’s general product feedback survey is designed to gather customer satisfaction insights.

It uses a simple question, paired with emojis, to make the survey intuitive and user-friendly. It also features an open-ended question for users to provide qualitative feedback, offering deeper insights into their experience and potential areas for improvement.

The best part about this survey is that it is available on demand. Whenever a user wants to provide feedback, they can go to the resource center and click on “Give feedback on Userpilot.”

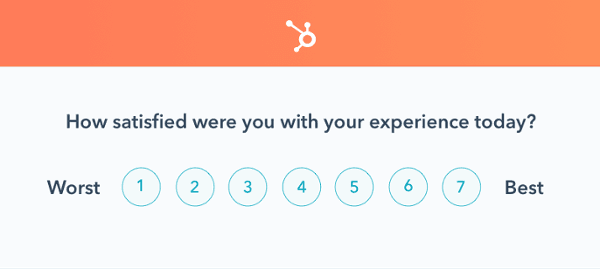

7. HubSpot’s customer satisfaction score survey

HubSpot sends customers this simple survey after important customer interactions. They ask customers to rate their experience on a 7-point scale, from worst to best.

What’s impressive about HubSpot’s customer feedback survey is its simplicity. The question is direct and easily understood by anyone.

The survey is subtle and not obstructive. It comes in a smaller modal design, which works well to grab the user’s attention, without covering the entire screen. Also, HubSpot’s clear branding is reflected in the survey.

The downside to this survey is a lack of qualitative data. This makes it difficult to collect extra details on what exactly the customer liked or hated.

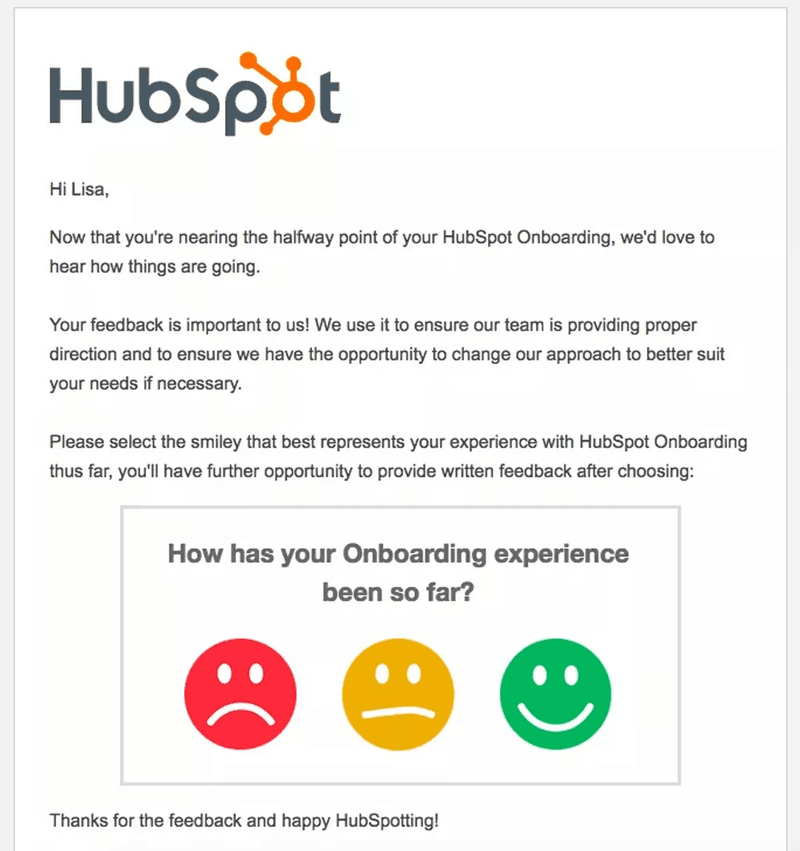

8. HubSpot’s mid-onboarding check survey

HubSpot understands that onboarding is critical to determining whether the user will become a customer or not. So, instead of playing guessing games, they measure the satisfaction of customers with the onboarding program.

Only this time, the survey is not triggered in-app but sent via email. In the email, the user is addressed by name and informed about what’s going on.

Then, HubSpot includes a simple survey and asks users to choose their answer from a series of emojis, color-coded from red to green for clarity.

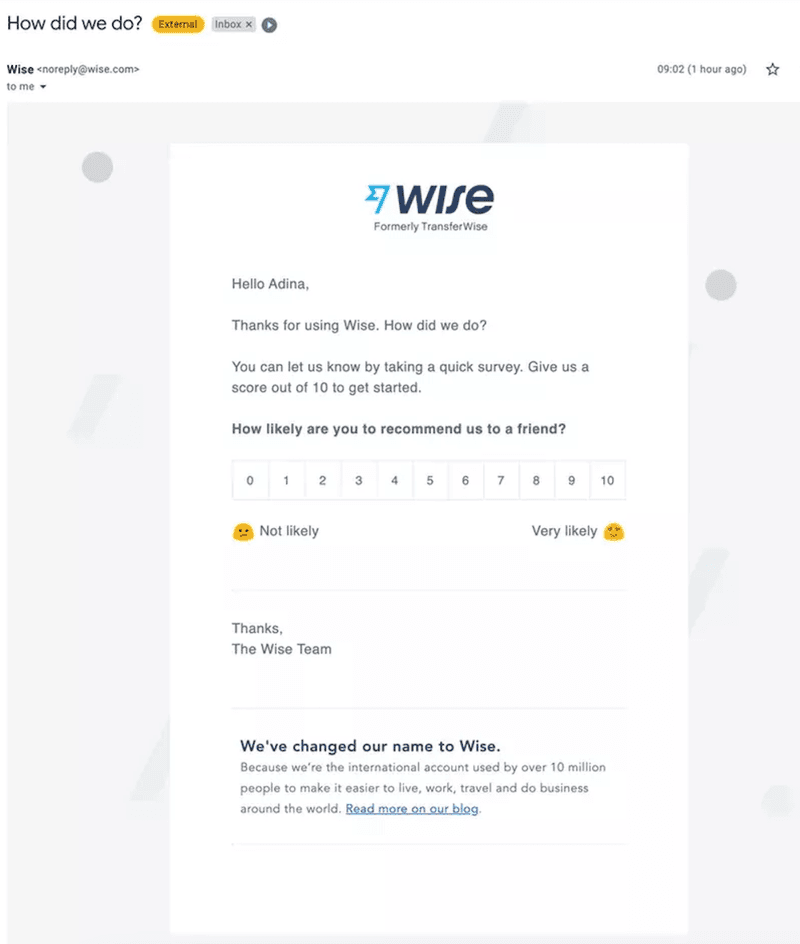

9. Wise’s transactional NPS survey

After a user has made a payment with Wise, the company sends a transactional survey via email. This is a good example of how to collect customer feedback in real time, as it comes right after the user performs the action when the memory is still fresh in their mind.

The survey poses a simple question, answered on a 10-point scale, which is not too demanding for most customers. To explain what each number means, Wise pairs words with emojis, so the scale is easy to understand at a glance.

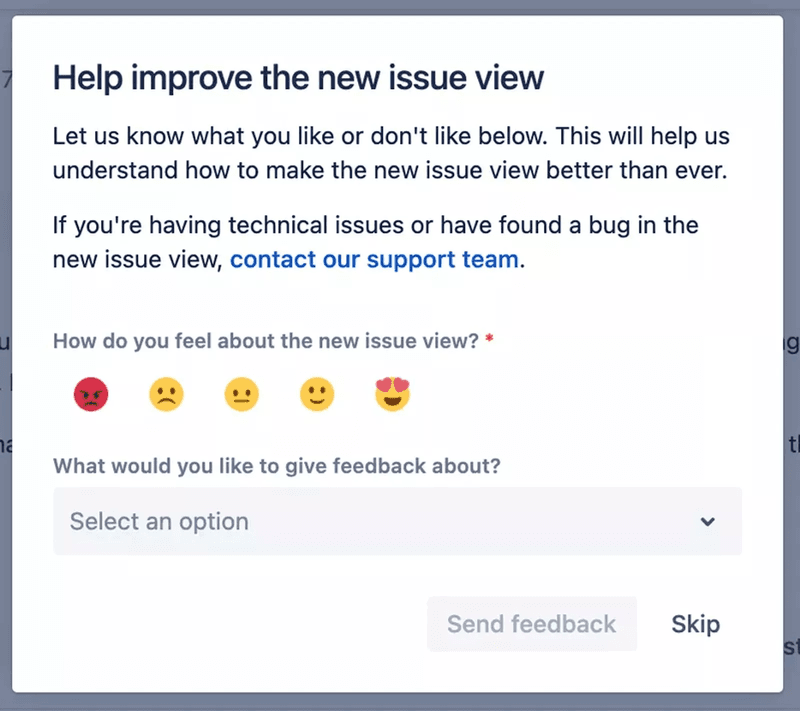

10. Jira’s customer satisfaction survey regarding a new issue

Jira sends a quick survey to understand how satisfied customers are with a new feature. This survey pops up after the user has engaged with the feature for a few minutes.

Customers who need an immediate response are referred to the customer support team with a single click, while customers who wish to leave a review can continue filling out the feedback form.

The survey question is presented in the hopes of capturing likely issues that might have come up when interacting with the new feature. Users can rate their satisfaction with emojis, and if they wish, they can give more detailed feedback.

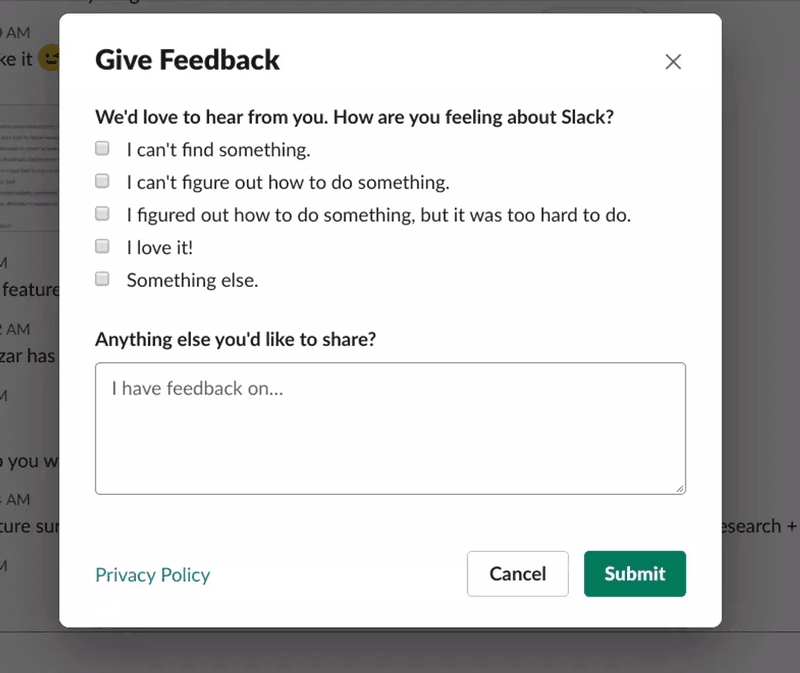

11. Slack’s overall customer satisfaction survey example

Slack programs a generic customer satisfaction survey to trigger at random intervals. This could be after the user has spent a certain period of time on the app, when they complete an action, or when they use an advanced feature.

Slack replaces the number scale with multiple-choice answers that are more direct and sound human. The options make it easy for Slack to figure out whether the user is having a UI problem, an experience problem, or a navigational issue.

This is followed by a simple question that lets users share any ideas, opinions, or frustrations that are secondary and not included in the multiple-choice question.

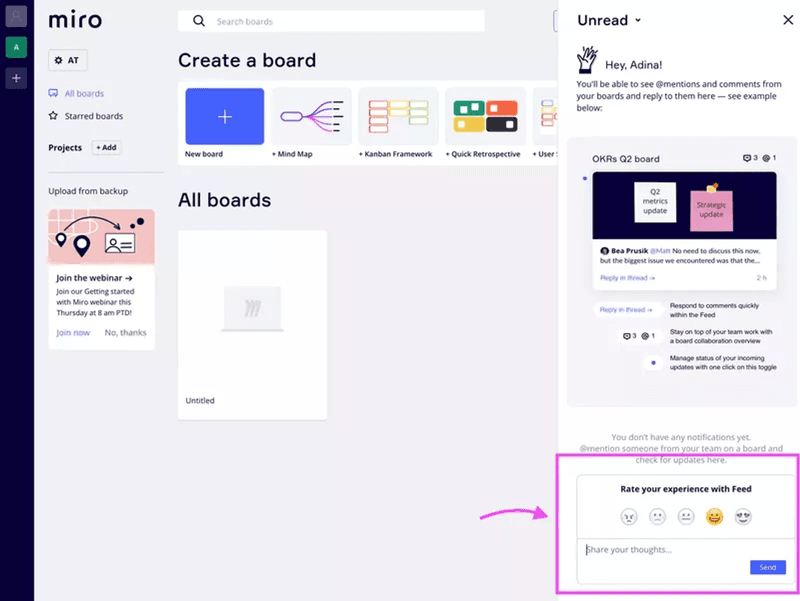

12. Miro’s passive customer satisfaction survey example

Miro’s customer satisfaction survey is a brilliant one because it’s always on. That means it is designed to blend in with the UI design as a passive feedback collection method.

So, instead of triggering the survey and interrupting the user, Miro embeds the CSAT survey into the customer experience and allows the user to decide when to fill it in.

Of course, you’d expect nothing less from Miro when it comes to visual presentation—that’s what their brand is about. So, using emojis is no surprise here.

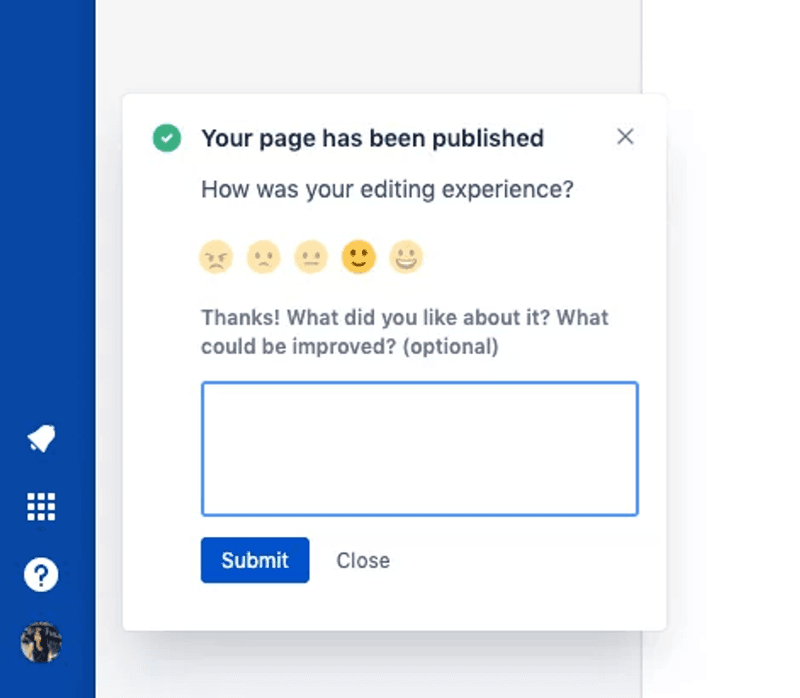

13. Jira’s real-time in-app customer satisfaction survey example

Collecting feedback in real-time, i.e., while the user is still in the experience or right after the interaction, is critical. The experience is still fresh in their minds, so the feedback is more accurate and genuine.

That’s why Jira doesn’t waste time and collects feedback right after the user engages with the feature. Surveys are triggered in-app and pop up as a modal.

14. Insurify’s visually appealing NPS survey

Insurify’s NPS survey asks customers how likely they are to recommend their product to a friend or colleague. This survey is sent via email in an attempt to reach both active and inactive users.

What makes this survey stand out is its design – it’s simple but still packs a punch. The color gradient on the scale helps users pick a number easily, while the illustration adds more substance to the email survey.

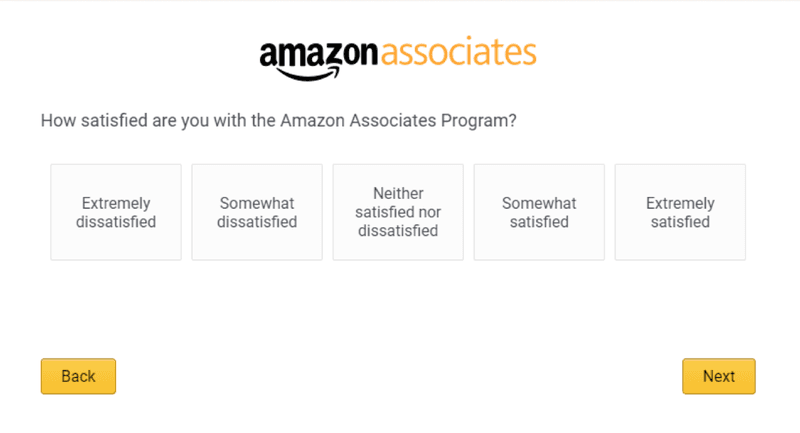

15. Amazon’s simple customer satisfaction survey

Amazon uses a straightforward customer satisfaction (CSAT) survey to gauge user sentiment. The survey asks a direct question: “How satisfied are you with the Amazon Associate Program?”

Customers respond on a simple 1–5 scale, where 1 represents “extremely dissatisfied” and 5 represents “extremely satisfied.”

This type of survey is easy to design and implement, making it a versatile way to measure satisfaction at key touchpoints throughout the customer journey. The best part is that it provides actionable insights with minimal effort from both you and the user.

20 customer satisfaction survey questions to leverage

Here is a list of 20 questions organized into four distinct categories to help you assess user satisfaction at different stages of the user journey.

General satisfaction questions

- How would you rate your overall experience with us?

- How easy was it to use our [product/service]?

- How likely are you to renew your subscription?

- What do you like most about our product?

- How easy was it to [complete a specific task]?

Customer loyalty questions

- How likely are you to continue using our [product/service] in the future?

- Are you considering switching to a competitor? If yes, why?

- What is the primary reason you continue to do business with us?

- Would you recommend our product to a colleague or friend?

- What factors would increase your loyalty to our company?

Customer experience questions

- How easy was it to find the information you were looking for on our app?

- Did our product perform as you expected?

- What is one thing that frustrates you the most about our product?

- How often would you like to hear from us regarding updates or offers?

- What aspect of our customer experience could be improved?

Customer service questions

- Was the support representative able to resolve your issue?

- How satisfied are you with the support you received?

- How quickly was your issue resolved?

- Did the support team provide helpful information?

- How can we improve your support experience?

Best practices for creating customer satisfaction surveys

Creating surveys to gauge customer satisfaction isn’t about asking a bunch of questions to the users. Sure, asking the right questions might boost the response rate initially, but that won’t be enough to build a positive feedback culture in the long run.

Follow these best practices to create surveys that engage users and help you improve the overall quality of feedback:

Keep the customer survey questions short and simple

The shorter the survey, the more likely your customers will complete it.

A lot of companies make the mistake of creating long, drawn-out surveys, which often leads to survey fatigue as the respondents tend to lose focus mid-way. The result? Users provide rushed answers, or worse, abandon the survey entirely.

That’s why you need to keep the surveys short, focused, and easy to understand.

Limit each survey to 3–5 questions for a higher completion rate. Make sure the questions use clear, straightforward language, free of technical terms or industry jargon that could confuse respondents.

For example, instead of asking, “How would you rate the UX of our application?” say, “Was it easy to use our app?”

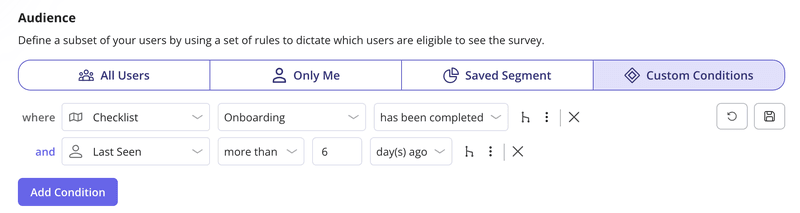

Segment your users before sending surveys

The effectiveness of your surveys hinges on delivering them to the right users. That’s where segmentation comes in. It ensures your surveys are relevant, personalized, and actionable.

Segment your customers based on their in-app behavior, company attributes, or even previous feedback.

You can then determine which surveys should be sent to which users.

Let’s say you’ve created an onboarding checklist that you’re proud of and want to know if users find it helpful. For this, it makes sense to create and send a survey to only those users who’ve completed the checklist in the first place.

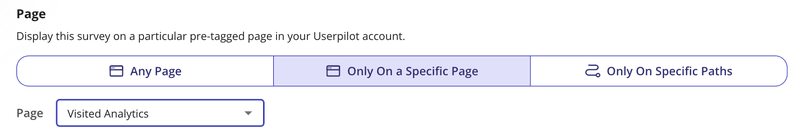
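The onboarding checklist example can be sketched as a simple filter. This is only an illustration: the `User` record, the `segment_for_survey` helper, and the event name `onboarding_checklist_completed` are all hypothetical, and a real product analytics tool would expose its own way of defining segments.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    """Hypothetical user record; field names are assumptions."""
    user_id: str
    plan: str
    completed_events: set = field(default_factory=set)


def segment_for_survey(users, required_event):
    """Keep only the users who have completed the given event."""
    return [u for u in users if required_event in u.completed_events]


users = [
    User("u1", "trial", {"onboarding_checklist_completed"}),
    User("u2", "pro"),
    User("u3", "pro", {"onboarding_checklist_completed", "report_exported"}),
]

recipients = segment_for_survey(users, "onboarding_checklist_completed")
print([u.user_id for u in recipients])  # ['u1', 'u3']
```

Only `u1` and `u3` finished the checklist, so only they would receive the checklist survey.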

Trigger customer feedback surveys contextually

Reaching the right people with your survey is not enough. It is also important to consider when the survey will appear on their screen.

Contextually triggered surveys not only get a higher response rate but also give you more actionable insights.

Imagine a scenario where a user signs up for a free trial, doesn’t log in for a few days, and then one day decides to give you a shot. Once they log in, an NPS survey pops up and asks them whether they would recommend you to their friends.

The user hasn’t even experienced any value with your app. How would they know if they like you enough to refer you to others?

But if you triggered the survey contextually (for example, right after they interacted with a key feature and completed their job to be done), it would make much more sense.
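The difference between the two scenarios can be expressed as a small eligibility check. The sketch below is a hypothetical illustration, not how any particular tool works: the event names (`session_started`, `key_feature_completed`) and the `min_sessions` threshold are assumptions chosen for the example.

```python
def should_show_nps(events, key_event="key_feature_completed", min_sessions=3):
    """Decide whether to show an NPS survey, given a user's event history.

    `events` is a list of event-name strings (hypothetical format).
    The survey is shown only once the user has completed the key event
    and has come back for at least `min_sessions` sessions.
    """
    sessions = events.count("session_started")
    return key_event in events and sessions >= min_sessions


# A trial user logging in for the first time has seen no value yet.
new_user = ["session_started"]
# A returning user who has just completed the key task.
engaged_user = ["session_started"] * 3 + ["key_feature_completed"]

print(should_show_nps(new_user))      # False
print(should_show_nps(engaged_user))  # True
```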

Follow up with customers and close the feedback loop

Don’t leave customers hanging after collecting feedback. Thank them for their response and let them know you’re working on their complaints.

For cases where you can’t make any immediate changes, still communicate with users. Taking this approach ensures you build trust and maintain user willingness to provide feedback.

Whether the feedback is positive or negative, it’s important to let customers know how their input is shaping your product. Positive feedback? Ask them for a review. Negative feedback? Interview them for additional insights. Feature request? Add it to the backlog.

Conclusion

When done right, customer satisfaction surveys can uncover how your users truly feel about your product, helping you address potential issues before they lead to churn.

We’ve shared top CSAT survey examples from leading companies to inspire you.

Ready to create your own customized surveys—without writing a single line of code? Book a Userpilot demo today and start collecting actionable feedback that drives results!

FAQ

What is a customer satisfaction survey?

A customer satisfaction survey is a tool used to measure how happy or satisfied customers are with your product, service, or overall experience. It helps businesses gather actionable insights directly from their users, ensuring they can make data-driven decisions to improve customer satisfaction and retention.

Why are customer satisfaction surveys important?

Here’s why customer satisfaction surveys are important:

- Identify customer pain points: These surveys help you pinpoint what’s working and what isn’t, allowing you to address issues before they lead to churn.

- Enhance customer loyalty: By understanding and acting on feedback, you show customers their opinions matter, building trust and long-term loyalty.

- Drive product improvements: Surveys provide insights into customer needs, helping you prioritize features or fixes that matter most to your users.

- Boost engagement: A well-timed survey can engage users by demonstrating your commitment to improving their experience.

- Measure success over time: Regular surveys allow you to track satisfaction trends, so you can gauge the impact of changes and identify areas for further improvement.

What are the different types of customer satisfaction surveys?

The three main types of customer satisfaction surveys are CSAT, NPS, and CES surveys.

- Customer satisfaction score surveys ask users to rate their satisfaction with a product, feature, or service on a scale, typically from 1–5 or 1–7.

- Net promoter score surveys measure customer loyalty by asking, “How likely are you to recommend our product to a friend or colleague?” Responses are scored on a scale of 0–10 and categorized as promoters, passives, and detractors.

- Customer effort score surveys measure how easy it is for users to interact with your product or resolve an issue.

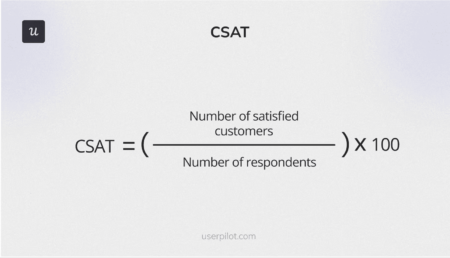
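For NPS, the usual convention is that scores of 9–10 count as promoters, 7–8 as passives, and 0–6 as detractors, and the final score is the percentage of promoters minus the percentage of detractors. A minimal sketch of that calculation, with an illustrative function name and made-up sample data:

```python
def nps(scores):
    """Return the Net Promoter Score (-100 to 100) for a list of 0-10 ratings."""
    if not scores:
        raise ValueError("NPS is undefined without any responses")
    if any(s < 0 or s > 10 for s in scores):
        raise ValueError("NPS ratings must be between 0 and 10")
    promoters = sum(1 for s in scores if s >= 9)   # ratings of 9 or 10
    detractors = sum(1 for s in scores if s <= 6)  # ratings of 0 through 6
    # Passives (7 or 8) count toward the total but not toward either group.
    return 100 * (promoters - detractors) / len(scores)


# Made-up sample: 50 promoters, 30 passives, 20 detractors.
scores = [10] * 50 + [8] * 30 + [3] * 20
print(nps(scores))  # 30.0
```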

How to calculate CSAT?

CSAT is calculated by dividing the number of satisfied customers by the total number of respondents and multiplying by 100 to get a percentage.

Imagine you run a CSAT survey asking customers to rate their satisfaction on a scale of 1–5, and 200 customers respond. Of those, 150 gave a score of 4 or 5 (considered positive). So, the CSAT will be 75%.

CSAT Score = (150 / 200) × 100 = 75%
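The same calculation can be sketched in a few lines of Python. The function name and the `satisfied_threshold` parameter are illustrative, and the sample ratings are made up to match the 150-out-of-200 example above.

```python
def csat_score(ratings, satisfied_threshold=4):
    """Return the CSAT percentage for a list of 1-5 ratings.

    A rating at or above `satisfied_threshold` counts as satisfied.
    """
    if not ratings:
        raise ValueError("CSAT is undefined without any responses")
    satisfied = sum(1 for r in ratings if r >= satisfied_threshold)
    return 100 * satisfied / len(ratings)


# 200 responses, of which 150 are a 4 or a 5.
ratings = [5] * 90 + [4] * 60 + [3] * 30 + [2] * 15 + [1] * 5
print(csat_score(ratings))  # 75.0
```

On a 1–7 scale, you would pass a different `satisfied_threshold`, depending on which ratings you decide to treat as positive.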