Customer Satisfaction Survey Best Practices, Examples & Questions

Embracing customer satisfaction survey best practices is critical to driving business growth.

You’ll consistently collect quality feedback and learn how to act on it to improve the user experience. This, in turn, will motivate users to stay with your brand, driving long-term loyalty and retention.

What are some of these best practices, and how can you implement them?

This article answers both questions. We discuss best practices and share examples from top SaaS companies.

What is a customer satisfaction survey?

A customer satisfaction (CSAT) survey is a tool businesses use to assess customer perceptions and satisfaction levels regarding product functionality, customer support, and other aspects of the company.

Why customer satisfaction surveys are important

Customer satisfaction surveys provide valuable insights and contribute to driving growth. Here are three benefits of conducting and analyzing CSAT surveys:

- Improve customer experience: By conducting customer satisfaction surveys, you gain direct feedback from your customers, allowing you to understand their needs, preferences, and pain points. This insight shows you changes you can make to boost the customer experience.

- Increase customer and client retention: When you make regular product improvements based on feedback, customers enjoy your platform more and are more willing to stick around.

- Measure customer loyalty: Track customer loyalty over time, evaluate the effectiveness of your customer retention strategies and identify opportunities to strengthen relationships throughout the customer lifecycle.

12 customer satisfaction survey best practices for collecting insightful data

How you approach your surveys determines the quality of responses and insights you can generate.

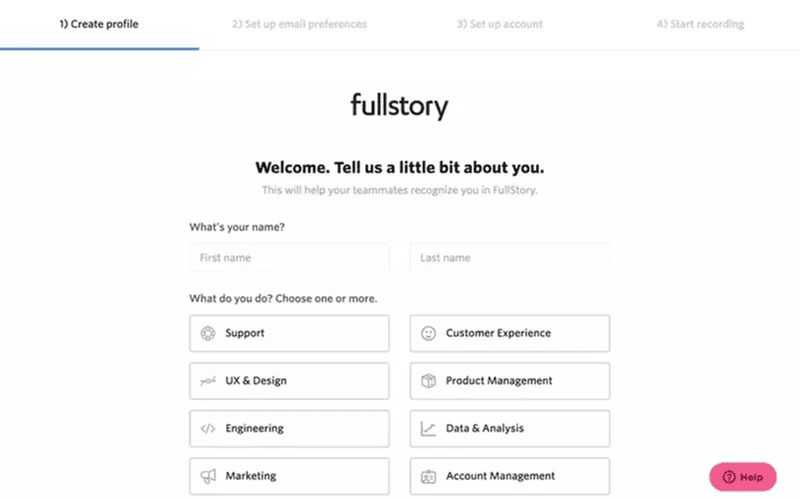

Use different types of surveys based on where the user is in the journey

Sending surveys contextually helps you collect more accurate data.

Here are three survey types for different touchpoints:

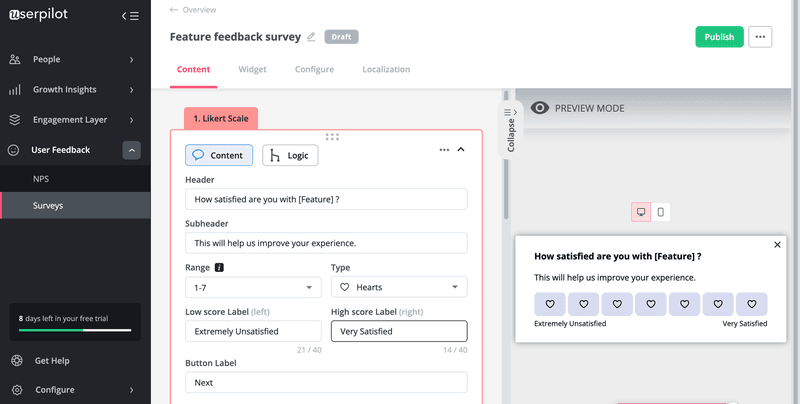

You can trigger CSAT right after a user engages with a feature. The aim of this survey type is to measure the customer’s overall satisfaction with their experience. You can use a simple question like the one below:

CES surveys help you measure the perceived effort of an interaction. Trigger it after specific customer events to identify friction points.

For example, by triggering questions that evaluate the ease of a support interaction, you can see how your support team is performing and follow up to learn which improvements customers want.

Another example: CES surveys triggered after account renewals can help you spot friction in your payment process and avoid involuntary churn.

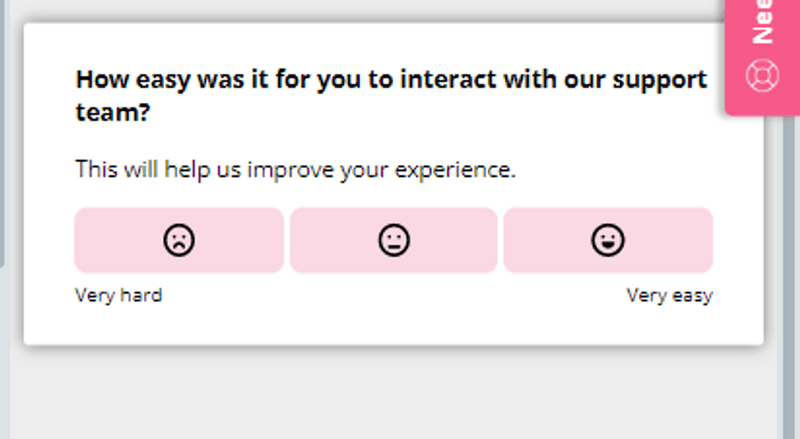

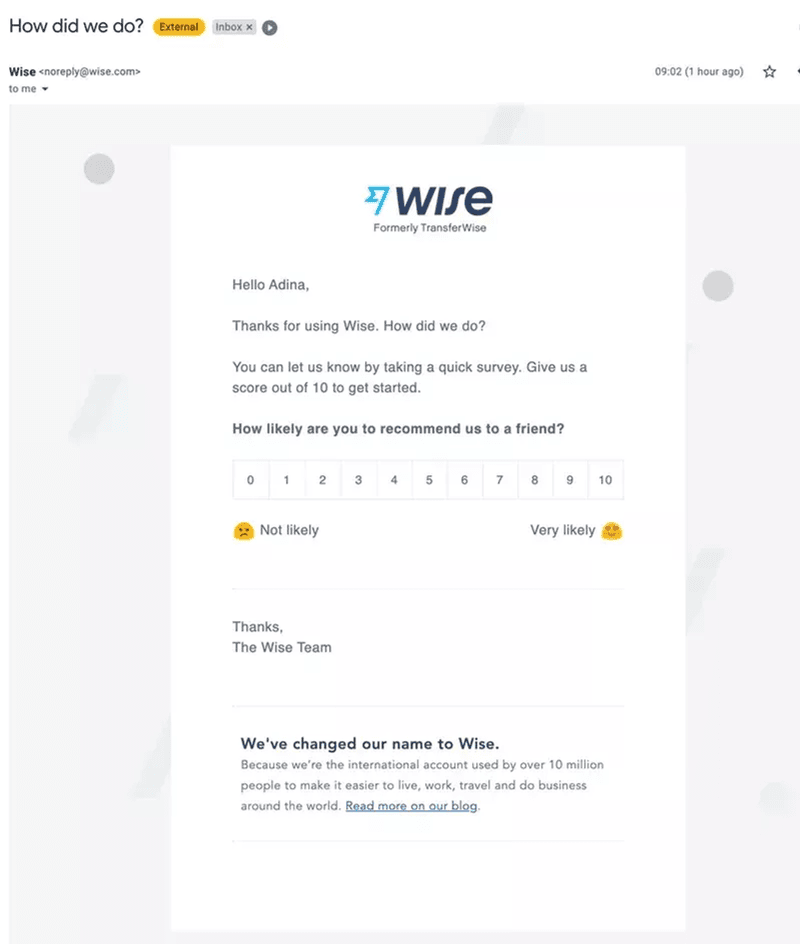

With NPS surveys, you can measure how a specific interaction affects customer loyalty or measure overall customer loyalty over time. You need both approaches to keep track of product health and ensure customers are always happy.

NPS evaluates customer loyalty by using an 11-point scale to ask how likely they are to recommend the business.
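The scoring behind that scale can be sketched in a few lines. The snippet below is a minimal illustration that assumes the standard NPS convention: ratings of 9 to 10 count as promoters, 0 to 6 as detractors, and the score is the percentage of promoters minus the percentage of detractors. The function name and the sample ratings are made up for the example.

```python
def net_promoter_score(ratings):
    """Return the NPS (from -100 to 100) for a list of 0-10 ratings."""
    if not ratings:
        raise ValueError("ratings must not be empty")
    if any(not 0 <= r <= 10 for r in ratings):
        raise ValueError("ratings must be between 0 and 10")

    promoters = sum(1 for r in ratings if r >= 9)   # scores of 9 or 10
    detractors = sum(1 for r in ratings if r <= 6)  # scores of 0 through 6
    # Passives (7 or 8) count toward the total but toward neither group.
    return 100 * (promoters - detractors) / len(ratings)


# Hypothetical responses: 5 promoters, 2 passives, 3 detractors.
sample_ratings = [10, 9, 9, 8, 7, 6, 3, 10, 5, 9]
print(net_promoter_score(sample_ratings))  # prints 20.0
```

Because passives still count in the denominator, a large group of lukewarm users pulls the score toward zero even when there are few detractors.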

Use multiple question types in your customer satisfaction survey

It’s important to keep in mind that customers aren’t always excited about answering survey questions, so you want to make it as easy for them as possible.

Use closed-ended questions to get quick answers from customers. These are survey questions with multiple-choice answers, yes/no options, or rating scales that customers can answer with a single click.

Employ open-ended questions to give customers space to describe their experiences and ideas in their own words. These questions have comparatively low response rates but provide actionable insights that can serve as improvement opportunities.

Remove bias from your customer satisfaction survey questions

Phrasing matters when it comes to customer surveys. Ensuring your tone and wording don’t influence or hint at a particular answer will give you more accurate data.

Avoid the following bad survey questions at all costs:

- Leading questions: These questions are phrased in a way that suggests a particular answer or guides the respondent toward a specific response. For example, the question, “Wouldn’t you agree that our platform is the best choice for improving your team’s productivity?” assumes the user considers the platform their best choice.

- Loaded questions: These customer satisfaction survey questions contain unwarranted assumptions or biases and aim to provoke a specific emotional or defensive response. Example: “Given the intense competition, how confident are you that our software can actually boost your sales?”

- Double-barrelled questions: These questions combine multiple questions or ideas into a single sentence. They make it challenging for the respondent to provide distinct answers for each part of the question. Double-barrelled questions potentially lead to confusion or incomplete responses. Example: “Are you satisfied with the speed of our software and the level of customer support?”

Collect customer satisfaction data using both active and passive surveys

As the name suggests, an active survey is a company-initiated approach to feedback collection. Here, you directly ask customers for feedback through in-app forms or email surveys.

Active surveys are contextual and great for capturing valuable real-time feedback while the memory is still fresh.

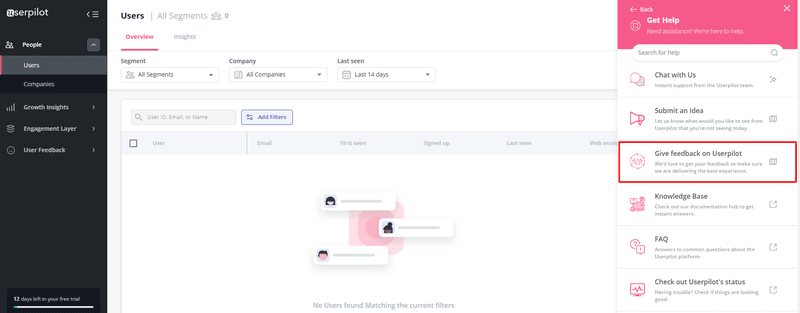

Reactive or passive surveys include always-on widgets that allow users to submit feedback when they want to. This approach builds trust and confidence because it shows users that you are always open to their feedback.

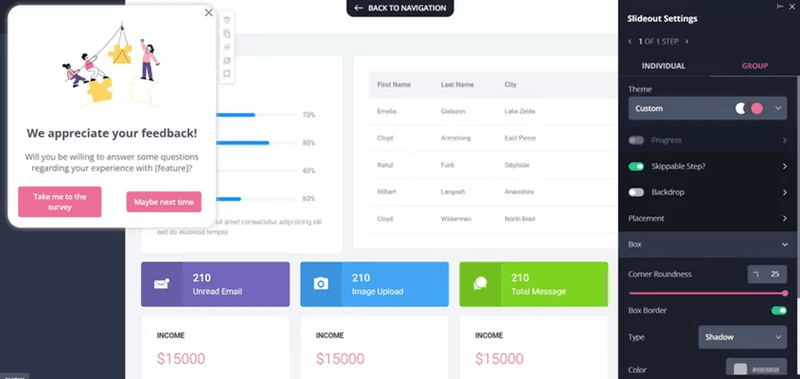

Pre-notify users before sending lengthy customer surveys

Long surveys often distract customers from what they’re doing and can frustrate them. A frustrated user will either abandon your survey or rush through it, skewing the results either way.

So always ask for consent first to ensure a frictionless survey experience and increase the survey response rate.

You can use a subtle banner or slideout like the one below:

Include a progress bar for higher completion rates

Don’t stop at getting the customer’s consent to the survey. Also make sure they’re motivated enough to complete it.

One way to do that is to include progress bars in your surveys. A progress bar gamifies the survey experience and sets expectations.

With progress bars, your users will be more likely to push till the end because they can see their progress and estimate the steps left.

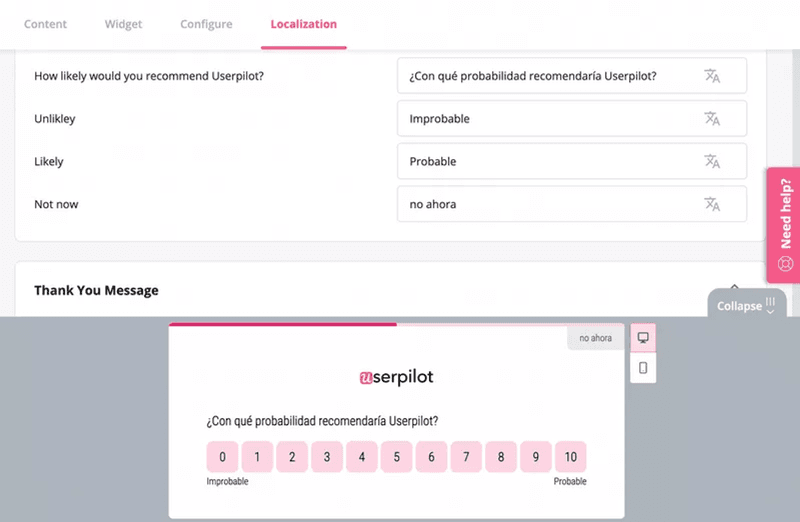

Make the survey more relevant to different target audiences with localization

Localization is about adapting to the culture and language of customers in different target markets. It helps you break the linguistic and cultural boundaries to collect more accurate data.

Aside from improving the accuracy of your data, localization generally improves the survey experience.

You can hire a localization team to help translate your content. Or use tools that allow you to localize your surveys. Userpilot is a good example:

Measure customer satisfaction across different channels

Diversifying your survey channels lets you compare customer satisfaction across various channels at different touchpoints. You’ll gather enough data to make important decisions about the customer experience.

Send surveys via:

- Social media

- Website chatbots

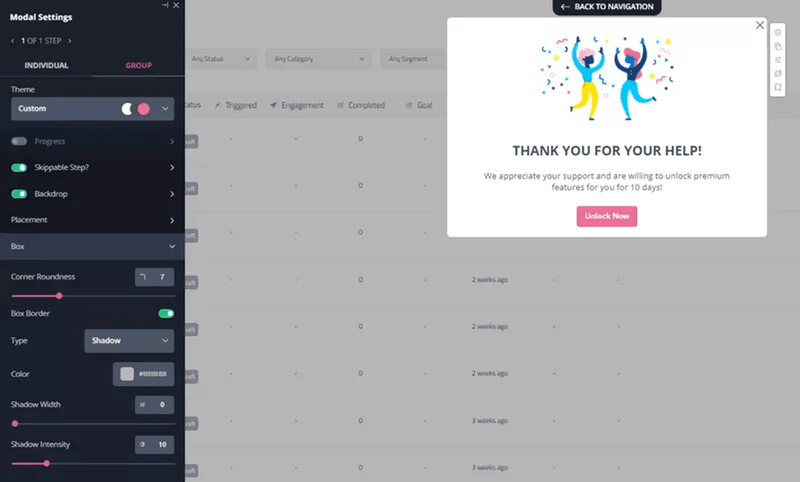

Offer incentives to survey respondents when collecting in-depth feedback

Incentives are a good way to show gratitude for the customer’s time and effort. Valuable ones will motivate customers to answer your future surveys without much push.

However, do this only when asking for detailed feedback from your loyal customers. Otherwise, respondents will become incentive-driven and rush through surveys just to get something in return.

What incentives can you offer?

It depends on your product and audience, but discounts and access to exclusive beta features generally work well.

Test the survey with a small group before sending it to your users

This strategy is a good way to get quick feedback on your survey design/questions and ensure you’re getting the desired effect.

For example, after testing your surveys with company members or a small segment of users, you might discover you’ve asked leading or loaded questions.

By adjusting based on feedback, you’ll increase the response quality when the survey gets to the rest of your user base.

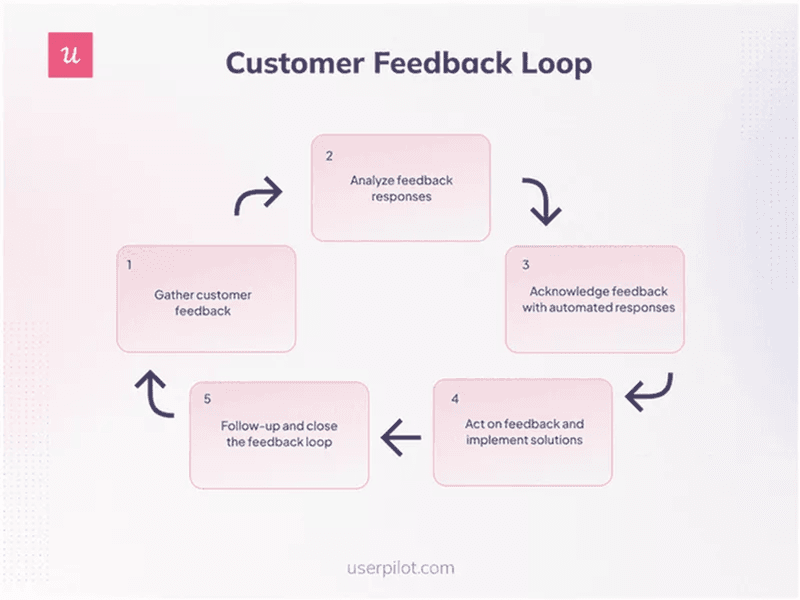

Close the loop to build customer loyalty

The feedback loop remains open until you get back to customers. Failing to close the loop can reduce customer trust, as users will feel their opinions aren’t valued.

So, keep communication open after receiving feedback. This can look like:

- Collecting more details to gain clarity

- Apologizing if needed and letting users know you’re working on the problem

- Implementing solutions and informing users

Best customer satisfaction survey examples from SaaS brands

This section shows you examples of SaaS brands that applied some of the above best practices to their surveys.

Hopefully, this gives you enough inspiration to create yours!

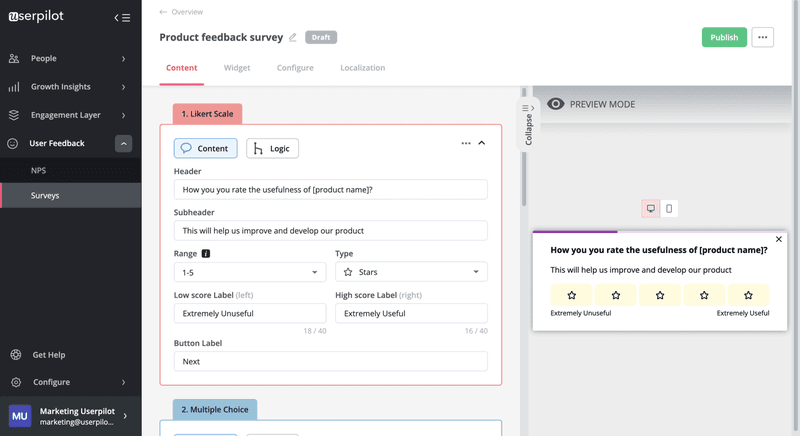

Userpilot’s overall customer satisfaction survey

This CSAT survey helps understand user satisfaction in a given period.

It’s a Likert survey with a simple star rating, making it easy for customers to respond. Adding a progress bar was a good move to ensure users advance to the next question.

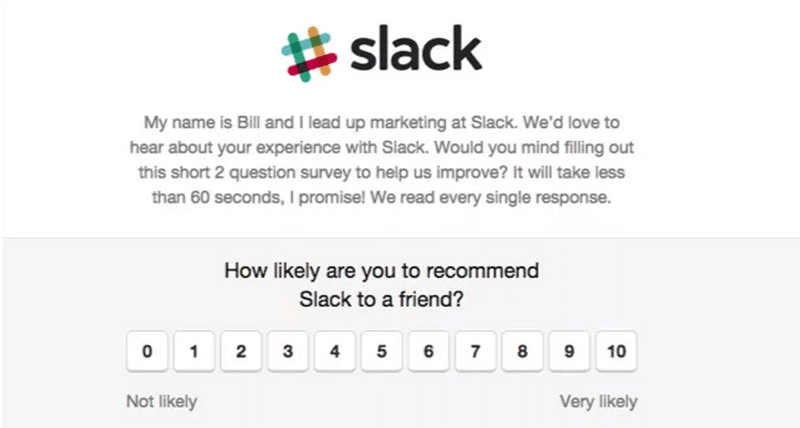

Slack’s personalized relational NPS survey

The NPS survey below would still work without its first paragraph.

However, adding a note from the marketing manager right before the numeric scale personalizes the experience and makes it feel like a conversation with a friend. Even uninterested users are more likely to submit considered feedback.

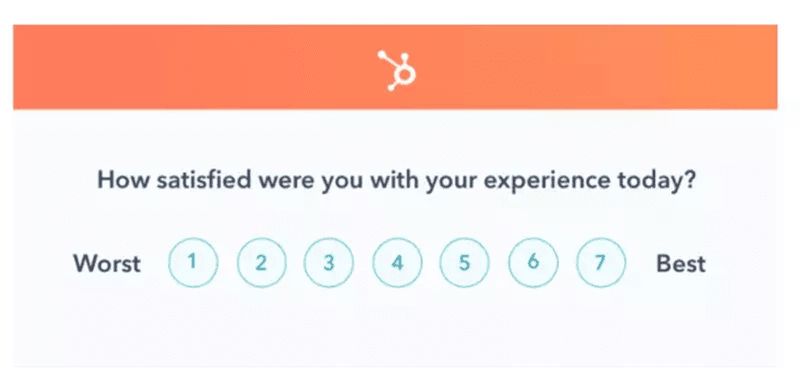

HubSpot’s simple in-app CSAT survey

HubSpot uses these simple surveys at multiple touchpoints to collect customer satisfaction data at a granular level.

The surveys have company branding and blend seamlessly into the UX.

By making surveys match the design and aesthetics of its platform, HubSpot provides users with a positive experience. Response rates are also likely to be higher, as the customer won’t see the survey as something foreign.

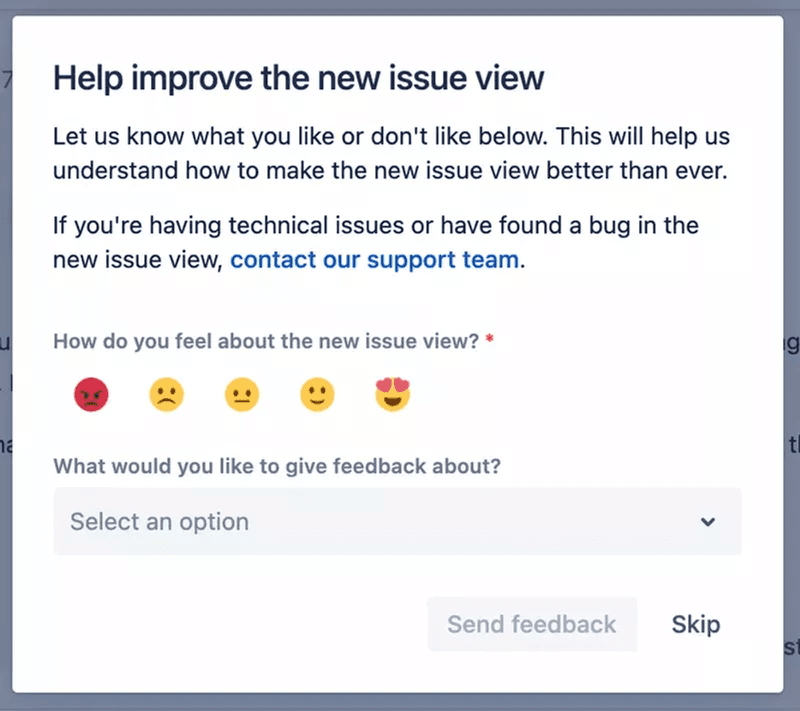

Jira’s multi-question new feature survey

Jira begins the survey with a catchy header, followed by a subheader that explains the header further and gives users a support link if they want to report an issue.

The survey is contextual, triggered only after users interact with the newly launched features. Using different types of questions was also a smart move. Users can quickly respond with smiley faces and provide qualitative feedback if they want.

HubSpot’s mid-onboarding email survey

This last one is an example of using external channels for your surveys.

HubSpot sends mid-onboarding email surveys to collect customer opinions about the onboarding process and improve it.

But this email isn’t just about feedback. It reminds new users of the platform and redirects them to the app so they can continue exploring and stick around.

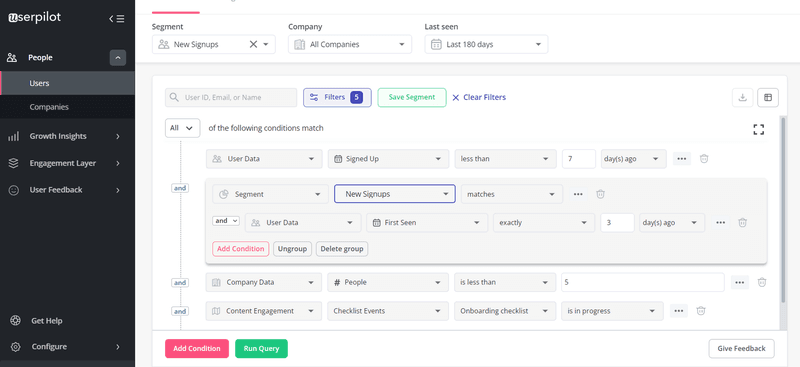

Creating customer satisfaction surveys with Userpilot

Userpilot is a product adoption tool with the features you need to build and analyze effective in-app surveys.

Build and customize your customer satisfaction survey template

Userpilot grants you access to customizable survey templates that will save you time and energy. You can also build from scratch if you want.

Additionally, our platform lets you add progress bars, customize surveys to your brand, and automatically trigger follow-up questions.

Analyze survey responses over time

With Userpilot, you can visualize your survey responses on a dashboard, making it easy to analyze survey results.

Also, you can see survey responses over time, which lets you quickly identify trends, patterns, and whether you need to tweak your questions for better results.
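To make the idea of tracking responses over time concrete, here is a rough sketch of the kind of aggregation involved. It is not Userpilot’s API. It assumes you have exported responses as (date, score) pairs on a 1 to 5 scale, and the function name and sample data are hypothetical.

```python
from collections import defaultdict
from datetime import date
from statistics import mean


def monthly_average(responses):
    """Group (date, score) pairs by month and return {'YYYY-MM': mean score}."""
    by_month = defaultdict(list)
    for answered_on, score in responses:
        by_month[answered_on.strftime("%Y-%m")].append(score)
    # Sort by month key so the trend reads chronologically.
    return {month: round(mean(scores), 2) for month, scores in sorted(by_month.items())}


# Hypothetical exported responses on a 1-5 satisfaction scale.
responses = [
    (date(2024, 1, 5), 4), (date(2024, 1, 20), 5),
    (date(2024, 2, 3), 3), (date(2024, 2, 17), 4), (date(2024, 2, 28), 2),
]
for month, average in monthly_average(responses).items():
    print(month, average)
```

With this sample data, the average drops from 4.5 in January to 3 in February, which is the kind of dip that would prompt a closer look at what changed in the product or in the survey questions.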

Conclusion

Customer satisfaction surveys involve more than phrasing questions and rolling them out to customers. Get them wrong and you’ll collect inaccurate results that deprive you of the insights you need to drive real growth.

Embrace tools like Userpilot to make things easier. Get a demo now and see how to start applying customer satisfaction survey best practices.