What is A/B testing in product management?

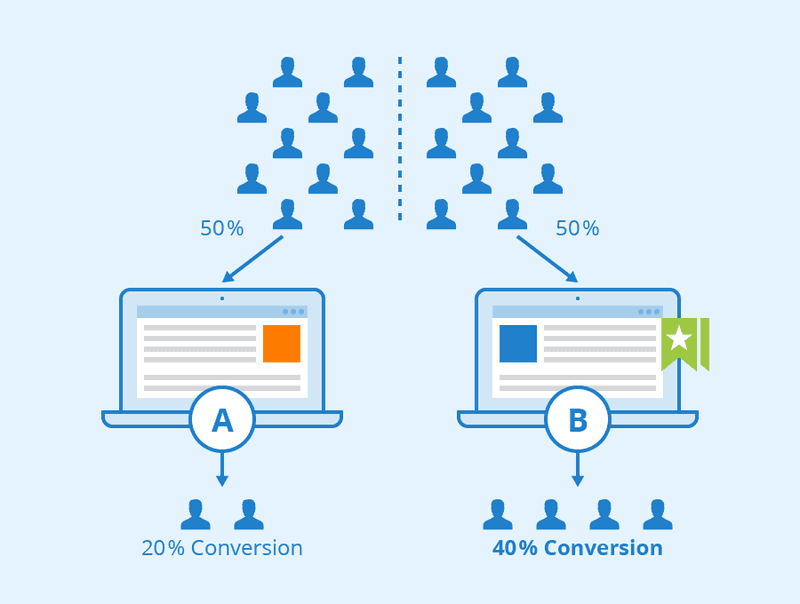

In A/B tests, two items or variations are compared to one another to see which performs better.

Product managers use A/B tests to develop products that will resonate with customers. A test’s purpose is to identify the best ways to improve your product. Maybe you come up with an idea for an improvement, or you can’t decide between two options. Testing lets you make that decision based on evidence rather than opinion.

A/B testing vs. multivariate testing

Multivariate testing means testing variations of multiple variables at the same time to determine which combination performs best among all the possible combinations. These tests are more complicated than standard A/B tests and are best suited for companies with a large user base, because every combination needs enough users to produce a reliable result.
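To see why multivariate tests need so much traffic, it helps to count the combinations. Here is a minimal Python sketch; the page elements and their variations are hypothetical and only illustrate how quickly the number of versions grows.

```python
from itertools import product

# Hypothetical page elements and the variations of each one under test.
variables = {
    "headline": ["Save time", "Save money"],
    "button_color": ["green", "blue", "orange"],
    "hero_image": ["photo", "illustration"],
}


def all_combinations(variables):
    """Return every combination of variations as a list of dicts."""
    names = list(variables)
    return [dict(zip(names, values)) for values in product(*variables.values())]


combinations = all_combinations(variables)
print(len(combinations))  # 2 * 3 * 2 = 12 combinations to compare
```

With 12 combinations, each one is seen by roughly a twelfth of your test users, while a standard A/B test splits the same users between only two versions.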

There is no set right or wrong answer when it comes to multivariate and A/B testing; rather, they are two different approaches to solving the same problem.

A/B testing vs. usability testing

Usability testing and A/B testing are both aimed at improving conversion rates.

In a nutshell, usability testing reveals users’ motivations for their actions. Meanwhile, A/B testing is used to determine what works best on your app.

A usability test shows how people interact with the product, what problems they encounter while trying to achieve the desired outcome, and how those problems can be fixed. An A/B test compares two or more versions of a product to determine which performs better and drives more conversions.

Why is A/B testing important for product managers?

A/B testing empowers the product manager to make data-backed decisions for everything they do within the product. This enables them to optimize the user experience to lead to higher conversion and retention rates. Let’s delve deeper into how this works:

Enable decision-making based on data from real users

A/B testing shows you real data from your representative users and saves you from jumping to assumptions.

You’ll be more confident following hard data than your gut feeling—and this is important because every move you make in product development counts.

Understand the user behavior and optimize the user experience

By comparing multiple versions, you will get insights into how your customers engage with your product. You can determine what problems users encounter in each version and why they prefer one over the other. You can then apply what you learn to other parts of your product and user base to improve the user experience.

Boost user engagement and increase user retention

A/B testing tells you what your representative users like the most. Rolling out the winning version to the rest of your users will drive better engagement because it’s what most of them want.

Repeated engagement results in product adoption and the latter translates into retention in SaaS.

A/B testing in product management use cases

There are multiple use cases for A/B testing in a SaaS product. You can test the performance of your in-app messages, educational content, and other elements of the product.

Product messaging

Display variations of your product messaging to see which resonates the most with users. You can test design and copy length as well as different formats. For instance, if feature adoption is higher in the group that got a pop-up announcement than in the group that got introductory tooltips, you’ll know which approach works better.

You can segment users into different cohorts by their jobs to be done, milestones reached, and other characteristics and show relevant messages to each user segment:

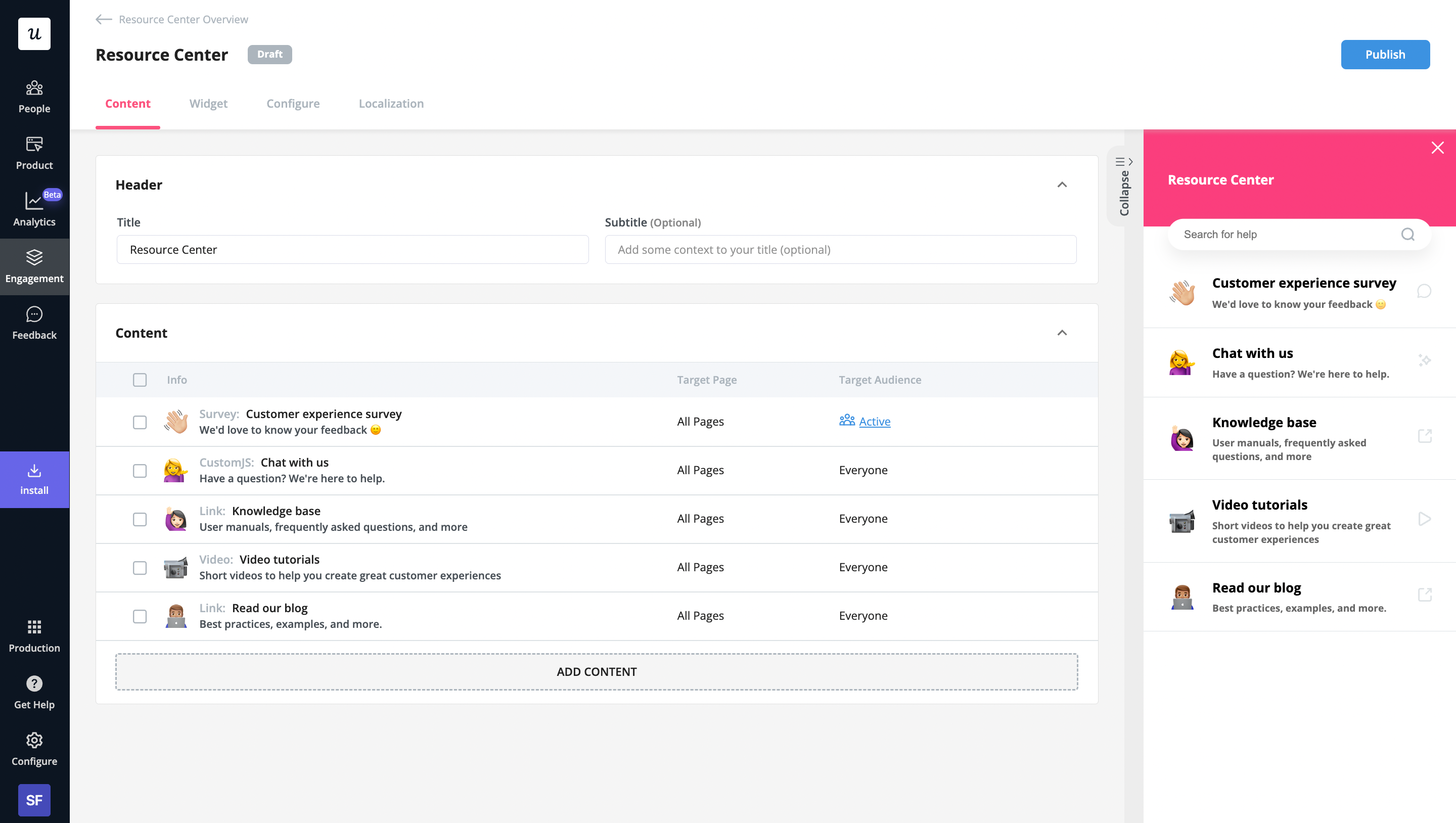

Resource center

Testing the effectiveness of your educational materials can help you discover what helps your customers learn more effectively. Do they prefer visual content such as video tutorials? Or maybe a FAQ section is what they engage with more. You never know until you test.

Once you determine which type of content they prefer and interact with, you can create more of it.

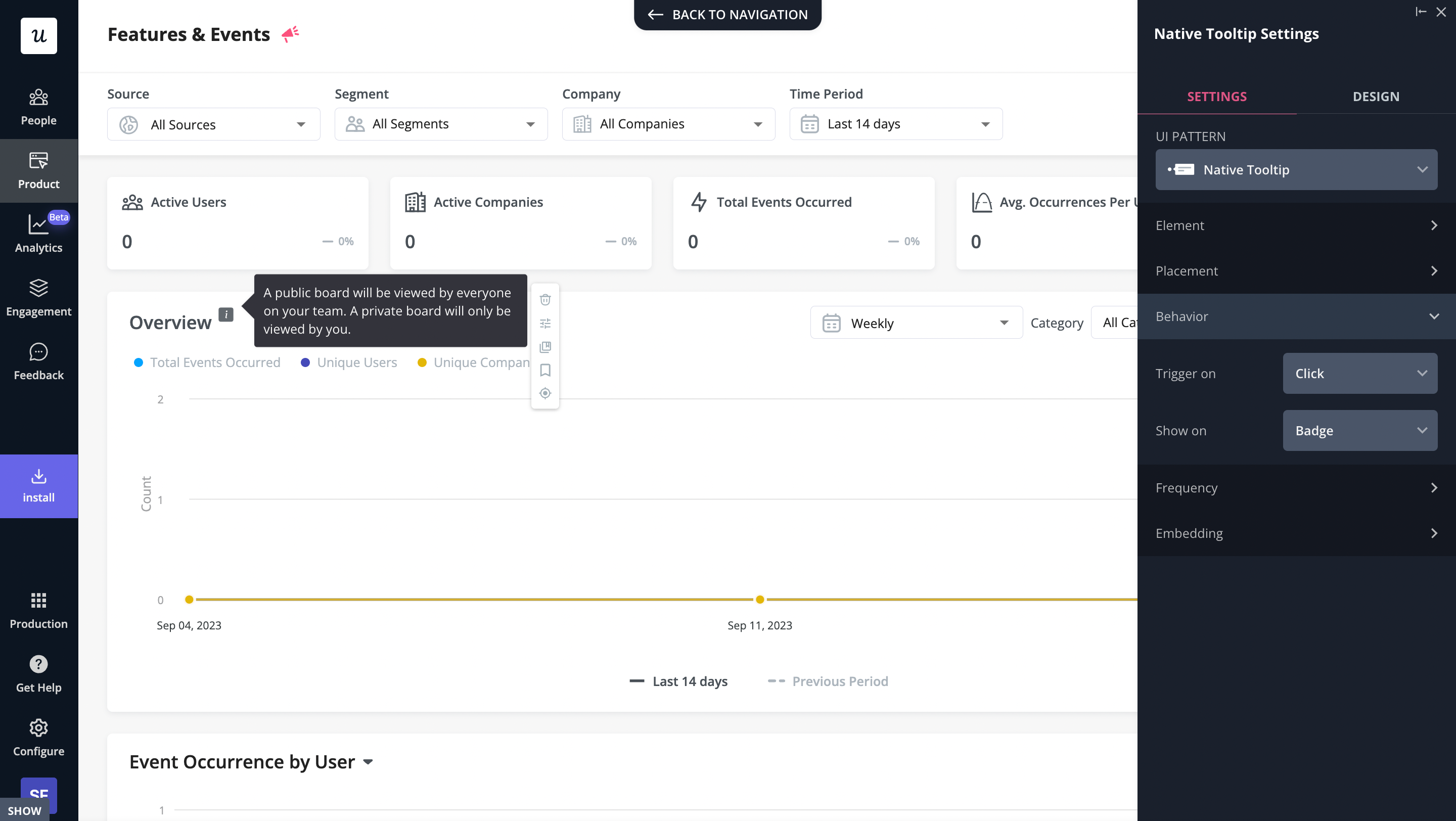

Onboarding flows

When it comes to onboarding, you want to shorten the time to value and get users to the aha moment as quickly as possible.

A/B testing is a fantastic capability for gathering valuable data on which patterns are the most effective – this is crucial for generating more user engagement. You can tailor your onboarding experience accordingly and understand how different in-app experiences help users reach milestones faster.

For example, show a slideout to half of your users and a tooltip to the other half. You can experiment with different calls to action, in-app experiences, tutorials, or even progress bars to find out which version helps retain more users.

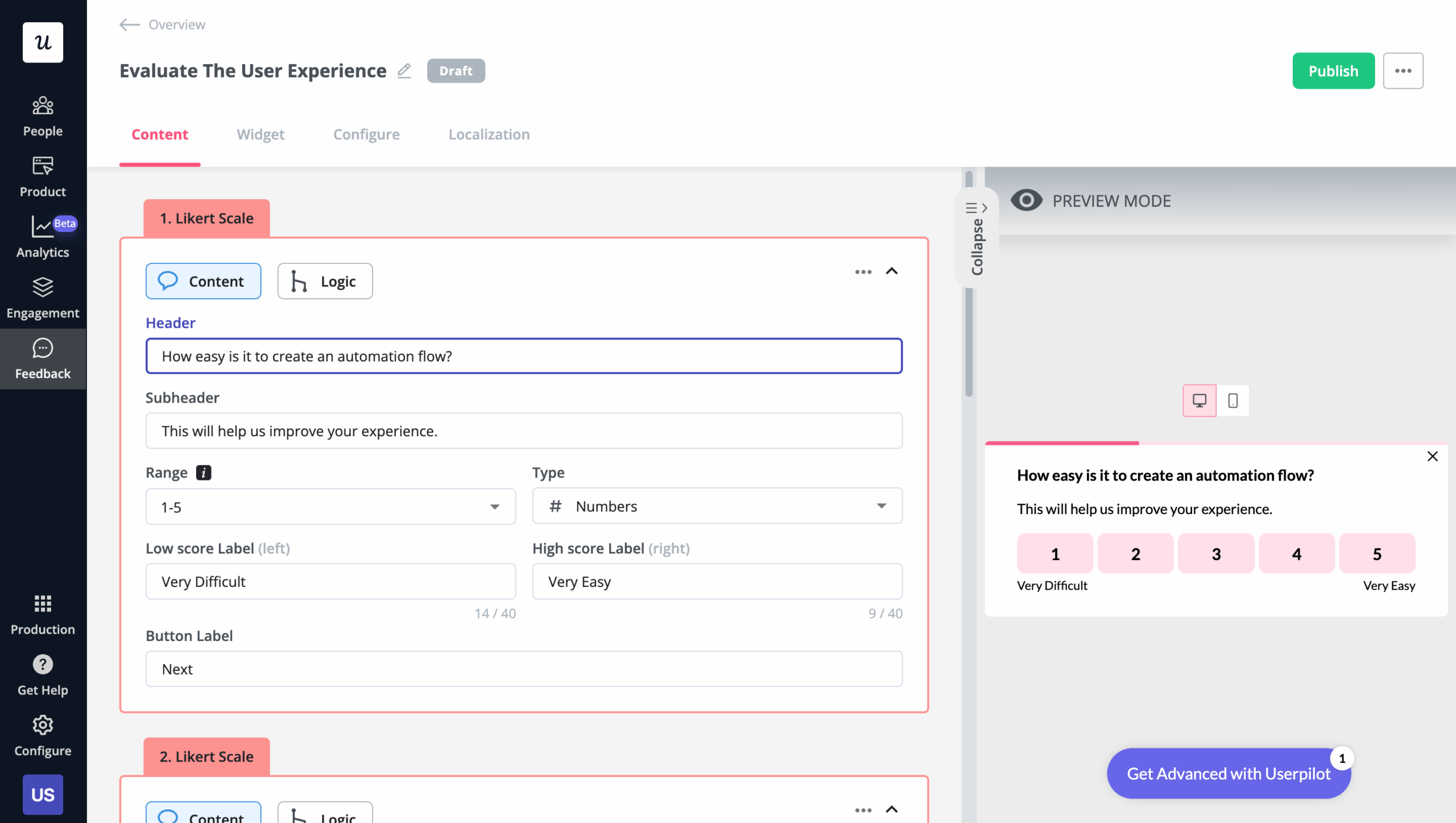
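If you are curious how a 50/50 split like this can work under the hood, here is a minimal Python sketch. It assumes each user has a stable ID; the function name, the experiment name, and the variant labels are hypothetical, and this is not how any particular tool implements it. Hashing the user ID keeps each user in the same group every time they log in.

```python
import hashlib


def assign_variant(user_id, experiment, variants=("slideout", "tooltip")):
    """Deterministically map a user to one variant of an experiment."""
    # Including the experiment name means a user can land in different
    # groups across different experiments.
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest, 16) % len(variants)
    return variants[bucket]


# Check that the split is roughly even across 10,000 made-up user IDs.
counts = {"slideout": 0, "tooltip": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "onboarding-pattern")] += 1
print(counts)
```

Because the assignment depends only on the user ID and the experiment name, you don't need to store who saw what in order to show each user a consistent experience.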

User feedback surveys

Experiment with different question types to see what type is more engaging to customers. Try different ways of triggering surveys, in-app or by email, and use different lengths of surveys to find out which combination is most likely to get filled out.

You can even test which surveys are preferred by users – those that drive quantitative data via close-ended questions or qualitative data via open-ended questions.

Testing multiple versions of landing pages

This is probably the most common use of A/B testing. A/B testing a landing page means comparing two slightly different versions of the same page, often over several rounds of tests, to see which one has the lower bounce rate.

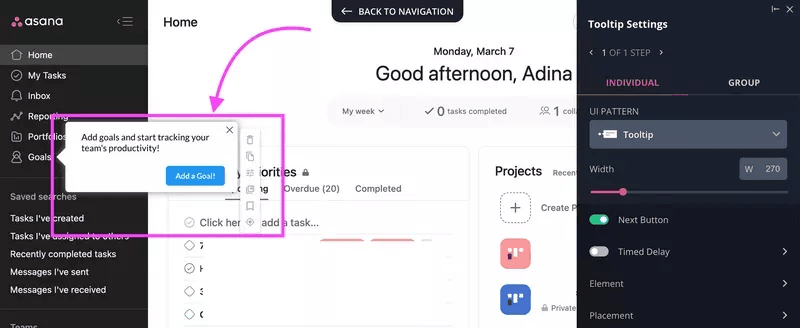

Test new features before building

This method is called fake door testing. It differs slightly from a typical A/B test, although it can be considered a variant of one. The difference is that you’re not comparing two variations; you just want to gauge interest in a new feature before building it.

The idea is to pretend to launch the feature and assess the market demand for it.

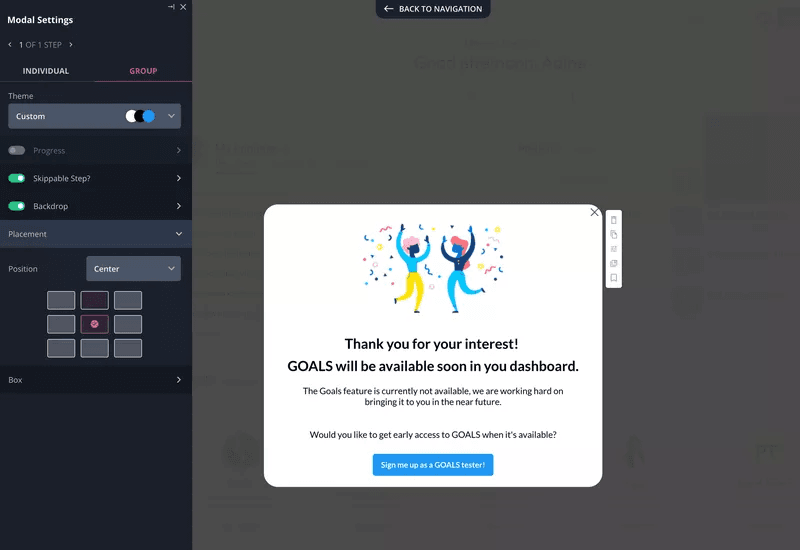

Check out the fictional Asana fake door test we built with Userpilot. Before the new idea is implemented, a tooltip presents the feature to users. Then, by tracking clicks on the “Add a Goal” button, you can see whether many users would find it useful and whether it is worth building.

After users click the button, the tooltip lets them know that the feature isn’t available yet and invites them to join beta testing.
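Measuring demand from a fake door test comes down to one ratio: of the users who saw the fake door, how many clicked it? The sketch below shows that calculation in Python. The event names and the sample data are made up for illustration; your analytics tool will have its own event format.

```python
def fake_door_interest(events):
    """Share of users who saw the fake door and clicked it.

    `events` is a list of (user_id, event_name) pairs.
    """
    seen = {user for user, name in events if name == "tooltip_shown"}
    clicked = {user for user, name in events if name == "add_goal_clicked"}
    if not seen:
        return 0.0
    # Count each user once, and only if they were shown the tooltip.
    return len(clicked & seen) / len(seen)


# Made-up sample data: four users saw the tooltip, two of them clicked.
events = [
    ("u1", "tooltip_shown"), ("u1", "add_goal_clicked"),
    ("u2", "tooltip_shown"),
    ("u3", "tooltip_shown"), ("u3", "add_goal_clicked"), ("u3", "add_goal_clicked"),
    ("u4", "tooltip_shown"),
]
print(fake_door_interest(events))  # 2 of 4 exposed users clicked -> 0.5
```

Counting unique users rather than raw clicks keeps one enthusiastic user from inflating the result.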

How to conduct an A/B test

We discussed what A/B testing is and why it’s important for product adoption and engagement. However, how do you actually do it?

Develop a hypothesis and define key metrics

Start by deciding exactly what you want to test. You can’t run a successful A/B testing campaign if you don’t have a hypothesis you want to try out.

You also need to decide how the results will be measured. Imagine running a test whose results you can’t track afterwards: that would be a waste of time and resources. To avoid this, define the key metrics that indicate a successful test before you start.

That said, your hypothesis could be as simple as having an idea of what might improve your onboarding flow or drive feature adoption. The test comes in to validate your idea and avoid mistakes.

Design the test variations

Test variations are different versions of an existing item that have the elements you want to test.

Let’s return to our onboarding flow example. You could have an existing flow (the control) but hypothesize that adding or removing some elements will drive engagement and increase adoption. This hypothetical onboarding flow is the test variation, and you’ll run it against the control to compare the results.

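Note that you can have as many test variations as you want, but each additional variation splits your users into smaller groups, so the test needs more traffic or more time.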

Run the A/B test using a tool

It is now time to send out the different versions to randomly selected users and see how they react. As a product manager, you’ll need to decide how long to run the test and how much data to collect.
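A rough way to estimate how much data to collect is the standard sample size formula for comparing two conversion rates. The Python sketch below is one common approximation, not the method of any specific tool. The baseline rate, the expected rate, the 5% significance level, the 80% power, and the daily traffic figure are all assumptions you would replace with your own numbers.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate users needed in each group to detect the given lift."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance
    z_beta = NormalDist().inv_cdf(power)
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_to_run(users_per_variant, variants, daily_users):
    """Days needed to fill every group, given eligible users per day."""
    return math.ceil(users_per_variant * variants / daily_users)


# Hypothetical goal: lift conversion from 10% to 12%.
n = sample_size_per_variant(0.10, 0.12)
print(n, days_to_run(n, variants=2, daily_users=500))
```

The smaller the improvement you want to detect, the more users you need, which is why tests on small changes often have to run longer.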

You can run A/B tests manually, but you would need to make every change in the code yourself and risk breaking it. So, it’s better to use a tool specialized for A/B testing (we’ll discuss some of them shortly).

Analyze the test results

The last step is data analysis. Collect all the data from your test and use them to determine which variation earned the most positive response or the greatest degree of engagement from your users.

The A/B testing tool you use should be able to handle any statistical analysis you need, so this last step shouldn’t be too challenging.
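If you want to understand what the tool is doing behind the scenes, one widely used check is a two-proportion z-test, which tells you whether the difference between two conversion rates is likely to be more than chance. The Python sketch below is a simplified illustration with made-up numbers; real tools may use different statistical methods.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Compare conversion rates of control (a) and variation (b).

    Returns (rate_a, rate_b, z, p_value) for a two-sided test.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if std_err == 0:
        raise ValueError("Cannot test: no variation in the outcomes.")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Made-up results: 400 of 4,000 control users converted vs. 480 of 4,000.
rate_a, rate_b, z, p_value = two_proportion_z_test(400, 4000, 480, 4000)
print(f"control={rate_a:.1%} variation={rate_b:.1%} z={z:.2f} p={p_value:.4f}")
```

A p-value below the threshold you chose before the test (0.05 is a common choice) suggests the difference is unlikely to be random noise.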

The best tools for running A/B tests

A/B testing tools make the product management process a lot easier, and there are many of them in the market. This section reviews some of the most effective A/B testing tools and highlights their strengths and weaknesses.

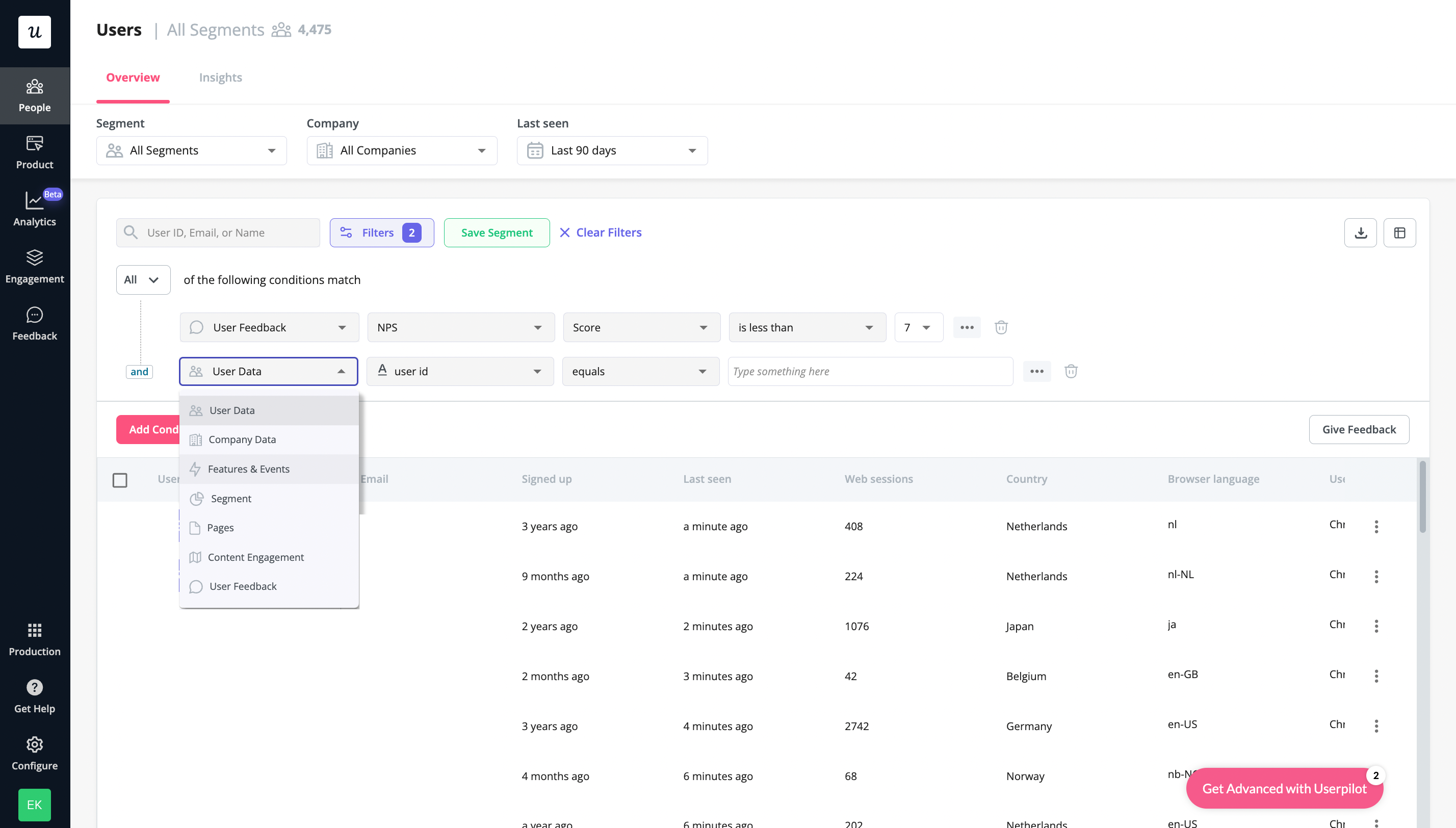

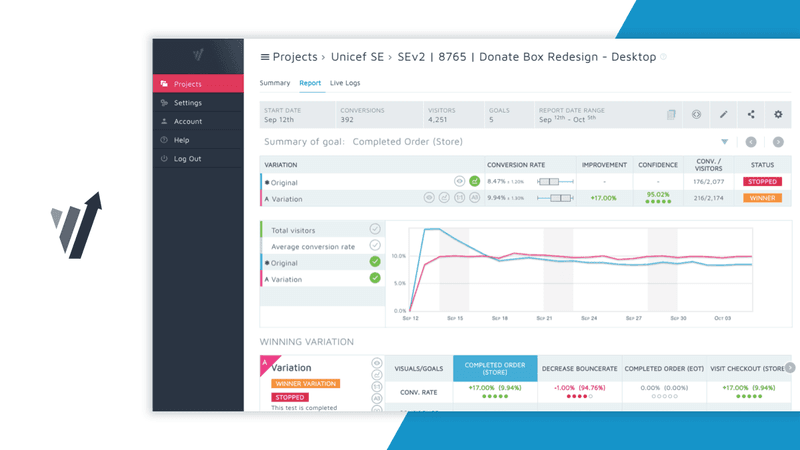

Userpilot

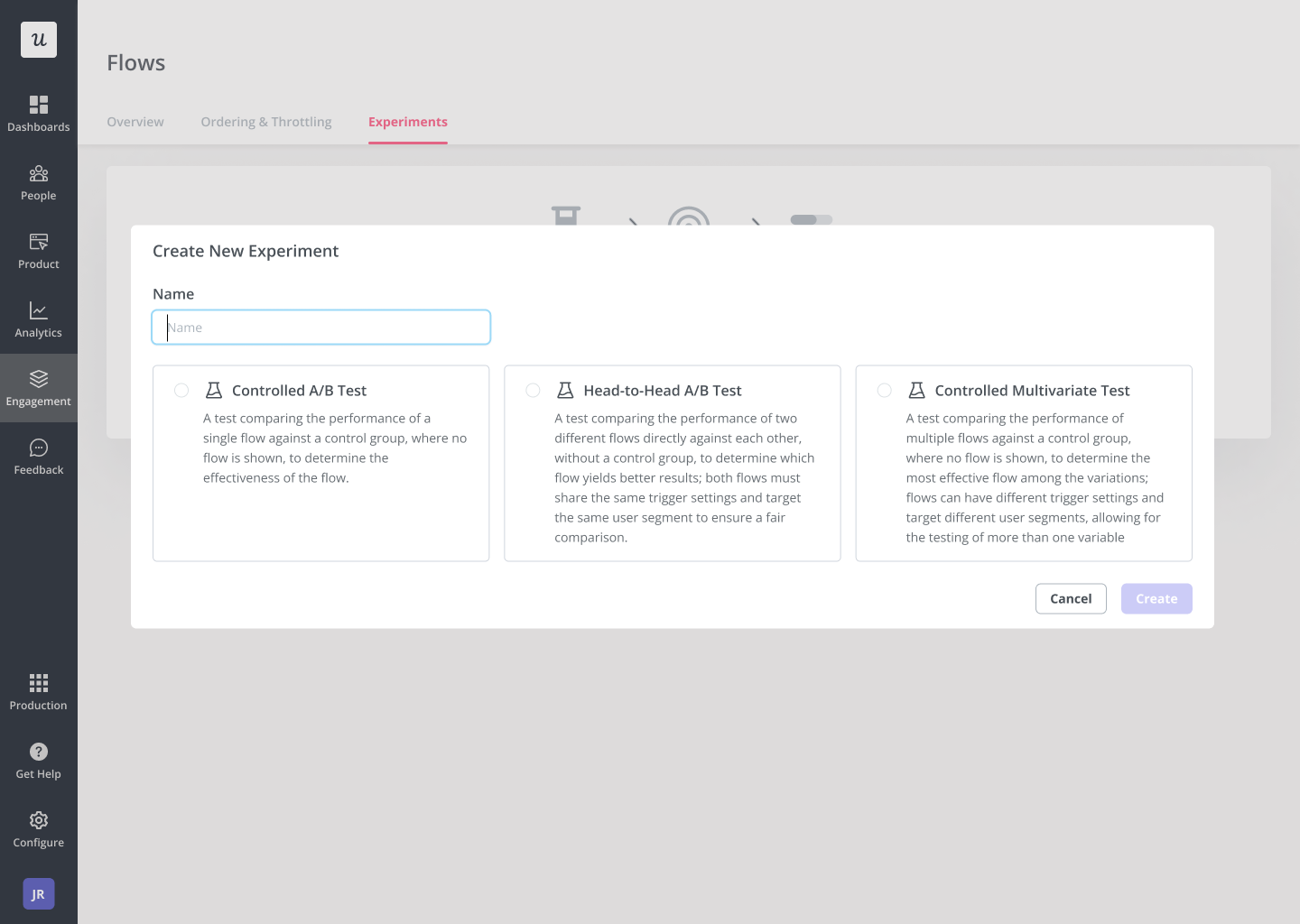

Userpilot is a product growth tool with powerful A/B testing functionality. With Userpilot, you can run two types of A/B tests as well as controlled multivariate tests.

Here is what you can test:

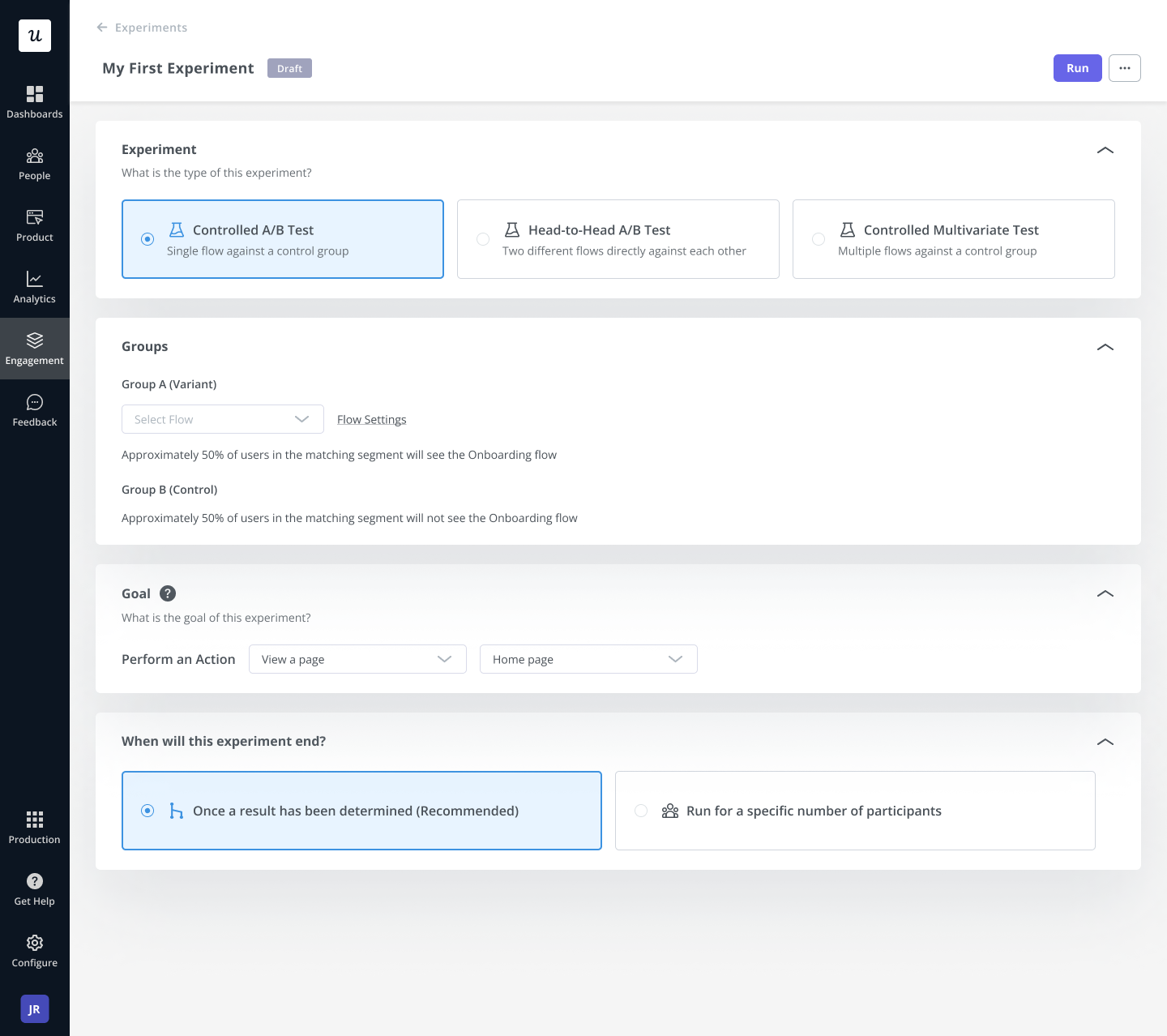

- Controlled A/B Test – compares the performance of one flow with that of a control group that does not see the flow.

- Head-to-Head A/B Test – compares two flows to determine which is more effective.

- Controlled Multivariate Test – compares the performance of multiple flows against a control group.

You can easily choose which flow you want to test, what the goal action for the test is (viewed a page/clicked a feature, etc.), and select a sample size of a certain number of users.

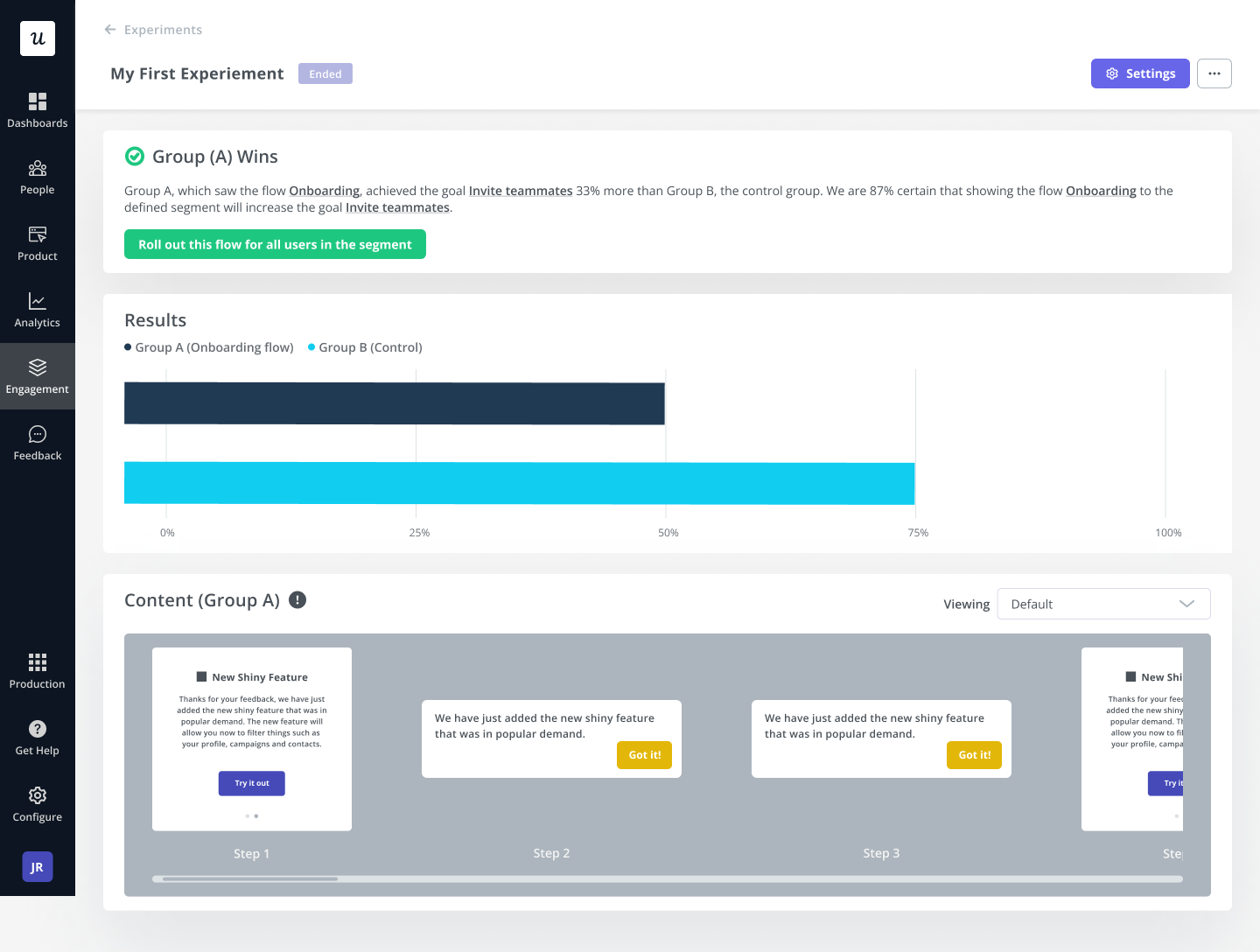

Once the test is complete, you can view the results in a bar chart and easily see which group won and by what margin.

A/B testing is available in the Growth plan and beyond. Book a demo to see the full functionality.

Omniconvert

Omniconvert is a growth marketing platform and Conversion Rate Optimization tool for e-commerce businesses. The software has A/B testing, advanced segmentation, and website personalization features—everything an e-commerce business needs to increase conversion rates.

Omniconvert allows you to run A/B tests on any device. The tool also has a debugging feature that helps you determine why your tests aren’t running as they should and helps identify any issues your test may have.

Pricing starts at $273 per month, but you can use a 30-day free trial to determine if the tool is worth it.

The downside: you need developers to integrate code into your app. Its conversion optimization platform is called Explore and Omniconvert calls this “the CRO tool for developers,” which is justified by a number of its specialist features.

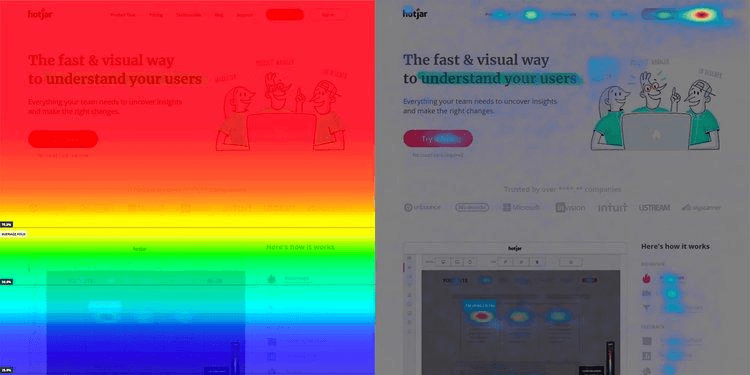

Hotjar

Hotjar is not an A/B testing tool but has features that complement A/B testing.

A/B tests help you quickly identify what your users like and dislike, but they lack context. They tell you what works and what doesn’t, but not why. By gathering qualitative product experience insights, product managers can fill the gaps and gain a deeper understanding of how the product is being used.

You can use Hotjar to find out why customers prefer one variation to the other. The tool’s Session Recordings and Heatmaps help you visualize how users interact with specific pages or features of your user interface. With this, you can tell what attracts, distracts, and confuses your users. These qualitative insights can then inform your next product decisions.

Hotjar has a free version, and the paid plans start at $32/mo.

Conclusion

Product managers are constantly pressured to keep products updated and make customers happy. This is no easy job. You may think you know what your customers want, but you can’t be sure unless you test. A/B testing is a scientific method that makes the job of PMs easier.

Always be on the lookout for areas of improvement within the product. Come up with your hypotheses, conduct A/B tests, and make data-informed decisions.

Want to run effective A/B tests code-free? Book a demo call with our team and get started!